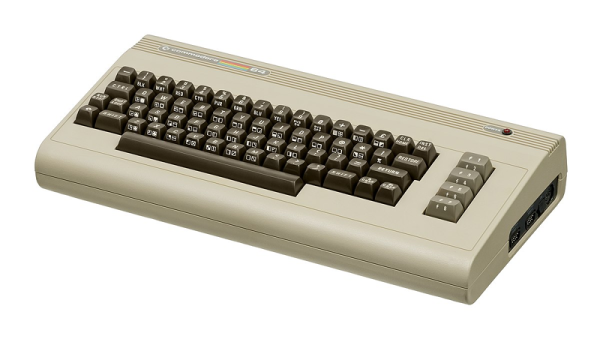

The Sinclair ZX Spectrum+2 was the first home computer released by Amstrad after it bought up Sinclair. It’s basically a Sinclair ZX Spectrum 128, but with a proper keyboard and a built-in tape drive. The one that [Mark] of the Mend it Mark YouTube channel got in for repair, however, is very much dead. A first inspection of the PCB made it obvious that someone had been in there before, replacing the 7805 voltage regulator and doing some work on other parts as well, which was promising. A broken joint on the 9 VDC input jack seemed like an easy fix, but afterwards the video output was garbled, which kicked off the real fault analysis.

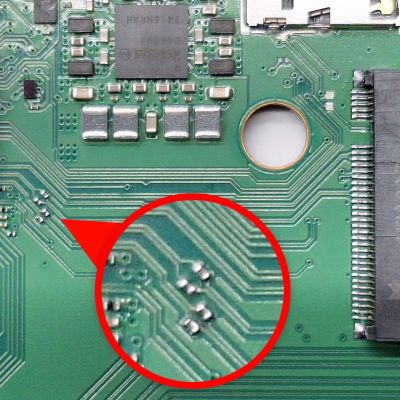

Fortunately, these systems have full schematics available, allowing for easy probing of the address and data lines. Based on this, the Z80 CPU was swapped out to eliminate a range of possibilities, but this did nothing to change the symptoms, and a diagnostic ROM cartridge didn’t even boot. Replacing a DS74LS157 multiplexer and trying different RAM chips also made no difference. This still left an array of options for what could be wrong.

Tracking down a short at one of the ICs seemed like a lucky break, but the video output remained garbled, leaving the exciting possibility of multiple faults still to find. This pattern continues for most of the rest of the video: through a slow process of elimination, the bugs are hunted down one by one, leaving a revived Spectrum+2 (with a working tape drive) in its wake, along with the realization that even with all through-hole parts and full schematics, troubleshooting can still be a royal pain.
