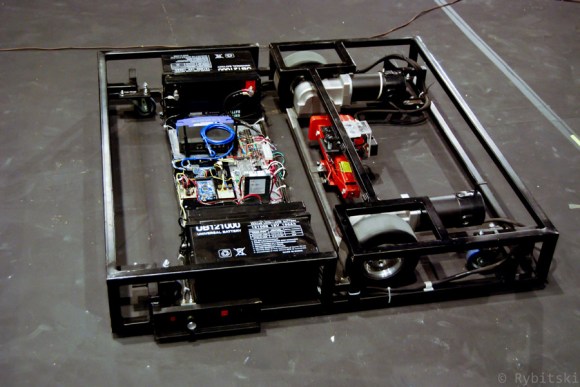

We love a good line-following robot project, and this one really hits the spot. It’s got sharp edges, gobs of solder bridging, and look at all those jumper wires! Despite its appearance, it puts in a performance that won’t disappoint.

It uses a dsPIC33 to read from half a dozen analog sensors on the bottom of the board. We’re not all that familiar with the chip’s features, but [Exapod] says it’s got an auto-scan feature he uses to read the sensors. This allows him to sample with 12-bit resolution from all six of them at about 30 kHz. No wonder the thing is so responsive in the demo video embedded below. The track he’s using is just some white printer paper with a fat circuit of black electrical tape placed in a somewhat squiggly pattern.
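For a rough idea of what a robot like this might do with six fresh readings, here is a minimal sketch of a weighted-centroid line estimate in C. It is not [Exapod]'s code. The function name `line_position`, the threshold, the position scale, and the assumption that a higher reading means a darker surface are all hypothetical choices made for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define NUM_SENSORS    6
#define ADC_MAX        4095   /* full scale of a 12-bit conversion */
#define LINE_THRESHOLD 400    /* hypothetical: minimum summed signal that counts as "line seen" */
#define SENSOR_SPACING 1000   /* hypothetical position units between adjacent sensors */

/*
 * Estimate where the line sits under the sensor row.
 * Assumption: a higher reading means a darker surface (the tape).
 * On success, writes a position from -2500 (under sensor 0) to
 * +2500 (under sensor 5) and returns true. Returns false when the
 * summed signal is too weak to trust, i.e. the line was lost.
 */
bool line_position(const uint16_t readings[NUM_SENSORS], int32_t *position)
{
    assert(readings != NULL && position != NULL);

    uint32_t total = 0;
    int32_t weighted = 0;

    for (int i = 0; i < NUM_SENSORS; i++) {
        /* Clamp anything above 12-bit full scale. */
        uint16_t r = readings[i] > ADC_MAX ? ADC_MAX : readings[i];
        total += r;
        weighted += (int32_t)r * ((int32_t)i * SENSOR_SPACING);
    }

    if (total < LINE_THRESHOLD) {
        return false;
    }

    /* Centroid of the readings, shifted so the middle of the row is 0. */
    int32_t centre = (int32_t)(NUM_SENSORS - 1) * SENSOR_SPACING / 2;
    *position = weighted / (int32_t)total - centre;
    return true;
}
```

A control loop could feed the returned position into whatever steering correction it uses. With readings arriving as fast as the article describes, averaging a few estimates before acting on them would also be an option.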

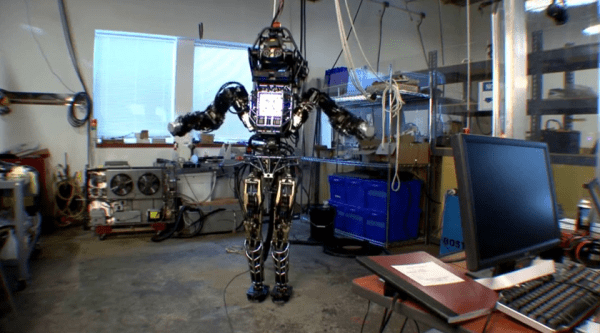

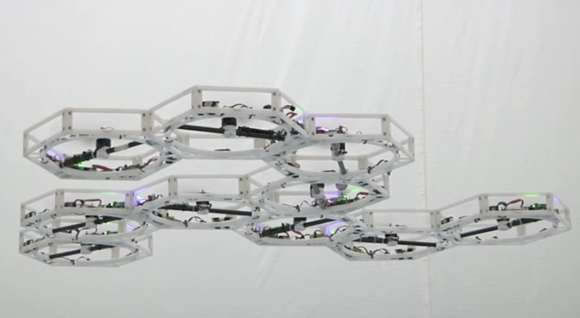

Line following is also a fun challenge to take on with toys. Here’s one that hacks a hexapod to follow the lines.