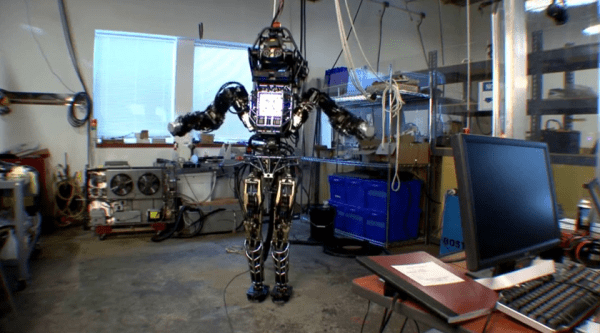

Boston Dynamics likes to show off… which is good because we like to see the scary-looking robots they come up with. This is Atlas, the culmination of their humanoid robotics research. The unveiling video includes a montage of the development process which is quite enjoyable to watch.

You should remember the feature in October which showed the Robot Ninja Warrior doing the Spider Climb. That was the prototype for Atlas. It was impressive then, but it has come a long way since. Atlas is the centerpiece of the DARPA Robotics Challenge, which seeks to drop a humanoid robot into an environment designed for people and have it perform a gauntlet of tasks. Research teams participating in the challenge are tasked with teaching Atlas how to succeed. Development will happen on a virtual representation of the robot, but to win the challenge you have to succeed with the real deal at the end of the year.