[Tom], who contributed the USB code to the Mooltipass project, and his friend [Ignatius] recently launched an Indiegogo campaign for the uMotio, a 3D gesture controller (Indiegogo link). [Tom] has spent much of his personal time helping the Mooltipass community, so we figured a nice way to thank him would be to help bring their great open project one step closer to becoming a widely available product.

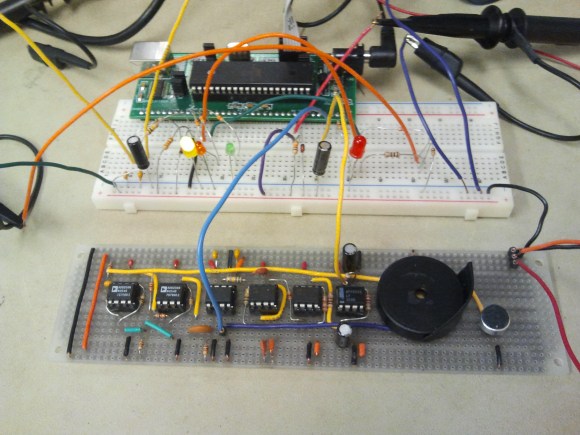

As you can see in the video embedded after the break, the uMotio is a plug-and-play device that enumerates as a USB HID joystick and keyboard plus a CDC serial port. It can be used in many different scenarios: gaming, computer control, home automation, music, and more. The platform is built around an ATmega32u4 and the much-discussed MGC3130 3D tracking and gesture controller, which together provide a detection range of 0 to 15 cm with a resolution of up to 150 dpi. Because the uMotio is Arduino compatible, adapting it to your own project should take very little time, especially with its dedicated expansion header and libraries. The uMotio blue even integrates an internal Li-ion battery and a Bluetooth Low Energy module.
