What is it that’s not quite either a plane or a boat, but has characteristics of both? There are probably a lot of things that fit that description, but the one that [Nick Rehm] is working on is known as an ekranoplan. Specifically, he’s looking to make the surface-skimming ground-effect vehicle operate autonomously.

If you think you’ve heard about ekranoplans around here before, you’d be right — we’ve covered a cool LIDAR-controlled model ekranoplan that [rctestflight] worked on about a year ago, and more recently, [ThinkFlight]’s attempts to make an autonomous ekranoplan that can follow behind a boat. The latter is where [Nick] enters the collaboration, and the featherweight foam ground-effect vehicle shown in the video below is his test platform.

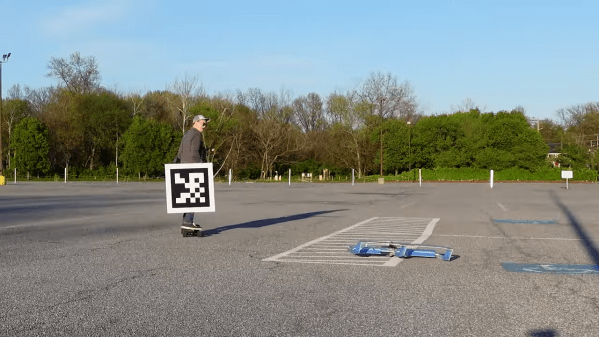

After sorting out the basic airframe design and getting the LIDAR integrated, he turned his attention to the autonomous bit, which relies on a Raspberry Pi 4 running ROS and a camera with a wide-angle lens. The Pi uses machine vision algorithms to find an “AprilTag” fiducial marker in the scene, which gives the flight controller the ekranoplan’s orientation relative to the tag. [Nick] tested tag tracking using an electric longboard, and the model ekranoplan did an admirable job of not only managing the ground-effect, but also staying on target right behind him. And hats off to [Nick] for keeping all the balls in the air and not breaking his neck in the process.
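The write-up doesn’t go into how a tag detection becomes a steering input, so here’s a minimal sketch of one common approach, not [Nick]’s actual code. A real AprilTag detector returns the tag’s full pose; this example uses only the tag’s horizontal pixel position, converts it to a bearing angle with an ideal pinhole-camera model, and feeds that into a clamped proportional controller. The function names `bearing_to_tag` and `steering_command`, the gain, and the field-of-view numbers are all hypothetical, and a wide-angle lens like the one on this build would need its image undistorted before the pinhole math holds.

```python
import math


def bearing_to_tag(tag_center_x: float, image_width: int, hfov_deg: float) -> float:
    """Horizontal angle (radians) from the camera's optical axis to the tag center.

    Hypothetical helper. Assumes an ideal, undistorted pinhole camera whose
    principal point sits at the image center. Positive means the tag is
    to the right of center.
    """
    # Focal length in pixels, derived from the horizontal field of view.
    fx = (image_width / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)
    cx = image_width / 2.0  # assumed principal point (image center)
    return math.atan((tag_center_x - cx) / fx)


def steering_command(bearing_rad: float, kp: float = 1.5, limit: float = 1.0) -> float:
    """Proportional steering command, clamped to [-limit, limit].

    kp and limit are made-up tuning values for illustration only.
    """
    cmd = kp * bearing_rad
    return max(-limit, min(limit, cmd))


if __name__ == "__main__":
    # Hypothetical detection: tag centered at x=480 in a 640-px-wide frame, 120-degree HFOV.
    b = bearing_to_tag(480.0, 640, 120.0)
    print(f"bearing: {math.degrees(b):.1f} deg, command: {steering_command(b):.2f}")
```

In a ROS setup, something like this would typically sit in a node that subscribes to tag detections and publishes a steering setpoint for the flight controller; a real follower would also want a derivative term and some handling for frames where the tag drops out of view.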

We’re looking forward to seeing [Nick]’s work here end up in [ThinkFlight]’s big ekranoplan build. Ground-effect vehicles like these are undeniably cool, and they seem to have the potential to solve some interesting transportation problems.
