Every few years or so, a development in computing results in a sea change and a need for specialized workers to take advantage of the new technology. Whether that’s COBOL in the 60s and 70s, HTML in the 90s, or SQL in the past decade or so, there’s always something new to learn in the computing world. The introduction of graphics processing units (GPUs) for general-purpose computing is perhaps the most important recent development for computing, and if you want to develop some new Python skills to take advantage of the modern technology take a look at this introduction to CUDA which allows developers to use Nvidia GPUs for general-purpose computing.

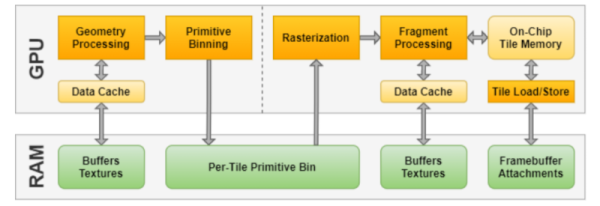

Of course CUDA is a proprietary platform and requires one of Nvidia’s supported graphics cards to run, but assuming that barrier to entry is met it’s not too much more effort to use it for non-graphics tasks. The guide takes a closer look at the open-source library PyTorch which allows a Python developer to quickly get up-to-speed with the features of CUDA that make it so appealing to researchers and developers in artificial intelligence, machine learning, big data, and other frontiers in computer science. The guide describes how threads are created, how they travel along within the GPU and work together with other threads, how memory can be managed both on the CPU and GPU, creating CUDA kernels, and managing everything else involved largely through the lens of Python.

Getting started with something like this is almost a requirement to stay relevant in the fast-paced realm of computer science, as machine learning has taken center stage with almost everything related to computers these days. It’s worth noting that strictly speaking, an Nvidia GPU is not required for GPU programming like this; AMD has a GPU computing platform called ROCm but despite it being open-source is still behind Nvidia in adoption rates and arguably in performance as well. Some other learning tools for GPU programming we’ve seen in the past include this puzzle-based tool which illustrates some of the specific problems GPUs excel at.