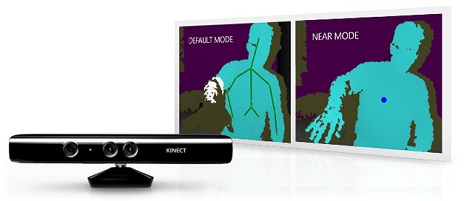

[Steven] needed a project for the Computer Vision course he was taking, so he decided to try building a portable 3D camera. His goal was a Kinect-like 3D scanner, though his solution is better suited to highly detailed scans of still scenes, whereas the Kinect performs shallower, less detailed scans of dynamic scenes.

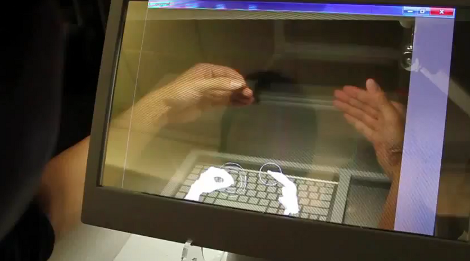

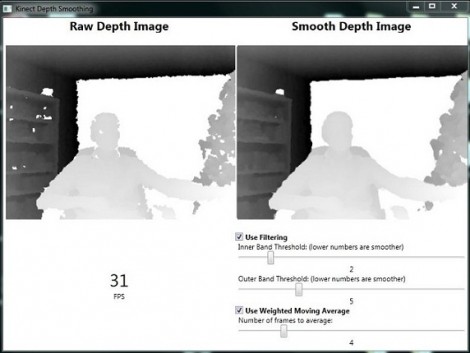

The device uses a TI DLP Pico projector to display the structured light patterns, while a cheap VGA camera takes snapshots of the scene being captured. The images are fed into a BeagleBoard, where OpenCV is used to build point clouds of the scanned objects. Those point clouds are then handed off to MeshLab, where they can be combined and tweaked to create the final 3D model.
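For readers new to structured light, here is a minimal sketch of one common pattern scheme: binary-reflected Gray code stripes. The write-up does not say which patterns [Steven] actually projects, so this scheme is an assumption, and the function names (`gray_encode`, `gray_decode`, `make_patterns`, `decode_column`) are hypothetical, not taken from his code. Each projector column gets a unique code spread across a sequence of striped frames; a camera pixel that records the lit/dark sequence can be decoded back to the projector column it is looking at, and that pixel-to-column correspondence is what the triangulation step needs to compute depth.

```python
# Minimal sketch of Gray-code structured light encoding/decoding.
# ASSUMPTION: this pattern scheme and these helper names are illustrative only;
# they are not confirmed to match the project's own code.


def gray_encode(n: int) -> int:
    """Binary-reflected Gray code of n (adjacent values differ in one bit)."""
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    """Invert gray_encode by XOR-ing together every right shift of g."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def make_patterns(width: int, bits: int) -> list[list[int]]:
    """Return one stripe pattern per bit plane, most significant bit first.

    patterns[b][x] is 1 if projector column x is lit in frame b, else 0.
    """
    return [
        [(gray_encode(x) >> (bits - 1 - b)) & 1 for x in range(width)]
        for b in range(bits)
    ]


def decode_column(observed_bits: list[int]) -> int:
    """Map the lit/dark sequence seen by one camera pixel to a projector column."""
    g = 0
    for bit in observed_bits:  # rebuild the Gray code, MSB first
        g = (g << 1) | bit
    return gray_decode(g)


if __name__ == "__main__":
    width, bits = 480, 9  # 480 columns need 9 bit planes, since 2**9 = 512
    patterns = make_patterns(width, bits)
    # Simulate a camera pixel that sees projector column 300 in every frame.
    seen = [patterns[b][300] for b in range(bits)]
    print(decode_column(seen))
```

For a projector 480 columns wide, as with an HVGA panel used in landscape orientation, 9 frames are enough because 2^9 = 512 ≥ 480. Gray code is a popular choice because neighbouring columns differ in only one bit, so a pixel that misreads the stripe at a boundary lands on an adjacent column rather than jumping far away. A real pipeline would also have to threshold noisy camera intensities into clean 0/1 bits, which this sketch skips.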

As [Steven] points out, the resulting scans are impressive considering that his rig is completely portable and uses only an HVGA projector and a VGA camera. He says that someone using higher-resolution equipment should be able to generate far more detailed 3D images.

Be sure to check out his page for more details on the project, as well as links to the code he uses to put these images together.