If you watch the original Star Trek, you’ll notice that the computers on board the Enterprise don’t look much like ours (unless you count the little 3.5-inch floppies that looked pretty close to the real thing). Then again, the Enterprise didn’t need keyboards and screens, since its computers did a pretty good job of listening and speaking to humans.

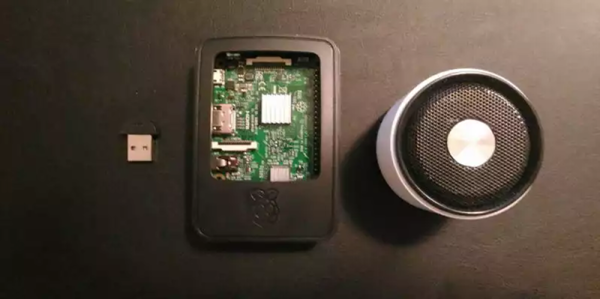

We aren’t quite at the point where you can just ask the computer some fuzzy, open-ended question like Captain Kirk did, but we do have things like Echo, Siri, and Google Now that do a fair job of listening to you and replying. In fact, Google provides an API that can do both speech recognition and speech generation. [Giulio] used some common Python libraries to add speech I/O to a Raspberry Pi.