For most of human history, the way to get custom shapes and colors onto one’s retinas was to draw them on a cave wall, or a piece of parchment, or on paper. Later on, we invented electronic displays and used them for everything from televisions to computers, even toying with displays that gave the illusion of a 3D shape existing in front of us. Yet what if we could just skip this surface and draw directly onto our retinas?

Admittedly, the thought of aiming lasers directly at the layer of cells at the back of our eyeballs (the delicate organs which allow us to see) likely does not provoke the same response as the thought of sitting in front of a 4K, 27″ gaming display to look at the same content. Yet effectively we’d have the same photons painting the same image on our retinas. And what if it could be an 8K display, cinema-sized? Or maybe a HUD overlay instead, like in video games?

In many ways, this concept of virtual retinal displays, as they are called, is almost too much like science fiction, and yet it’s been the subject of decades of research, with increasingly sophisticated technologies bringing it closer to an everyday reality. Will we be ditching our displays and TVs for this technology any time soon?

A Complex Solution to a Simple Question

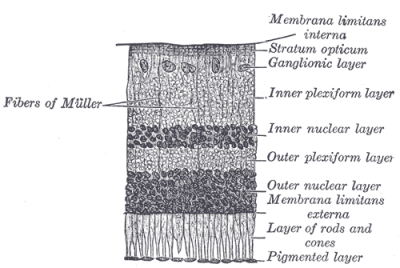

The Mark I human eye is a marvel produced through evolutionary processes over millions of years. Although missing a few bug fixes that were included in the cephalopod eye, it nevertheless packs a lot of advanced optics, a high-density array of photoreceptors, and super-efficient signal processing hardware. Before a single signal travels from the optic nerve to the brain’s visual cortex, the neural network inside the eye will have processed the incoming visual data to leave just the important bits that the visual cortex needs.

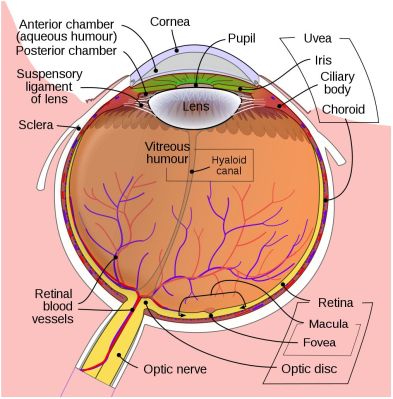

The basic function of the eye is to use its optics to keep the image of what is being looked at in focus. For this it uses a ring of smooth muscle called the ciliary muscle to change the shape of the lens, allowing the eye to change its focal distance, with the iris controlling the amount of light that enters the eye. This enables the eye to focus the incoming image onto the retina so that the area with the most photoreceptors (the fovea centralis) is used for the most important thing in the scene (the focus), with the rest of the retina used for our peripheral vision.
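
To get a feel for how much the lens has to change, here is a minimal sketch using the thin-lens equation (1/f = 1/d_object + 1/d_image). It treats the whole eye as a single thin lens in air with the retina a fixed 17 mm behind it. That 17 mm figure and the single-lens model are simplifying assumptions for illustration, not numbers from this article.

```python
import math

# Assumption: a "reduced eye" with the retina 17 mm behind a single thin lens.
IMAGE_DISTANCE_MM = 17.0

def required_focal_length_mm(object_distance_mm, image_distance_mm=IMAGE_DISTANCE_MM):
    """Focal length the lens needs so the object is imaged sharply on the retina."""
    # Thin-lens equation: 1/f = 1/d_object + 1/d_image
    return 1.0 / (1.0 / object_distance_mm + 1.0 / image_distance_mm)

def diopters(focal_length_mm):
    """Optical power in diopters (1/m) for a focal length given in millimeters."""
    return 1000.0 / focal_length_mm

far_power = diopters(required_focal_length_mm(math.inf))  # object very far away
near_power = diopters(required_focal_length_mm(250.0))    # object at 25 cm
accommodation = near_power - far_power

print(f"far:  {far_power:.1f} D")
print(f"near: {near_power:.1f} D")
print(f"accommodation needed: {accommodation:.1f} D")
```

In this simplified model, refocusing from the horizon to a book at 25 cm takes about 4 diopters of extra optical power, and any projected image has to cope with the lens being anywhere in that range.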

The simple question when it comes to projecting an image onto the retina thus becomes: how to do this in a way that plays nicely with the existing optics and focusing algorithms of the eye?

Giving the Virtual a Real Place

In the naive and simplified model of virtual retinal display technology, three lasers (red, green and blue, for a full-color image) scan across the retina to allow the subject to perceive an image as if its photons came from a real-life object. However, as noted in the previous section, this is not what we’re working with in reality. We cannot directly scan across the retina, as the eye’s lens will refract the light, and that refraction changes as the eye adjusts its focal length.

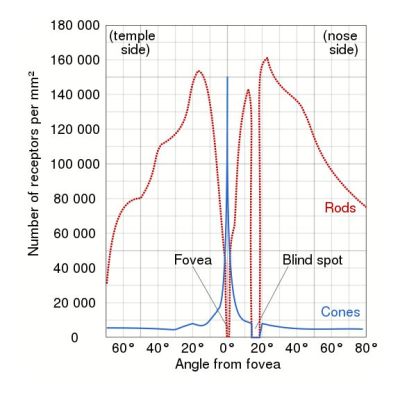

Moreover, the only part of the retina that we’re interested in is the fovea, as it is the only section of the retina where there is a dense cluster of cones (the photoreceptors capable of sensing the frequency of light, i.e. color). The rest of the retina is only used for peripheral vision, with mostly (black and white sensing) rods and very few cones. To get clearly identifiable images projected onto a retina, we have to target the 1.5 mm wide fovea, with the 0.35 mm diameter foveola providing the best visual acuity.
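
To put those sizes in perspective, the following sketch converts a width on the retina into the visual angle it covers. The 17 mm nodal-point-to-retina distance is an assumed textbook-style value for a simplified eye model, not a figure from this article.

```python
import math

# Assumption: distance from the eye's nodal point to the retina, in mm.
NODAL_TO_RETINA_MM = 17.0

def retinal_size_to_visual_angle_deg(size_mm, nodal_distance_mm=NODAL_TO_RETINA_MM):
    """Visual angle (degrees) covered by a patch of retina of the given width."""
    return math.degrees(2 * math.atan(size_mm / (2 * nodal_distance_mm)))

fovea_deg = retinal_size_to_visual_angle_deg(1.5)     # fovea width from the text
foveola_deg = retinal_size_to_visual_angle_deg(0.35)  # foveola diameter from the text

print(f"fovea:   {fovea_deg:.2f} degrees")
print(f"foveola: {foveola_deg:.2f} degrees")
```

Under that assumption the fovea covers roughly 5 degrees of the visual field and the foveola only a little over 1 degree, which is why the targeting has to be so precise.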

Hitting this part of the retina requires either that the subject consciously focuses on the projected image in order to perceive it clearly, or that the system adjusts for the focal distance of the eye at any given time. After all, to the eye all photons are assumed to come from a real-life object, with a specific location and distance. Any issues with this process can result in eyestrain, headaches and worse, as we have seen with tangentially related technologies such as 3D movies in cinemas as well as virtual reality systems.

Smart Glasses: Keeping Things Traditional

Most people are probably aware of head-mounted displays, also called ‘smart glasses’. What these do is create a similar effect to what can be accomplished with virtual retinal display technology, in that they display images in front of the subject’s eyes. This is used for applications like augmented (mixed) reality, where information and imagery can be superimposed on a scene.

Google made a bit of a splash a few years back with their Google Glass smart glasses, which use special, half-silvered mirrors to guide the projected image into the subject’s eyes. As Google did with the later Enterprise versions of Google Glass, Microsoft is targeting their HoloLens technology at the professional and education markets, using combiner lenses to project the image on the tinted visor, similarly to how head-up displays (HUDs) in airplanes work.

Magic Leap’s Magic Leap One uses waveguides that allow an image to be displayed in front of the eye, on different focal planes, akin to the technology used in third generation HUDs. Compared to the more futuristic looking HoloLens, these look more like welding goggles. Both the HoloLens and Magic Leap One are capable of full AR, whereas the Google Glass lends itself more as a basic HUD.

Although smart glasses have their uses, they’re definitely not very stealthy, nor are most of them suitable for outdoor use, especially in sunny or hot summer weather. It would be great if one could skip the cumbersome head strap and goggles or visor. This is where virtual retinal displays (VRDs) come into play.

Painting with Lasers and Tiny Mirrors

Naturally, the very first question that may come to one’s mind when hearing about VRDs is why it’s suddenly okay to shine not one but three lasers into your eyes. After all, we have been told to never, not even once, point even the equivalent of a low-powered laser pointer at a person, let alone straight at their eyes. Some may remember the 2014 incident at the Burning Man festival where festival goers practically destroyed the sight of a staff member with handheld lasers.

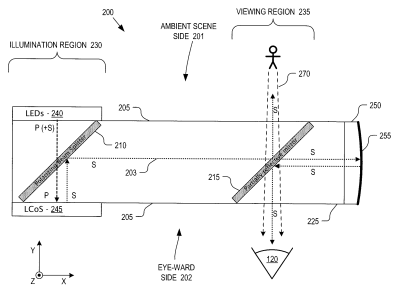

The answer to these concerns is that very low-powered lasers are used: enough to draw the images, but not enough to do more than cause the usual wear and tear from using one’s eyes to perceive the world around us. As the light is projected straight onto the retina, there is no image that can become washed out in bright sunlight. Companies like Bosch have prototypes of VRD glasses, with Bosch recently showing off its BML500P Bosch Smartglasses Light Drive solution. It claims an optical output power of <15 µW.
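
As a rough sense of scale, the sketch below compares the claimed <15 µW with a 1 mW laser pointer. The 1 mW reference (the usual upper bound for a Class 2 visible laser) is an assumption brought in for comparison. This is plain arithmetic and not a safety analysis, which would also have to consider wavelength, exposure time and beam geometry.

```python
# Claimed upper bound from the text, in microwatts.
BOSCH_MAX_OUTPUT_UW = 15.0
# Assumption: a 1 mW laser pointer as the reference point (1 mW = 1000 µW).
REFERENCE_POINTER_UW = 1000.0

def times_weaker(reference_uw, device_uw):
    """How many times lower the device power is than the reference power."""
    return reference_uw / device_uw

ratio = times_weaker(REFERENCE_POINTER_UW, BOSCH_MAX_OUTPUT_UW)
print(f"At least {ratio:.0f}x below a 1 mW pointer")
```

Because 15 µW is stated as an upper bound, the ratio of roughly 67 is a lower bound on how much weaker the device is than such a pointer.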

Bosch’s solution uses RGB lasers with a MEMS mirror to direct the light into the subject’s pupil, and onto the retina. However, one big disadvantage of such a VRD solution is that it cannot just be picked up and used like one can with the previously mentioned smart glasses. As discussed earlier, VRDs need to precisely target the fovea, meaning that a VRD has to be adjusted to each individual user to work or else one will simply see nothing as the laser misses the target.

Much like the Google Glass solution, Bosch’s BML500P is mostly useful for HUD purposes, but over time this solution could be scaled up, with a higher resolution than the BML500P’s 150 line pairs and in a stereo version.

The Future is Bright

The cost of entry in the AR and smart glasses market at this point is still very steep. While Google Glass Enterprise 2 will set you back a measly $999 or so, HoloLens 2 costs $3,500 (and up), leading some to improvise their own solution using beam splitters dug out of a bargain bin at a local optics shop. Here too the risk of damaging one’s eyes should not be underestimated. Sending the full brightness of a small (pico)projector essentially straight into one’s eye can cause permanent damage and blindness.

There are also AR approaches that focus on specific applications, such as tabletop gaming with Tilt Five’s solution. Taken together, it appears that AR — whether using the beam splitter, projection or VRD approach — still is in a nascent phase. Much like virtual reality (VR) a few years ago, it will take more research and development to come up with something that checks all the boxes for being affordable, robust and reliable.

That said, there definitely is a lot of potential here and I, for one, am looking forward to seeing what comes out of this over the coming years.

I really hate it anytime some display maker uses phrases like “painting image directly on the retina”. They all boil down to a display emitting light and that light being focused by the lens of the eye, just like every other display.

It’s just not possible to create a virtual image from a point light source. You can’t scan a laser across the retina unless you physically move the location of the laser. The Bosch system sounds like a DLP display. It sounds very much like the Avegant Glyph, a display touted with much fanfare and “retinal imaging”, sending the image “directly onto the retina”. Which of course was just a laser shining on a DLP. Which ends up being very small and which you have to look at from a very narrow angle or see nothing.

I really hate it when a commenter spouts nonsense without doing even the most basic reading on what they purport to be an expert on.

The Bosch system doesn’t need to move the laser, and doesn’t need anything like a DLP: It scans the collimated beam across a holographic reflector element embedded in the eyeglass lens. That reflector functions like a curved mirror, presenting to the eye a virtual image located some comfortable distance in front of the lens.

It’s still a HUD-like display, and still relies on the device’s optical power to be at least comparable to the ambient light to be visible.

Correct, and probably based on MicroVision’s (MVIS) technology and IP, similar to what Microsoft is doing with HoloLens.

A mirror array like DLP or galvanometer pairs (I’m sure there are other options too) does effectively move the light source, so you can use them to scan a laser point across whatever you want to (which could be directly onto a retina). So it can be very accurately called painting an image on the retina – it’s not a full image/object reflection being brought into focus by the eye. And in this case yes, it still passes through the lens of the eye and this must be taken into account, but you could theoretically continue to ‘paint’ in focus images no matter what the eye is focused on. So much as you hate it, this is pretty accurate marketing speak – better than when applied to many other products (at least when applied to projections straight into the eye – if you are seeing it projected onto a screen or on normal displays then I agree with you).

As for the limitations of such technology currently I’d describe them as significant. But meaningful leaps could well be not that far into the future.

I’ll stick with ‘half’ silvered lenses and traditional screens myself at least for the foreseeable future. That is a safe, reasonably priced and ‘easy’ route to AR/HUD type things and having a fixed focal length to the projection isn’t the end of the world.

No, a DLP does not do any sort of “move the light source”. It just gates individual pixels on or off. The DLP array is a 1:1 map of the output pixels (except for the exotic Fourier-based configurations).

Galvanometers, yes, they steer the beam and, yes, can correctly be said to be “painting the retina”.

And no, you can’t “continue to ‘paint’ in focus images no matter what the eye is focused on.” The lens of the eye is still in the optical path and still does the focusing on the retina. So the manufacturers of such systems must take into account myopia, presbyopia, etc., and even astigmatism (which AFAIK none do, not even Focals by North, but I’d be delighted to learn otherwise because then I’d be lining up for them.)

Indeed a DLP is an array of steerable mirrors in a grid of pixels, but it can be used like galvos if you want to. All a galvo is is a mirror that moves! I’ve seen tests done with DLP chips that allow this kind of use case; it’s just simple optics and choice of setup.

And I never meant to imply current devices can paint in focus images regardless of the eye’s position and focus – just that it could theoretically be done if taking into account the eye’s current state – something the half silvered lens creating a focal length x systems can never do, the eye actively has to focus on the image plane for them.

Just for clarity that last sentence should be ‘half silvered lens REFLECTING A SCREEN creating …’

And I’m not suggesting a DLP implementation is a 100% true analog galvo either – it’s more like a good approximation – the idea behind the tests I saw was that DLP chips are damn nearly indestructible compared to traditional galvos – able to operate under more adverse conditions etc. But it’s still possible to use DLP chips as a core component for steering light beams, which was my point. Though I admit I could have been clearer, I thought it didn’t need further explanation as to me it’s a known technique.

A DLP isn’t anything like a galvanometer except in the grossest sense of being a pivoting mirror. A galvo steers a single large (few-millimeter scale) mirror very precisely over a continuous range of angles, usually (though not always) under servo control. A DLP is an array of many small (few-micron) mirrors that can have one of exactly two angles. It’s on or off, though PWM can give you an illusion of gray scale. But the mirrors can’t do arbitrary beam steering like a galvo.
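
To make the “on or off, with PWM for gray scale” point concrete, here is a minimal sketch of binary-weighted pulse-width modulation for a single two-state mirror. The bit-plane scheme and the function names are illustrative assumptions, not a description of any particular DLP controller.

```python
def bit_plane_schedule(level, bits=8):
    """Return (mirror_on, time_slots) pairs for one frame, most significant bit first.

    The mirror only ever has two states; each bit of the gray level decides
    whether it is 'on' for a time slice proportional to that bit's weight.
    """
    if not 0 <= level < 2 ** bits:
        raise ValueError("level out of range for the given bit depth")
    schedule = []
    for bit in reversed(range(bits)):
        mirror_on = bool((level >> bit) & 1)
        schedule.append((mirror_on, 2 ** bit))
    return schedule

def perceived_brightness(schedule):
    """Fraction of the frame time the mirror spends in the 'on' state."""
    total_slots = sum(slots for _, slots in schedule)
    on_slots = sum(slots for mirror_on, slots in schedule if mirror_on)
    return on_slots / total_slots

half_gray = perceived_brightness(bit_plane_schedule(128))
print(f"level 128 -> mirror on for {half_gray:.1%} of the frame")
```

The gray level comes purely from timing: the mirror angle never takes an intermediate value, which is the difference from a galvo’s continuous range of angles.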

You may have got the impression they are capable of beam steering from reports of the special case, where a DLP can be used as an array of switchable elements in Fourier optics, and can yield a set of discrete beam angles with the addition of the appropriate Fourier optical elements. They have their uses (e.g., tunable spatial filters), but are pretty awful at simple beam steering: poor contrast, high sidelobes & scatter, a finite (and small) number of discrete angles available, variation of angle with wavelength, etc.

You are quite correct Paul. The DLP test case I’ve seen used multiple DLP chips and optics to create a pretty impressive beam steer. Pretty sure it was using PWM and some rather clever optics as well as multiple chips in the path (I think the first chip’s off position fed one secondary DLP while its on position fed another). But it works pretty well, though obviously it does not pass as much of the source light – tiny mirrors with only a percentage in use for each path.

It is discrete points, but if you have enough points then it can have as much control as a servo-driven galvo – how many steps does your motor have? The only Galvo options that don’t have discrete steps are the ones driven in analog fashion and those have other problems with drift, and in practice they will still be stepped based on how precisely you can modulate the input (steps start getting mighty small if you put the effort in, but they still exist).

The only Galvo options that don’t have discrete steps are the ones driven in analog fashion and those have other problems with drift …

I built a galvanometer-based optical system that adjusted and locked the wavelength of a tunable laser with a precision, accuracy and stability (drift) of a few kilohertz (around one part in 10^11), in 1986. All analog, except for the measurement instrument, which ran on a Commodore PET.

Analog is capable of astonishing precision if you do it right, and digital is often overrated.

http://doc-ok.org/?p=1386

Oliver Kreylos has an excellent write up on how painting an image this way on the retina is not possible with his doc-ok “Lasers Are Not Magic” post. You have to have the laser scan out onto the equivalent to a display that the eye then sees.

Kreylos’ post actually does describe exactly how to paint an image on the retina with a scanning laser: the virtual retinal display, exactly what I describe above. What Kreylos does NOT describe is the significant challenge of getting a large field of view and a large exit pupil at the same time from a small optical system, which is similar to the concept of étendue.

And unless the exit pupil is expanded you can end up with a system that does a very good job of imaging any “floaters” in your eye on to your retina. As I look around without any such display I am vaguely aware of very defocused objects floating around. Put on a retinal scanning display and I see well focused black objects floating around. These displays are not usable for me.

“Will we be ditching our displays and TVs for this technology any time soon?”

Skip step two, and go straight for the optic nerve. The ultimate V/A/R.

I don’t know if injecting into the optic nerve would be the right place, even if that is technically feasible compared to more convenient locations. As [Maya] mentions, there’s a heck of a lot of preprocessing that goes on right in the Mk. 1 eyeball retina before getting data fired down that fat pipe to the back of the head. The visual cortex there expects to receive that preprocessed compressed and formatted data — a bespoke format it learned over years when it was still plastic. Any synthetic visual information injected in that path would need to replicate that weird, organic preprocessing that’s likely unique to each Mk 1 eyeball.

It is unique. Every person has a different distribution of rods and cones.

So the question of “does red look red to you” has an answer: no. Everyone has a slightly different version of “red” because the color balance of their eyes varies. We’ve just learned to call it the same color.

Oddly, the eventual implants will synchronize our color understanding as our phones have synchronized our clocks…

I don’t like how this website’s background looks like fish smells

“Everyone has a slightly different version of “red” because the color balance of their eyes varies. We’ve just learned to call it the same color.”

I’ve debated with someone over this. Are you sure? How would one explain color blindness, then, as we classified that?

If you have different color filters (2 color 3d glasses work) try wearing them for a while, taking them off and looking at things with one eye only then the other to see the difference between perceiving the world with different color saturation levels.

How can you test that hypothesis? Can you test that? If yes I’d be super curious to know how and if it had been done before. Thank you!

Better to lay a stimulation grid on the retina itself. See what Paul says.

The stimulation grid could be used for calibrating the implant on the optic nerve.

All this seems to be happily neglecting the fact that the human eye rotates, so for the laser to project from a single point means that you can only see the display when you’re looking right at it, and therefore the apparent display size must be very small. As soon as your eye rotates to look at a different part of the projected image, the light will be blocked by the aperture of your iris, which also changes in size depending on the brightness.

The only way for it to work is to project the image first onto a larger screen or mirror in front of the eye, so the light can enter the eye no matter where you look – but that’s identical to normal VR goggles.

You don’t need a second screen, just the virtual image. As for accommodating eye rotation, the optical system must be engineered to place its exit pupil at the location of the eyeball. It’s not hard: every decent pair of binoculars, or microscope, or telescope does it, and the good ones do it very well, and can accommodate a large amount of eye rotation.

But, yes, you do need some kind of optical surface filling the visible area of the apparent screen — that’s the role of the holographic element in the eyeglasses.

>a large amount of eye rotation

I wouldn’t call staring into binoculars or a microscope “a large amount”. It’s a relatively narrow tube. For example, if I set my camera’s focal length to match my eye so I can look through the eyepiece and overlay the image on what I see with my other eye, the viewfinder covers an area roughly equivalent to a 31″ screen 6 ft away – or a visual angle of approximately 24 degrees.
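
The 24 degree figure can be checked with basic trigonometry. This sketch treats the quoted 31″ as the extent of the screen along the direction being measured, which is an assumption, since the comment does not say whether it is a diagonal.

```python
import math

def visual_angle_deg(size, distance):
    """Visual angle (degrees) of an object of a given size seen face-on at a distance.

    Size and distance must be in the same unit.
    """
    return math.degrees(2 * math.atan(size / (2 * distance)))

# 31 inch screen viewed from 6 ft (72 inches)
angle = visual_angle_deg(31.0, 6 * 12.0)
print(f"{angle:.1f} degrees")
```

The result lands a little above 24 degrees, consistent with the estimate in the comment.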

It’s difficult to make an “optical surface” that covers more than 20-30 degrees without covering your eyes with goggles. Sure, you can bounce the laser off of the inside of a pair of glasses, but that’s not really what they mean in the article when they say “painting an image on your retina” because you’re projecting the image onto a “virtual” screen that covers most of your visual field and the eye then picks up whatever part of it you want to see.

This is opposed to the naive idea where you have a laser beam pointing into your eye and drawing only the portion of the image that you’re looking at. The reason for drawing exactly on the fovea is that you’d need to be projecting a 100+ gigapixel image if you want to match the resolving power of the human eye, so focusing on the fovea alone lets you get away with less bandwidth. However, it would look weird anyhow since you wouldn’t see the display in your peripheral vision but only a dinner-plate sized object in the middle hovering a few feet ahead.

100+ Megapixel, actually, but you get the point. If you’re placed in a projection bubble where you can turn your eyes freely but not your head, you can in theory see about 150 Mpix worth of detail and a little bit more if you focus both eyes on the same bit.

Covering only the fovea lets you get away with a surprisingly small number of pixels, probably no more than 1 Mpix because they’re all concentrated on such a small area. That’s why it’s so tempting – in theory you could make virtual reality goggles that look indistinguishable from reality and still use little enough bandwidth that you can stream the video over easily.
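
A back-of-the-envelope version of this pixel budget is sketched below. Every number in it is an assumption chosen for illustration: 60 pixels per degree (about one pixel per arcminute), a 5 degree foveal patch, and a 200 by 150 degree field for eyes that can rotate freely. It also uses a flat angular grid, which ignores the geometry of projecting onto a sphere.

```python
def pixel_count(h_deg, v_deg, pixels_per_degree):
    """Pixels needed to cover a field of view at a uniform angular resolution."""
    return round(h_deg * pixels_per_degree) * round(v_deg * pixels_per_degree)

PIXELS_PER_DEGREE = 60  # assumption: roughly one pixel per arcminute

fovea_pixels = pixel_count(5, 5, PIXELS_PER_DEGREE)          # foveal patch only
full_field_pixels = pixel_count(200, 150, PIXELS_PER_DEGREE)  # whole field, full detail

print(f"fovea only: {fovea_pixels / 1e6:.2f} Mpix")
print(f"full field: {full_field_pixels / 1e6:.0f} Mpix")
print(f"ratio:      {full_field_pixels // fovea_pixels}x")
```

With these assumptions the foveal patch stays well under 1 Mpix while the full field needs on the order of 100 Mpix, the same three orders of magnitude gap the comments describe.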

I know pupil tracking devices exist, wouldn’t they solve the 100+ megapixel problem? You could send only large scale changes of light intensity to most of the eye and high resolution to the fovea.

So, how do you propose keeping up with the saccades?

Seems a lot easier to just provide a virtual image. It’s not like the optics are going to be any simpler or smaller trying to keep up with the motion of the fovea.

The trick is how to provide the high resolution across the entire virtual image all the time, and the power to render it all.

Eye tracking and lower resolution rendering is already done with regular VR goggles, but that’s done to reduce the workload on the CPU – the display is still full-res all the way. It’s also laggy: they have to predict where you’re going to look to keep up, and it doesn’t always work.

Hi Paul,

I would really love to discuss AR technologies some more, along with the considerations for the eyes and the brain with retinal projection. We are a startup developing AR glasses specifically to help people affected by age-related macular degeneration see better.

My email is michael.perreault@eyful.com.

Truly,

Michael Perreault

What was that warning.. Do not look into laser with remaining eye…

Can I get those Magic Leap One goggles with prescription impact-resistant, UV-filtering lenses? If so, they could make good daily drivers.

I have the Avegant Glyph headset. While the picture is decent, what bugs me is that the field of view doesn’t extend past your forward peripheral vision, so it’s kinda like sitting in the back of a movie theatre. It’s cool for playing PlayStation or watching a movie, but the image is boxed in by surrounding blackness, which kinda prevents it from achieving any of the “immersive” qualities that AR or decent VR headsets possess.

On to ostracism and Paul’s comment: if you do a little digging you can find projects on the web where they’ve interfaced video feeds from a camera to a blind person’s optic nerve. It restores sight, not to the original quality, but it gives sight back to blind people with some limitations. In one article I read, half of the people did not like it and chose to continue living blind, because after so long without vision they had grown used to being blind and felt the limited restored sight from the camera was more disruptive than helpful. Others did manage to get used to it and, I guess, are now living with restored grainy webcam vision after being blinded.

Another article I read, about a different group of researchers, found another surprising aspect. Those who had gone blind were able to adopt the visual implant signals and use the video feed. However, people who were born profoundly blind were unable to get the signal, or they found what they did get from the video feed incredibly unpleasant and didn’t recognize anything visually. The researchers came to conclude that if the visual processing regions of the brain aren’t developed when a person is born, those neural pathways kinda lie unused and untapped in the brain. When the video feed is connected, the brain either shuts it out totally or has an adverse reaction to the stimuli, because the mind has developed through the person’s life without assigning meaning to things like shapes, colors, light, shadow and depth. The new sense simply can’t be interpreted by the brain because the neural links never developed. Kinda like feeding an HDMI signal into a DB25 plug: there’s no way it’ll process the signal without understanding the signal lines and clock, and with no drivers or processors.

Finally, I saw another video, it may have been on Vice’s Motherboard channel, where they figured out a way around this problem. It was more of an ethical debate on whether the tech should remain only for restoring vision, or whether it could enhance vision, like giving someone IR or UV vision, or if possible even 360-degree vision in the future. I’m sure some special forces vets with security clearances are gonna end up being the guinea pigs for this type of tech in the not too distant future. Anyways, I guess the point I was trying to illustrate is that we’re just dipping our toes into feeding images straight to the eyes or straight to the brain, and we still have a long way to go until the tech is mature, but we are on our way.

https://www.technologyreview.com/2020/02/06/844908/a-new-implant-for-blind-people-jacks-directly-into-the-brain/

The thing is, the brain has to understand what the “eye” is seeing.

If the optic nerve isn’t damaged, and the signals a healthy retina is receiving could be mapped/decoded, then instead of using the damaged retina itself, use the optic nerve. I did see a TED talk about this once. I wish I could find the URL to watch it again, the only thing I remember is a picture of a baby and a computer decoded optic nerve impulse of the same picture.

Sounds like Sheila Nirenberg’s talk, from 2011. It’s been a long time. I wonder what’s happened since then?

“…optical output power of <15 µW…” Can’t wait for a cheap Chinese knock-off.

You are correct. Thank you. Here is the URL.

https://youtu.be/qQcrXOhdLvw

IIRC, in Snow Crash, many characters (including Hiro Protagonist the … well … hero protagonist) used laser-projected wearable displays. But the laser wasn’t projecting onto the retina. The user wore a pair of bug-eyed goggles (circular dome shape) over each eye and a small, laser projector, somewhere NOT on their face, fired onto the bug-eyed lenses. Since the eyes are behind those domes, the entire field of view was filled by imagery. And since the goggles themselves were very lightweight, they didn’t tire of wearing them.

Naturally, if you were to do something like that in The Real World, you’d need some kind of registration markings around the rims of the lenses such that the projector, with some kind of camera facing you, could track precise head movements and move the projected image AND the viewpoint as needed to accommodate which way you were looking.

In the book, IIRC, Hiro rode a motorcycle, with the projector on the bike, facing him, while wearing the glasses and operating in both The Real World and the Metaverse, simultaneously.

Projecting an image onto a half of a pingpong ball over your eye doesn’t allow you to focus the image.

The Guyton eye chart is an attachment to an ophthalmic slit lamp, first described in 1983. It’s used, very uncommonly, for measuring retinal acuity in patients with cataracts, to verify retinal function before removing the opacity. There was also a holographic version that could get past denser cataracts. https://www.ncbi.nlm.nih.gov/pubmed/6664676