Stop motion animation is notoriously difficult to pull off well, in large part because it’s a mind-numbingly slow process. Each frame in the final video is a separate photograph, and for each one of those, the characters and props need to be moved the appropriate amount so that the final result looks smooth. You don’t even want to know how long Ben Wyatt spent working on Requiem for a Tuesday, though to be fair, it might still get done before the next Avatar.

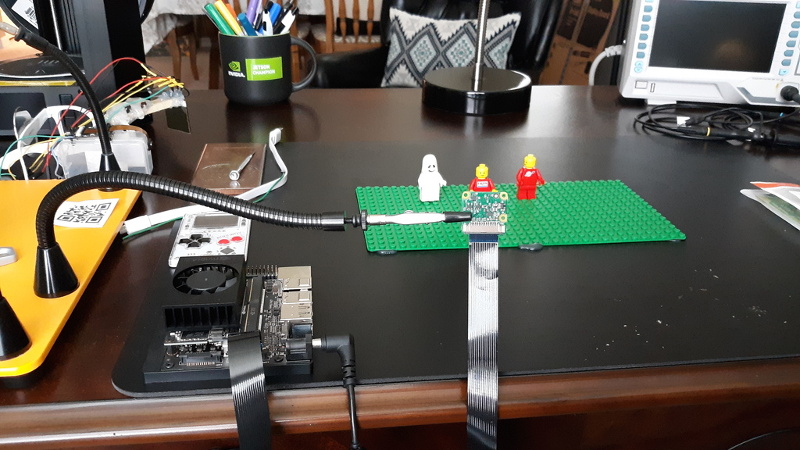

But [Nick Bild] thinks his latest project might be able to improve on the classic technique with a dash of artificial intelligence provided by a Jetson Xavier NX. Basically, the Jetson watches the live feed from the camera, and using a hand pose detection model, waits until there’s no human hand in the frame. Once the coast is clear, it takes a shot and then goes back to waiting for the next hands-free opportunity. With the photographs being taken automatically, you’re free to focus on getting your characters moving around in a convincing way.
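The capture logic described above can be sketched as a small loop. This is a minimal sketch, not [Nick]’s actual code. It assumes some hand pose detector has already reduced each live frame to a True/False “hand visible” flag, and the function name `frames_to_capture` is hypothetical.

```python
from typing import Iterable, List


def frames_to_capture(hand_visible: Iterable[bool]) -> List[int]:
    """Return the indices of the frames to photograph.

    Each item is assumed to be the output of a (hypothetical) hand
    detector for one live frame: True if a hand is in view.
    One photo is taken per hands-free stretch: the first clear frame
    after the hands leave. The loop then waits for the hands to come
    back and leave again before shooting.
    """
    captures = []
    armed = True  # ready to shoot at the next clear frame
    for index, visible in enumerate(hand_visible):
        if visible:
            armed = True  # hands are in the scene; re-arm for the next shot
        elif armed:
            captures.append(index)  # coast is clear: take one photo
            armed = False  # don't shoot again until the hands return
    return captures
```

For example, the flag sequence `[False, False, True, True, False, False, True, False]` yields shots at frames 0, 4 and 7: one per gap between hand movements.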

If it’s still not clicking for you, check out the video below. [Nick] first shows the raw unedited video, which primarily consists of him moving three LEGO figures around, and then the final product produced by his system. All the images of him fiddling with the scene have been automatically trimmed, leaving behind a short animated clip of the characters moving on their own.

Now don’t be fooled: it’s still going to take a while. By our count, it took two solid minutes of moving Minifigs around to produce just a few seconds of animation. So while it’s a quicker pace than traditional stop motion production, it certainly isn’t fast.

Machine learning isn’t the only modern technology that can simplify stop motion production. We’ve seen a few examples of using 3D-printed objects instead of manually adjusted figures. It still takes a long time to print, and of course it eats up a ton of filament, but the mechanical precision of the printed scenes makes for a very clean final result.

My first thought was “Cool!”

Followed by “I don’t have a Jetson….”

Maybe something similar could be done with frame-by-frame capture of the webcam feed, comparison between frames, and detection of hand or glove colour using changes in image statistics.
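Here is a minimal sketch of the frame-comparison part of that idea. It assumes grayscale frames arrive as equal-length lists of 0–255 pixel values; a real version would pull them from the webcam with something like OpenCV. The function names and the default thresholds are illustrative guesses, not tested values, and the hand/glove colour detection is left out.

```python
from typing import List, Sequence


def mean_abs_diff(a: Sequence[int], b: Sequence[int]) -> float:
    """Average per-pixel absolute difference between two grayscale frames."""
    if len(a) != len(b) or not a:
        raise ValueError("frames must be non-empty and the same size")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def stable_frames(frames: List[Sequence[int]], threshold: float = 2.0,
                  still_count: int = 3) -> List[int]:
    """Indices of frames to keep as stop motion shots.

    A frame is kept once the scene has stopped changing, i.e. after
    `still_count` consecutive frame-to-frame differences fall below
    `threshold`. Only one frame is kept per still period; any movement
    (such as a hand in the scene) re-arms the trigger.
    """
    keep = []
    still = 0     # consecutive "unchanged" comparisons so far
    armed = True  # allowed to keep a frame in the current still period
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i - 1], frames[i]) < threshold:
            still += 1
            if still >= still_count and armed:
                keep.append(i)
                armed = False
        else:
            still = 0
            armed = True
    return keep
```

This sidesteps the need for a trained model, at the cost of treating any motion (a passing shadow, a flickering light) as a hand.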

The concept, at least, can be implemented on any computer with enough horsepower to process the images, which is almost any computer these days.

The “AI” part needs a good bit of initial training time, but it sounds like that has already been done for this creator, since he is using a hand pose detection model. So just find yourself a training set you like, and you could probably then run the “AI” on a Pi well enough for stop motion work if you really wanted to. More computing power gives a higher frame rate through the hand detection, so your image is taken as soon as the hands are out of frame every time. That sounds like a bad thing to me, though: the hands might still be casting a shadow, so the frame to keep should be, say, half a second after no hands are detected…
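The half-second hold-off suggested above could look something like this. It is a sketch under assumptions: detections arrive as hypothetical `(timestamp_in_seconds, hand_visible)` pairs from whatever model is in use, and the 0.5 s default is the commenter’s guess rather than a measured figure.

```python
from typing import Iterable, List, Optional, Tuple


def delayed_captures(detections: Iterable[Tuple[float, bool]],
                     hold_off: float = 0.5) -> List[float]:
    """Return the timestamps at which a photo would be taken.

    A shot is taken only once no hand has been detected for at least
    `hold_off` seconds, giving shadows and stray fingers time to clear.
    Each hands-free stretch produces at most one shot.
    """
    shots = []
    clear_since: Optional[float] = None  # start of the current hands-free stretch
    taken = False                        # already shot during this stretch?
    for t, hand_visible in detections:
        if hand_visible:
            clear_since = None  # a hand reappeared: restart the countdown
            taken = False
        else:
            if clear_since is None:
                clear_since = t
            if not taken and t - clear_since >= hold_off:
                shots.append(t)
                taken = True
    return shots
```

The trade-off is a little latency per shot in exchange for fewer frames spoiled by a hand that has only just left the scene.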

It doesn’t seem like there’s anything here that would stop you from using this on any CUDA platform, i.e. a computer with a relatively recent NVIDIA GPU.

Performance-wise, I don’t think any of the Jetsons are more powerful than something like the GTX 1080, but considering the cost of getting a video card these days, they provide a cheaper, low-power option.

The Arduboy on the table!

My daughter had a flipcard movie maker program on one of her handhelds (DS2?).

It would take a sequence of hand-drawn pictures and display them rapidly in sequence.

I never was able to get her to understand that each picture must only change a little bit from the previous one.

So, her “movies” were a sequential barrage of random pictures.

Check out Andymation at https://www.youtube.com/user/andymation/videos

Couldn’t you just get another guy to snap the pictures?

Or have a remote, even.

Next up, have the AI look at adjacent frames and, using blob detection and path planning, interpolate what the intermediate frame should be. Then you could have temporal smoothing in the stop motion: you wouldn’t have to make ultra-fine movements unless necessary, and the animation would seem more fluid. Perhaps the interpolation routine could be rerun on its own frames, adding multiple frames between user-manipulated frames over several iterations.
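Setting aside the hard parts (blob detection and path planning), the “rerun it on its own frames” idea maps onto repeated midpoint subdivision. This is a minimal sketch that assumes each keyframe has already been reduced to a list of `(x, y)` blob centroids, with the same blobs in the same order in every frame. It uses straight-line motion as a stand-in for real path planning, and `subdivide` is a hypothetical helper.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Frame = List[Point]  # one (x, y) centroid per tracked blob, same order in every frame


def midpoint(a: Frame, b: Frame) -> Frame:
    """Intermediate frame: each blob moved halfway along a straight line."""
    return [((ax + bx) / 2, (ay + by) / 2) for (ax, ay), (bx, by) in zip(a, b)]


def subdivide(keyframes: List[Frame], iterations: int) -> List[Frame]:
    """Insert a midpoint frame between every adjacent pair, `iterations` times.

    Each pass reuses the frames produced by the previous pass, so n
    keyframes grow to (n - 1) * 2**iterations + 1 frames.
    """
    frames = keyframes
    for _ in range(iterations):
        if len(frames) < 2:
            break
        refined = [frames[0]]
        for prev, nxt in zip(frames, frames[1:]):
            refined.append(midpoint(prev, nxt))
            refined.append(nxt)
        frames = refined
    return frames
```

This only produces blob positions. Rendering actual in-between images would still need something like image warping or a learned frame-interpolation model.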

Now that is a good idea.

I enjoy Parks and Recreation and your deep-cut reference to such. That is all.

I do not see much improvement over a pedal or voice-activated setup. And I see a potential problem: lighting. The hands might leave the frame, but they often still affect the image by blocking light, which might be difficult for the AI to differentiate from planned lighting changes.
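One rough way to catch the shadow problem, sketched under assumptions: keep a reference frame shot with the hands well clear, and reject any candidate whose mean brightness drifts too far from it. Frames are again flat lists of grayscale values, the function names are hypothetical, and the 5% tolerance is an arbitrary placeholder. A global average like this cannot tell a stray shadow from a deliberate lighting change, which is exactly the ambiguity raised above.

```python
from typing import Sequence


def mean_brightness(frame: Sequence[int]) -> float:
    """Average grayscale value (0-255) of a non-empty frame."""
    if not frame:
        raise ValueError("frame must be non-empty")
    return sum(frame) / len(frame)


def lighting_ok(candidate: Sequence[int], reference: Sequence[int],
                tolerance: float = 0.05) -> bool:
    """True if the candidate's mean brightness is within `tolerance`
    (as a fraction) of the reference frame's mean brightness."""
    ref = mean_brightness(reference)
    if ref == 0:
        # An all-black reference: only an all-black candidate matches.
        return mean_brightness(candidate) == 0
    return abs(mean_brightness(candidate) - ref) / ref <= tolerance
```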

Didn’t Kubo use 3D printing to do their stop motion?