In a double-blast from the past, [Ian Failes]’ 2018 interview with [Phil Tippett] and others who worked on Jurassic Park is a great look at how the dinosaurs in this 1993 blockbuster movie came to be. The dinosaurs were originally conceived as stop-motion animatronics, with some motion blurring applied using a method called go-motion. A large team of puppeteers was actively working on turning the book into a movie when [Steven Spielberg] decided to go in a different direction after seeing a computer-generated Tyrannosaurus rex test made by Industrial Light and Magic (ILM).

Naturally, this left [Phil Tippett] and his crew rather flabbergasted, leading to a range of puppeteering-related extinction jokes. Of course, this was the early 90s, and computer-generated imagery (CGI) animators were still very scarce. This led to an interesting hybrid solution where [Tippett]’s team was put in charge of the dinosaur motion using a custom gadget called the Dinosaur Input Device (DID). This was effectively a stop-motion puppet tricked out with motion capture sensors.

This way the puppeteers could provide motion data for the CG dinosaur using their stop-motion skills, albeit with the computer handling a lot of interpolation. Meanwhile, ILM could handle the integration and sprucing up of the final result using their existing pool of artists. As a bridge between the old and new, DIDs provided the means for puppeteers and CGI artists to cooperate, creating the first major CGI production that still holds up today.
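To get a feel for what "the computer handling a lot of interpolation" can mean, here is a minimal sketch that fills in the frames between two posed keyframes. It assumes plain linear interpolation of joint angles; the interview does not say which scheme ILM's software used, and the joint names and function names here are purely hypothetical.

```python
# Hypothetical sketch: linear in-betweening of puppet poses.
# A pose maps a joint name to an angle in degrees, as an armature
# sensor might report it. None of these names come from the real DID.

def interpolate_pose(key_a, key_b, t):
    """Blend two poses; t=0 gives key_a, t=1 gives key_b."""
    return {
        joint: key_a[joint] + (key_b[joint] - key_a[joint]) * t
        for joint in key_a
    }

def inbetween_frames(key_a, key_b, steps):
    """Return `steps` evenly spaced poses strictly between two keyframes."""
    return [
        interpolate_pose(key_a, key_b, i / (steps + 1))
        for i in range(1, steps + 1)
    ]

if __name__ == "__main__":
    pose_a = {"neck": 0.0, "jaw": 10.0}
    pose_b = {"neck": 30.0, "jaw": 20.0}
    for frame in inbetween_frames(pose_a, pose_b, 3):
        print(frame)
```

A real animation system would more likely use splines with easing rather than straight lines, but the idea is the same: the puppeteer poses the key moments and the software generates everything in between.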

Even if DIDs went the way of the non-avian dinosaurs, they have left dino-sized footprints on the movie industry.

Thanks to [Aaron] for the tip.

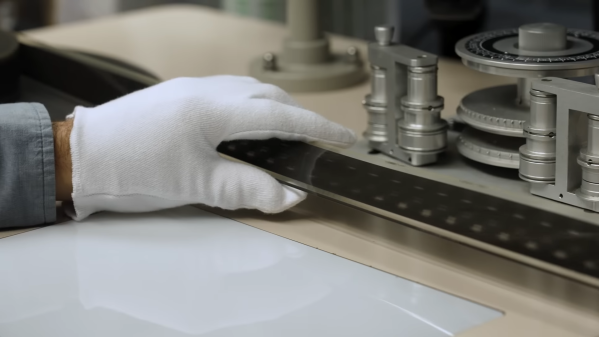

Top image: Raptor DID. Photo by Matt Mechtley.