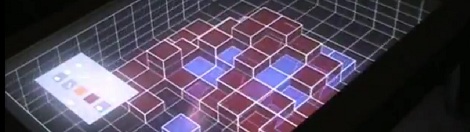

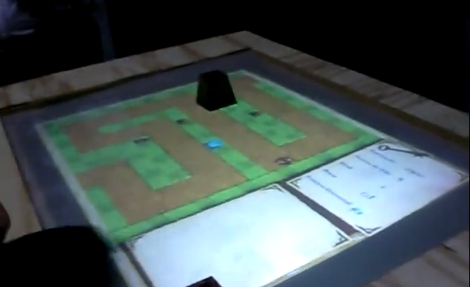

[Bastian] sent in a coffee table he built. This isn’t a place to set your drinks and copies of Make, though: it’s a multitouch table with a 3D display. Since no description can do this table justice, take a look at the video.

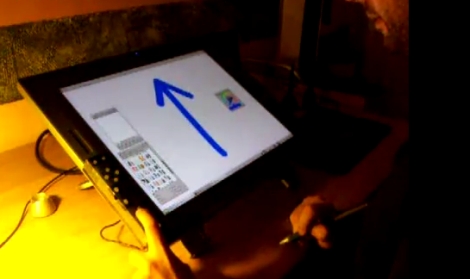

The build was inspired by the subject of this Hackaday post where [programming4fun] was able to build a ‘holographic display’ using a regular 2D projector and a Kinect. Both builds work on the principle of redrawing the 3D space in relation to the user’s head: as [Bastian] moves his head around the coffee table, the Kinect tracks his location and moves the three-dimensional grid of boxes in the opposite direction. It’s extremely clever, and looks to be a promising user interface.
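For the curious, the head-tracking trick can be sketched in a few lines of Python. This is not [Bastian]’s code, just a minimal illustration under our own assumptions: the table surface is the plane z = 0, the head sits above it at positive z, and the hypothetical function `project_to_table` finds where the line from the eye to a virtual point crosses the surface.

```python
def project_to_table(head, point):
    """Project a virtual 3D point onto the table surface (the plane z = 0)
    as seen from the viewer's head.

    head  -- (x, y, z) of the viewer's eye, with z > 0 (above the table)
    point -- (x, y, z) of the virtual point; z > 0 floats above the
             surface, z < 0 sits below it
    Coordinates and units are assumptions made for this sketch.
    """
    hx, hy, hz = head
    px, py, pz = point
    if hz <= pz:
        raise ValueError("the point must be closer to the table than the head")
    # Parameter along the ray head -> point at which the ray reaches z = 0.
    t = hz / (hz - pz)
    return (hx + (px - hx) * t, hy + (py - hy) * t)


# A box corner floating 0.5 units above the table, viewed from 1 unit up.
centered = project_to_table((0.0, 0.0, 1.0), (0.0, 0.0, 0.5))
shifted = project_to_table((0.2, 0.0, 1.0), (0.0, 0.0, 0.5))
print(centered, shifted)
```

With these numbers, moving the head 0.2 units to the right shifts the drawn position of the floating point to about -0.2, which is the opposite direction. A point below the surface would shift the same way as the head instead, and that difference is what sells the depth.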

In addition to a Kinect, the coffee table uses a Microsoft Surface-like display; four infrared lasers are placed at the corners, and their light is picked up by a camera next to the projector in the base.
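We don’t know what software [Bastian] runs on the camera feed, but touch tables like this usually boil down to finding bright blobs in an infrared image. Here is a minimal pure-Python sketch of that idea, assuming the frame arrives as a 2D list of 0–255 brightness values; the function name `find_touches` and the threshold of 200 are ours, not the build’s.

```python
def find_touches(frame, threshold=200):
    """Return (row, col) centroids of connected bright regions in a
    grayscale frame given as a list of equal-length rows."""
    rows, cols = len(frame), len(frame[0])
    seen = [[False] * cols for _ in range(rows)]
    touches = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or frame[r][c] < threshold:
                continue
            # Flood-fill the 4-connected blob that starts at this pixel.
            seen[r][c] = True
            stack, pixels = [(r, c)], []
            while stack:
                y, x = stack.pop()
                pixels.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and frame[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            # The blob's centroid stands in for the touch position.
            cy = sum(p[0] for p in pixels) / len(pixels)
            cx = sum(p[1] for p in pixels) / len(pixels)
            touches.append((cy, cx))
    return touches


# Tiny made-up frame: one 2x2 blob in a corner and one single bright pixel.
frame = [
    [255, 255, 0, 0, 0],
    [255, 255, 0, 0, 0],
    [0,   0,   0, 0, 0],
    [0,   0,   0, 0, 255],
]
print(find_touches(frame))
```

A real setup would also need to map camera pixels to projector coordinates and track blobs from frame to frame, which this sketch leaves out.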

After the break you can see the demo video and a gallery of the images [Bastian] put up on the NUI group forum.
