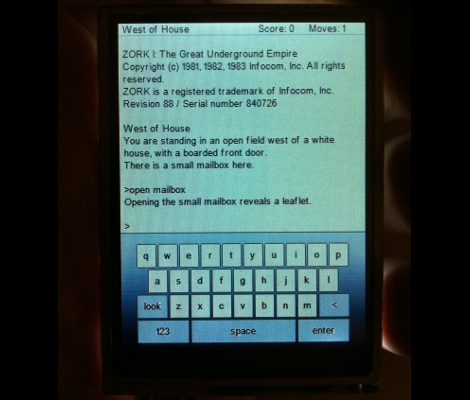

[Jane] wrote in to let us know about the touch-based synthesizer she and her classmates just built. They call it the ToneMatrix Touch, as it was inspired by a Flash application called ToneMatrix. We’re familiar with that application, as it’s been the inspiration for other physical builds as well.

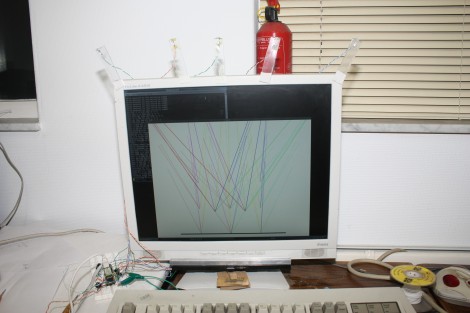

A resistive touch screen in the surface glass of the device lets you tap the cells you wish to turn on or off. Below the glass is a grid of LEDs which represent sound bits in the looping synthesizer track. Fifteen shift registers drive the LED matrix, with the entire system controlled by an ATmega644 microcontroller. Although the control scheme is very straightforward, the jumper wires used to connect the matrix to the shift registers make for a rat’s nest of wireporn that has been hidden away inside the case. Check out the demonstration video after the break to see what it looks and sounds like in use.
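For anyone wondering why shift registers make the control side so simple, here is a rough host-side sketch in Python of how a chain of them gets loaded. This is not the team's firmware. We're assuming 8-bit serial-in, parallel-out parts wired as one daisy chain, and the names `ShiftRegisterChain` and `write_frame` are made up for illustration. The only figure taken from the build is the count of fifteen registers.

```python
from typing import List

REGISTER_BITS = 8   # assumption: 8-bit serial-in/parallel-out registers
NUM_REGISTERS = 15  # from the write-up: fifteen shift registers


class ShiftRegisterChain:
    """Hypothetical model of daisy-chained shift registers with output latches."""

    def __init__(self, num_registers: int = NUM_REGISTERS,
                 bits: int = REGISTER_BITS) -> None:
        self.size = num_registers * bits
        self.shift_stage: List[int] = [0] * self.size  # bits moving down the chain
        self.outputs: List[int] = [0] * self.size      # latched bits driving the LEDs

    def clock_in(self, bit: int) -> None:
        """One clock pulse: every bit moves one place along, the new bit enters at 0."""
        self.shift_stage = [bit & 1] + self.shift_stage[:-1]

    def latch(self) -> None:
        """Copy the shifted bits to the outputs so all LEDs update at once."""
        self.outputs = list(self.shift_stage)


def write_frame(chain: ShiftRegisterChain, frame: List[int]) -> None:
    """Load one full frame of LED states (1 = lit) and latch it."""
    if len(frame) != chain.size:
        raise ValueError("frame length must match the number of outputs")
    # The first bit clocked in ends up farthest down the chain,
    # so send the frame in reverse order.
    for bit in reversed(frame):
        chain.clock_in(bit)
    chain.latch()
```

The point of the sketch is that the microcontroller only needs a data line, a clock line, and a latch line to set every output in the chain, which is why the control scheme stays simple even when the wiring between registers and LEDs does not.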
