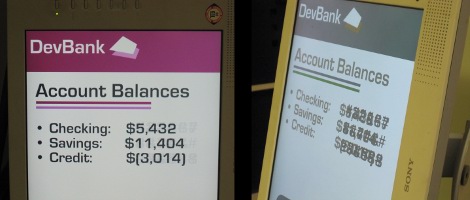

This wire-frame cube appears to be floating in mid-air because it actually is. This is a project which [Tom] calls a Laminar Flow Fog Screen. He built a device that puts out a faint sheet of fog, which the intense light from a projector illuminates. The real trick is getting a uniform fog wall, and that's where the laminar part comes in. Laminar flow is a regime in which a fluid moves in smooth, parallel layers that don't mix, so there are no eddies to introduce turbulence. It's a favorite trick with water, too.

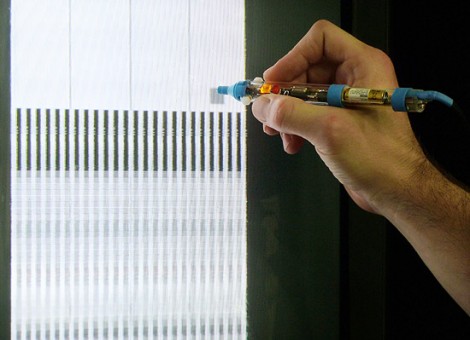

[Tom’s] fog screen starts with a PC fan to move the air. That airflow is then smoothed and guided by a sponge and a bundle of drinking straws. This assembly establishes the laminar flow, and along the way the air picks up fog from an ultrasonic fogger.
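One way to see why a bundle of narrow straws helps is the Reynolds number, Re = v·D/ν, which compares inertial to viscous effects. For flow in a tube, values below roughly 2300 are usually treated as laminar. The sketch below is only a back-of-the-envelope check under assumed numbers: a 6 mm straw, fan-driven air speeds of 0.5 to 2 m/s, and ν ≈ 1.5×10⁻⁵ m²/s for room-temperature air. None of these are measurements from [Tom’s] build, and `reynolds_number` and `is_laminar` are hypothetical helper names.

```python
# Back-of-the-envelope Reynolds-number check for air moving through a drinking straw.
# All values are illustrative assumptions, not measurements from the original project.

AIR_KINEMATIC_VISCOSITY = 1.5e-5  # m^2/s, approximate for air near room temperature
PIPE_LAMINAR_LIMIT = 2300         # commonly quoted laminar/turbulent transition for pipe flow


def reynolds_number(velocity_m_s: float, diameter_m: float,
                    kinematic_viscosity: float = AIR_KINEMATIC_VISCOSITY) -> float:
    """Return Re = v * D / nu for flow through a circular tube."""
    return velocity_m_s * diameter_m / kinematic_viscosity


def is_laminar(re: float, limit: float = PIPE_LAMINAR_LIMIT) -> bool:
    """Rule of thumb: treat tube flow below the transition value as laminar."""
    return re < limit


if __name__ == "__main__":
    straw_diameter = 0.006  # m, assumed ~6 mm drinking straw
    wide_duct = 0.12        # m, assumed 120 mm channel with no straws, for comparison
    for v in (0.5, 1.0, 2.0):  # m/s, assumed air speeds from the fan
        re_straw = reynolds_number(v, straw_diameter)
        re_duct = reynolds_number(v, wide_duct)
        print(f"v = {v:.1f} m/s: straw Re = {re_straw:.0f} (laminar: {is_laminar(re_straw)}), "
              f"open duct Re = {re_duct:.0f} (laminar: {is_laminar(re_duct)})")
```

At 1 m/s the assumed straw gives Re = 400, comfortably laminar, while the same air in an open 120 mm channel gives Re = 8000. Splitting the flow into many small tubes shrinks D, which is a big part of why straw bundles (and honeycomb flow straighteners) calm the stream.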

The only real problem is that you want nothing behind the screen for the projector to hit, as if it were shooting off into infinity. Otherwise, much of the light passes right through the fog and shows up on the wall behind it, ruining the effect. Outdoor setups are great for this, as long as there's no breeze to disturb your carefully established fog screen.

You can find a short test clip embedded after the break, but there are other videos at the link above.
