How does one go about programming a drone to fly itself through the real world to a location without crashing into something? This is a tough problem, made even tougher if you’re pushing speeds higher and high. But any article with “MIT” implies the problems being engineered are not trivial.

The folks over at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have put their considerable skill set to work in tackling this problem. And what they’ve come up with is (not surprisingly) quite clever: they’re embracing uncertainty.

Why Is Autonomous Navigation So Hard?

Suppose we task ourselves with building a robot that can insert a key into the ignition switch of a motor vehicle and start the engine, and could do so in roughly the same time-frame that a human could do — let’s say 10 seconds. It may not be an easy robot to create, but we can all agree that it is very doable. With foreknowledge of the coordinate information of the vehicle’s ignition switch relative to our robotic arm, we can place the key in the switch with 100% accuracy. But what if we wanted our robot to succeed in any car with a standard ignition switch?

Now the location of the ignition switch will vary slightly (and not so slightly) for each model of car. That means we’re going to have to deal with this in real time and develop our coordinate system on the fly. This would not be too much of an issue if we could slow down a little. But keeping the process limited to 10 seconds is extremely difficult, perhaps impossible. At some point, the amount of environment information and computation becomes so large that the task becomes digitally unwieldy.

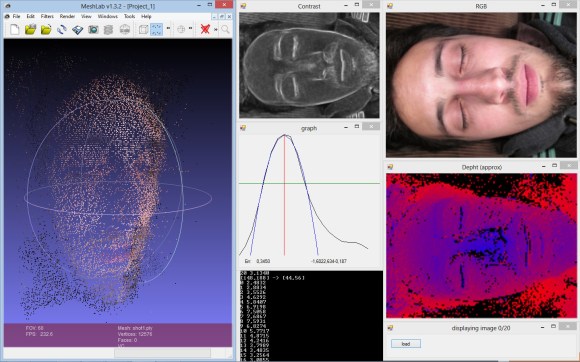

This problem is analogous to autonomous navigation. The environment is always changing, so we need sensors to constantly monitor the state of the drone and its immediate surroundings. If the obstacles become too great, it creates another problem that lies in computational abilities… there is just too much information to process. The only solution is to slow the drone down. NanoMap is a new modeling method that breaks the artificial speed limit normally imposed with on-the-fly environment mapping.

Continue reading “MIT Breaks Autonomous Drone Speed Limits By Not Sweating Obstacles”