Ever look out at a pond, stream, or river, and wonder how deep it is? For large bodies of water that are considered navigable, it’s easy enough to pull up a chart and find out. But what if there’s no public data for the area you’re interested in?

Well, you could spend all day on a little boat taking depth readings and making your own chart, but if you’re anything like [Clay], you could build a solar-powered autonomous robot to do it for you. He’s been working on the boat, which he calls Gumption Trap, for the better part of a year now. If we had to guess, we’d say the experience of designing and building it has ended up being a bit more interesting to him than the actual depth of the water, but that’s fine by us.

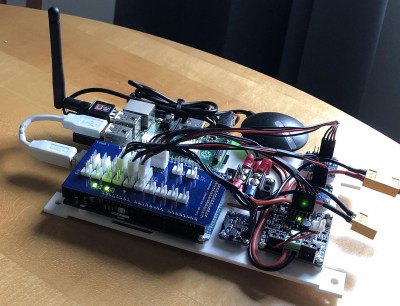

The design of the boat is surprisingly economical, as far as marine designs go. Two capped four-inch PVC pipes are used as pontoons, and 3D printed brackets attach those to an aluminum extrusion frame that holds the electronics and solar panel high above the water. This arrangement provides an exceptionally stable platform that would be all but impossible to flip under normal circumstances.

Around the back of the craft, there’s a pair of massive 3D printed thrusters, complete with some remarkably chunky printed propellers. The lack of rudders keeps things simple, with differential thrust between the two motors enough to keep the Gumption pointed in the right direction.
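
For a rough idea of how steering without rudders can work, here is a minimal sketch of differential thrust mixing. This is not [Clay]'s code: the function name `mix_differential_thrust`, the -1 to 1 command range, and the sign convention (positive `turn` steers to starboard) are all our own assumptions for illustration.

```python
def mix_differential_thrust(throttle, turn):
    """Blend forward throttle and turn demand into (left, right) motor commands.

    Hypothetical helper: inputs and outputs are assumed to be in the range -1..1,
    and a positive turn value is assumed to steer the boat to starboard.
    """
    # Speeding up the left motor and slowing the right one yaws the hull to the right.
    left = throttle + turn
    right = throttle - turn

    # If either command exceeds full power, scale both down together so the
    # ratio between them (and therefore the turn) is preserved.
    scale = max(1.0, abs(left), abs(right))
    return left / scale, right / scale


if __name__ == "__main__":
    print(mix_differential_thrust(0.5, 0.0))   # straight ahead at half power
    print(mix_differential_thrust(0.5, 0.25))  # gentle turn to starboard
    print(mix_differential_thrust(0.0, 1.0))   # spin in place
```

In a real autopilot, the `turn` value would typically come from a heading controller comparing the compass reading against the bearing to the next waypoint, but that part is outside the scope of this sketch.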
