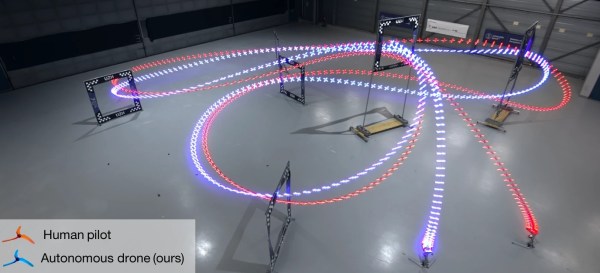

Even with all the technological advancements in recent years, autonomous systems have never been able to keep up with top-level human racing drone pilots. However, it looks like that gap has been closed with Swift – an autonomous system developed by the University of Zurich’s Robotics and Perception Group.

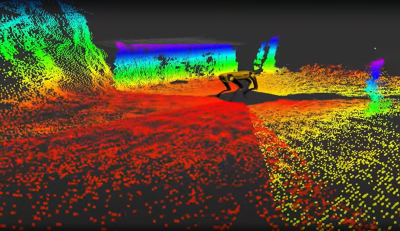

Previous research projects have come close, but they relied on optical motion capture setups in a tightly controlled environment. In contrast, Swift is completely independent of remote inputs and uses only an onboard computer, IMU, and camera for real-time navigation and control. It does, however, require a pretrained machine learning model for the specific track, which maps the drone’s estimated position, velocity, and orientation directly to control inputs. The details of how the system works are well explained in the video after the break.
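To make the "state in, control inputs out" idea concrete, here is a minimal Python sketch of such a mapping. It is not Swift's actual network: the layer sizes, the quaternion orientation format, the four-value output (assumed here to be collective thrust plus three body rates), and the names `TrackPolicy` and `act` are all illustrative assumptions, and random weights stand in for a model that would really be trained for one specific track.

```python
import math
import random

# All dimensions below are assumptions for illustration, not Swift's real ones.
STATE_DIM = 10   # position (3) + velocity (3) + orientation quaternion (4)
ACTION_DIM = 4   # assumed: collective thrust + three body rates
HIDDEN_DIM = 16  # arbitrary hidden layer width


def make_layer(n_in, n_out, rng):
    """Build random weights that stand in for a trained layer (hypothetical)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense(layer, x, activation):
    """Apply one fully connected layer followed by an activation function."""
    weights, biases = layer
    return [activation(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


class TrackPolicy:
    """Toy policy: maps an estimated drone state directly to a control command."""

    def __init__(self, seed=0):
        rng = random.Random(seed)  # fixed seed so the stand-in weights are repeatable
        self.hidden = make_layer(STATE_DIM, HIDDEN_DIM, rng)
        self.output = make_layer(HIDDEN_DIM, ACTION_DIM, rng)

    def act(self, position, velocity, orientation):
        state = list(position) + list(velocity) + list(orientation)
        if len(state) != STATE_DIM:
            raise ValueError(f"expected {STATE_DIM} state values, got {len(state)}")
        hidden = dense(self.hidden, state, math.tanh)
        # tanh keeps each command in [-1, 1]; a real system would rescale
        # these to physical thrust and rate limits.
        return dense(self.output, hidden, math.tanh)


policy = TrackPolicy()
command = policy.act(
    position=(0.0, 0.0, 1.5),            # metres, from the onboard state estimate
    velocity=(4.0, 0.0, 0.0),            # metres per second
    orientation=(1.0, 0.0, 0.0, 0.0),    # unit quaternion (w, x, y, z)
)
print(command)  # four normalized control values
```

The point of the sketch is the shape of the interface: everything the controller needs arrives as one estimated state vector, and a single forward pass produces the command, with no separate trajectory planner in between.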

The paper linked above contains a few more interesting details. Swift won 60% of the time, and its lap times were significantly more consistent than those of the human pilots. While human pilots were often faster on certain sections of the course, Swift was faster overall. It picked more efficient trajectories over multiple gates, whereas the human pilots seemed to plan one gate in advance at most. On the other hand, human pilots could recover quickly from a minor crash, while Swift did not include crash recovery.

The final results are impressive, especially given that all the processing and sensing happens on board the drone. However, it still requires a well-mapped track, so a human pilot should still come out on top given limited information about a new track. It would also be interesting to see how it handles large courses with gates that are much farther apart.
