Even though giant multitouch display tables have been around for a few years now, we have yet to see them being used in the wild. While the barrier to entry for a Microsoft Surface is very high, one of the biggest problems in implementing a touch table is one of interaction: how exactly should the display interpret multiple commands from multiple users? [Stephan], [Christian], and [Patrick] came up with an interesting solution for sorting out who is touching where by having a computer look at shoes.

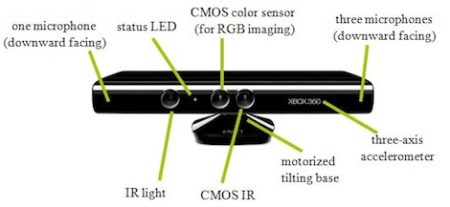

The system uses a Kinect mounted on the edge of the table to extract users from its depth images. From there, each interaction on the display can be attributed to a specific user based on hand and arm orientation. As an added bonus, the computer can also identify users by their shoes: if it recognizes the pair someone is wearing, that person can simply walk up to the table and the software will know who they are.
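The project doesn't spell out exactly how a touch gets matched to an arm, so here is a minimal sketch of one plausible heuristic, not the team's actual method. It assumes the depth images have already been reduced to a shoulder and hand position per user, projected onto the table plane in meters. A touch is assigned to the user whose shoulder-to-hand segment passes closest to it, within a cutoff. The `User` class, `assign_touch()`, the `max_distance` threshold, and all coordinates are hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class User:
    name: str
    # Hypothetical inputs: joint positions projected onto the table plane (meters).
    shoulder: tuple[float, float]
    hand: tuple[float, float]


def point_to_segment_distance(p, a, b):
    """Euclidean distance from point p to the line segment a-b."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        # Degenerate segment: shoulder and hand coincide.
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment and clamp to its endpoints.
    t = ((px - ax) * dx + (py - ay) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    cx, cy = ax + t * dx, ay + t * dy
    return math.hypot(px - cx, py - cy)


def assign_touch(touch, users, max_distance=0.1):
    """Return the user whose arm segment lies closest to the touch point,
    or None if no arm is within max_distance (an assumed cutoff)."""
    best_user, best_dist = None, max_distance
    for user in users:
        d = point_to_segment_distance(touch, user.shoulder, user.hand)
        if d <= best_dist:
            best_user, best_dist = user, d
    return best_user


# Usage: two users reaching onto the table from different sides.
alice = User("alice", shoulder=(0.0, 0.0), hand=(0.5, 0.5))
bob = User("bob", shoulder=(1.0, 0.0), hand=(0.8, 0.6))

print(assign_touch((0.5, 0.52), [alice, bob]).name)  # alice
print(assign_touch((0.0, 1.0), [alice, bob]))        # None
```

Measuring distance to the whole arm segment, rather than only to the hand, keeps the decision stable when two users' hands are close together but their arms come from opposite edges of the table.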