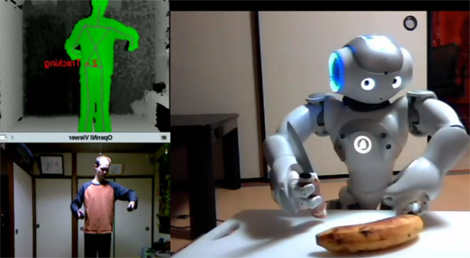

There never seems to be a lull in the stream of novel hacks that people create around Microsoft’s Kinect. One of the more recent uses for the device comes from [Interactive Fabrication] and lets you fabricate yourself, in a manner of speaking.

The process uses the Kinect to create a 3D model of a person, which is then displayed on a computer monitor. Once you have selected your preferred pose, the model is rendered by a plastic 3D printer. Each scan results in a 3cm x 3cm plastic model complete with snap-together dovetail joints that allow the models to be combined. A full body scan can be constructed with three of these tiles, resulting in a neat “Han Solo trapped in Carbonite” effect.
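For anyone curious how a depth scan might become a printable relief, here is a minimal sketch in Python. It is not [Interactive Fabrication]'s code, just one plausible approach: clamp each depth reading to a near/far range, map nearer points to taller relief, and write the surface out as ASCII STL triangles scaled to a 30mm tile. The function names, the `near`/`far` cutoffs, and the 5mm maximum height are all assumptions for illustration. It only emits the top surface (no base, walls, or dovetail joints), so a real print would need the mesh closed into a solid first.

```python
def depth_to_relief(depth, near, far, max_height_mm=5.0):
    """Map depth readings to relief heights; nearer points stand taller.

    depth is a grid (list of rows) of distance readings. near, far and
    max_height_mm are illustrative parameters, not values from the project.
    """
    span = float(far - near)
    heights = []
    for row in depth:
        out = []
        for d in row:
            clamped = min(max(d, near), far)  # ignore anything outside the range
            out.append((far - clamped) / span * max_height_mm)
        heights.append(out)
    return heights


def relief_to_stl(heights, tile_mm=30.0, name="tile"):
    """Write the relief surface as ASCII STL text (top surface only).

    Needs a grid of at least 2 x 2 samples. The longer side of the grid
    is scaled to tile_mm.
    """
    rows, cols = len(heights), len(heights[0])
    step = tile_mm / (max(rows, cols) - 1)

    def vertex(r, c):
        return (c * step, r * step, heights[r][c])

    lines = ["solid " + name]
    for r in range(rows - 1):
        for c in range(cols - 1):
            a, b = vertex(r, c), vertex(r, c + 1)
            d, e = vertex(r + 1, c), vertex(r + 1, c + 1)
            # Two counter-clockwise triangles per grid cell, seen from above.
            for tri in ((a, b, e), (a, e, d)):
                # Zero normal: assumes the slicer recomputes it from winding.
                lines.append("  facet normal 0 0 0")
                lines.append("    outer loop")
                for x, y, z in tri:
                    lines.append("      vertex %.3f %.3f %.3f" % (x, y, z))
                lines.append("    endloop")
                lines.append("  endfacet")
    lines.append("endsolid " + name)
    return "\n".join(lines)


if __name__ == "__main__":
    # Tiny made-up depth grid standing in for a real Kinect frame.
    fake_depth = [[500, 1000], [1500, 2000]]
    relief = depth_to_relief(fake_depth, near=500, far=1500)
    print(relief_to_stl(relief))
```

Feeding it a real depth frame would mean grabbing one from whatever Kinect driver you use and possibly downsampling it first, since a full frame produces a lot of triangles for a 3cm tile.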

Currently only about 1/3 of the Kinect’s full resolution is being used to create these models, which is promising news for those who would try this at home. Theoretically, you should be able to create larger, more detailed models of yourself, provided you have a 3D printer at your disposal.

Keep reading for a quick video presentation of the fabrication process.