[Florian] wants to browse the web like an internet cowboy from a cyberpunk novel. Unfortunately, VR controllers are great for games but tie up the hands so badly that typing is out of the question. A new input method was needed, one that would free his fingers for typing but still give his hands detailed input into the virtual world.

Since VR goggles have… hopefully… already reached peak ridiculousness, his first idea was to glue a Leap Motion controller to the front of them. They couldn’t look any sillier, after all. The Leap controller was designed to track hands, and when combined with the IMU built into the VR contraption, it did a pretty good job of putting his hands into the world. Unfortunately, the primary gesture used for a “click” only registered 80% of the time.

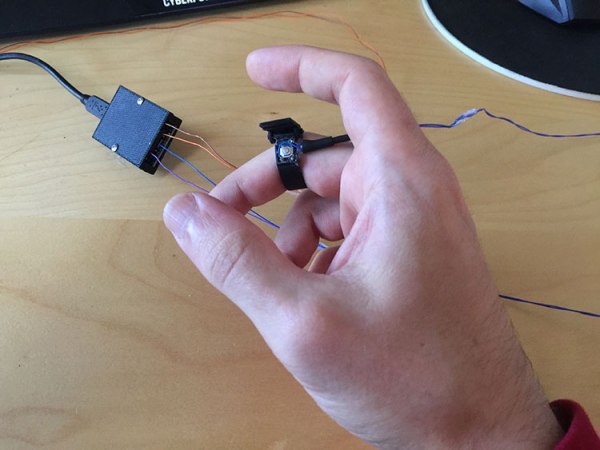

The gesture in question is a pinching motion, pushing the thumb and middle finger together. He couldn’t add a big button without incapacitating his hands for typing. It took a few iterations, but he arrived at a compact ring design with a momentary switch on it. The ring is wired to an Arduino on his wrist, yet stays far enough out of the way to let him type.
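The post doesn't show [Florian]'s firmware, so what follows is only a rough sketch of how a momentary switch like the one on the ring might be turned into clean "click" events. It assumes the contacts bounce for a few milliseconds and reports a click once the reading has held steady for a set time. The `DebouncedButton` class and its `stableMs` parameter are hypothetical names made up for illustration, not anything from the original project.

```cpp
#include <cstdint>

// Hypothetical debouncer for a momentary switch (illustrative only;
// not taken from the original project).
class DebouncedButton {
public:
    // stableMs: how long a reading must hold before it is trusted.
    explicit DebouncedButton(uint32_t stableMs) : stableMs_(stableMs) {}

    // Feed one raw reading (true = pressed) plus the current time in ms.
    // Returns true exactly once per press, when the press becomes stable.
    bool update(bool rawPressed, uint32_t nowMs) {
        if (rawPressed != lastRaw_) {
            lastRaw_ = rawPressed;
            lastChangeMs_ = nowMs;  // reading changed: restart the timer
        }
        bool clicked = false;
        if (lastRaw_ != stable_ && (nowMs - lastChangeMs_) >= stableMs_) {
            stable_ = lastRaw_;
            clicked = stable_;      // report only the released -> pressed edge
        }
        return clicked;
    }

    // Current debounced state of the switch.
    bool isPressed() const { return stable_; }

private:
    uint32_t stableMs_;
    uint32_t lastChangeMs_ = 0;
    bool lastRaw_ = false;
    bool stable_ = false;
};
```

On an Arduino, something like `button.update(digitalRead(pin) == LOW, millis())` inside `loop()` would drive it, assuming the switch pulls a pin configured as `INPUT_PULLUP` to ground; the pin choice is likewise an assumption. Keeping the timing logic free of hardware calls means it can be checked on a desktop before it ever touches the ring.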

It’s yet another development marching us toward usable VR. We personally can’t wait until we can use some technology straight out of a Stephenson or Gibson novel.