We occasionally make fun of new security vulnerabilities that have a catchy name and shiny website. We’re breaking new ground here, though, in covering a shiny website that makes fun of itself. So first off, this is a real vulnerability in Apple’s brand-new M1 chip. It’s got CVE-2021-30747, and in some very limited cases, it could be used for something malicious. The full name is M1ssing Register Access Controls Leak EL0 State, or M1RACLES. To translate that trying-too-hard-to-be-clever name to English, a CPU register is left open to read/write access from unprivileged userspace. It happens to be a two-bit register that doesn’t have a documented purpose, so it’s perfect for smuggling data between processes.

Do note that this is an undocumented register. If it turns out that it actually does something important, this vulnerability could get more serious in a hurry. Until then, thinking of it as a two-bit vulnerability seems accurate: the most we have to worry about is that two processes can use this register to pass information back and forth. This isn’t like Spectre or Rowhammer, where one process reads or writes the memory of an unrelated process. Here, both processes have to be in on the game.
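To get a feel for how little it takes to turn two shared bits into a data channel, here is a minimal simulation in Python. It does not touch the real register. `TwoBitRegister` is a hypothetical stand-in, and using one bit as a clock and the other as data is an assumption made for illustration, not necessarily what the published proof of concept does.

```python
class TwoBitRegister:
    """Hypothetical stand-in for a 2-bit register any process may read or write."""

    def __init__(self):
        self.value = 0

    def write(self, value):
        self.value = value & 0b11  # only two bits are stored

    def read(self):
        return self.value


def send(reg, data):
    """Sender: put one data bit in bit 0 and toggle bit 1 as a clock.

    Written as a generator so the receiver gets a turn after every write.
    """
    clock = 0
    for byte in data:
        for shift in range(7, -1, -1):  # most significant bit first
            clock ^= 1
            reg.write((clock << 1) | ((byte >> shift) & 1))
            yield


def receive(reg, sender):
    """Receiver: sample bit 0 each time the clock bit changes."""
    last_clock = 0
    bits = []
    out = bytearray()
    for _ in sender:
        value = reg.read()
        clock = value >> 1
        if clock == last_clock:
            continue  # nothing new has been written yet
        last_clock = clock
        bits.append(value & 1)
        if len(bits) == 8:
            byte = 0
            for bit in bits:
                byte = (byte << 1) | bit
            out.append(byte)
            bits.clear()
    return bytes(out)


def transfer(message):
    """Run sender and receiver in lockstep over one shared register."""
    reg = TwoBitRegister()
    return receive(reg, send(reg, message))
```

Calling `transfer(b"hunter2")` hands back the same bytes, one bit per register write. A real channel would have two independent processes and would need to cope with scheduling and timing, which this lockstep sketch deliberately ignores.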

The discoverer, [Hector Martin], points out one example where this could actually be abused: to bypass permissions on iOS devices. It’s a clever scenario. Third-party keyboards have always been just a little worrying, because they run code that can see everything you type, passwords included. The long-standing advice has been to never use such a keyboard if it asks for network access permissions. Apple has made this advice into a platform rule: no iOS keyboards get network access. But what if a device had a second malicious app installed, one that did have Internet access permissions? With a covert data channel, the keyboard could shuffle keystrokes off to its sister app, and get your secrets off the device.

So how much should you care about CVE-2021-30747? Probably not much. The shiny site is really a social experiment to see how many of us would write up the vulnerability without being in on the joke. Why go to the hassle? Apparently it was all an excuse to make this video, featuring the appropriate Bad Apple!! music video.

Half-Double’ing Down on Rowhammer

A few days ago, Google announced the details of Half-Double, and the glass is definitely Half-Double full with all the silly puns that come to mind. The concept is simple: If Rowhammer works because individual rows of RAM are so physically close together, does further miniaturization enable attacks against bits two rows away? The answer is a qualified yes.

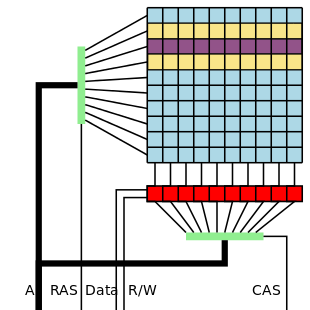

Quick refresher: Rowhammer is an attack first demonstrated against DDR3 back in 2014, where rapid access to one row of memory can cause bit-flip errors in the neighboring row. Since then, there have been efforts by chip manufacturers to harden against Rowhammer, including detection techniques. At the same time, researchers have kept advancing the art through techniques like Double-Sided Rowhammer, randomizing the order of reads, and attempts to synchronize the attack with the RAM’s refresh intervals. Half-Double is yet another way to overcome the protections built into modern RAM chips.

We start by specifying a particular RAM row as the victim (V). The row right beside it will be the near aggressor row (N), and the next row over we call the far aggressor row (F). A normal Rowhammer attack would simply alternate between reading from the near aggressor and a far-off decoy, rapidly toggling the row select line, which degrades the physical charge in neighboring bits. The Half-Double attack instead alternates between the far aggressor and a decoy row for 1000 cycles, and then reads from the near aggressor once. This process is repeated until the victim row has a bit flip, which often happens within a few dozen iterations. Because most of the hammering isn’t right beside the victim row, the built-in detection applies its mitigations to the wrong row, and the attack succeeds in spite of them.
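The difference between the two access patterns is easier to see written out. The sketch below only generates the order of row reads using the labels from the description above (N, F, and D for the decoy). It performs no memory access at all, and the function names and the `rounds` parameter are made up for illustration.

```python
from collections import Counter


def classic_pattern(reads):
    """Classic Rowhammer: alternate between the near aggressor and a decoy."""
    for _ in range(reads):
        yield "N"
        yield "D"


def half_double_pattern(far_cycles=1000, rounds=1):
    """Half-Double as described: many far/decoy cycles, then one near read."""
    for _ in range(rounds):
        for _ in range(far_cycles):
            yield "F"
            yield "D"
        yield "N"


def access_counts(pattern):
    """Count how many times each row label is read."""
    return Counter(pattern)
```

With a few dozen rounds, say `access_counts(half_double_pattern(rounds=30))`, the far aggressor and the decoy are each read 30,000 times while the near aggressor is read only 30 times. That imbalance is the point: the row next to the victim barely registers as being hammered.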

More Vulnerable Windows Servers

We talked about CVE-2021-31166 two weeks ago, a wormable flaw in Windows’ http.sys driver. [Jim DeVries] started wondering something as soon as he heard about the CVE. Was Windows Remote Management, running on port 5985, also vulnerable? Nobody seemed to know, so he took matters into his own hands, and confirmed that yes, WinRM is also vulnerable to this flaw. From what I can tell, this is installed and enabled by default on every modern Windows server.

I finally found time to answer my own question. WinRM *IS* vulnerable. This really expands the number of vulnerable systems, although no one would intentionally put that service on the internet.

— Jim DeVries (@JimDinMN) May 19, 2021

And far from his optimistic assertion that surely no one would expose that to the Internet… It’s estimated that there are over 2 million IPs doing just that.

More Ransomware

On the ransomware front, there is an interesting story out of the Republic of Ireland. The health system there was hit by Conti ransomware, and the price for decryption was set at the equivalent of $20 million. It came as a surprise, then, when a decryptor was freely published. There seems to be an ongoing theme in ransomware, that the larger groups are trying to manage how much attention they draw. On the other hand, this ransomware attack includes a threat to release private information, and the Conti group is still trying to extort money to prevent it. It’s an odd situation, to be sure.

Inside Baseball for Security News

I found a series of stories and tweets rather interesting, starting with the May Android updates at the beginning of the month. [Liam Tung] at ZDNet does a good job laying out the basics. First, when Google announced the May Android updates, they pointed out four vulnerabilities as possibly being actively exploited. Dan Goodin over at Ars Technica took umbrage with the imprecise language, calling the announcement “vague to the point of being meaningless”.

Shane Huntley jumped into the fray on Twitter, and hinted at the backstory behind the vague warning. There are two possibilities that really make sense here. The first is that exploits have been found for sale somewhere, like a hacker forum. It’s not always obvious if an exploit has indeed been sold to someone using it. The other possibility given is that when Google was notified about the active exploit, there was a requirement that certain details not be shared publicly. So next time you see a big organization like Google hedge their language in an obvious and seemingly unhelpful way, it’s possible that there’s some interesting situation driving that language. Time will tell.

The Patch Gap

The term has been around since at least 2005, but it seems like we’re hearing more and more about patch gap problems. The exact definition varies, depending on who is using the term, and what product they are selling. A good working definition is the time between a vulnerability being public knowledge and an update being available to fix the vulnerability.

There are more common reasons for patch gaps, like vulnerabilities getting dropped online without any coordinated disclosure. Another, more interesting cause is when an upstream problem gets fixed and publicly announced, and it takes time to get the fix pulled in downstream. The example in question this week is Safari, and a fix in upstream WebKit. The bug is a type confusion in the new AudioWorklets feature, which provides an easy way to do audio processing in a background thread. When initializing a new worker thread, the programmer can use their own constructor to build the thread object. The function that kicks off execution doesn’t actually check that it’s been given a proper object type, and simply casts whatever object it receives to the expected type. Code is then executed as if the object were correct, usually leading to a crash.
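For anyone who hasn’t run into type confusion before, here is a loose analogy in Python. It is not WebKit’s code. The two 8-byte layouts, the handler table, and every name in it are invented for illustration. The idea is simply that the same bytes mean something very different depending on which type the code assumes they are.

```python
import struct

# Hypothetical 8-byte layouts; real WebKit objects look nothing like this.
WORKLET_THREAD = struct.Struct("<II")  # (thread_id, handler_index)
UNRELATED = struct.Struct("<Q")        # some other object that is also 8 bytes

HANDLERS = ("process_audio", "shutdown")


def start_unchecked(raw):
    """Treat the bytes as a worklet thread without checking what they are."""
    _thread_id, handler_index = WORKLET_THREAD.unpack(raw)
    if handler_index < len(HANDLERS):
        return HANDLERS[handler_index]
    # In native code there is no such bounds check; this is where it goes wrong.
    return "crash: handler index %d out of range" % handler_index


def start_checked(kind, raw):
    """Same entry point, but refuse anything that isn't the expected type."""
    if kind != "worklet_thread":
        raise TypeError("expected a worklet thread, got %r" % kind)
    return start_unchecked(raw)
```

Feeding `start_unchecked` a properly packed `WORKLET_THREAD.pack(7, 0)` returns `process_audio`, while `UNRELATED.pack(0xDEADBEEF00000001)` is happily reinterpreted and ends up with a nonsense handler index. The checked version rejects the second object before any of its bytes are interpreted, which is the kind of guard the description above says was missing.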

The bug was fixed upstream shortly after a Safari update was shipped. It’s thought that Apple ran with the understanding that this couldn’t be used for an actual RCE, and therefore hadn’t issued a security update to fix it. The problem there is that it is exploitable, and a PoC exploit has been available for a week. As is often the case, this vulnerability would need to be combined with at least one more exploit to overcome the security hardening and sandboxing built into modern browsers.

There’s one more quirk that makes this bug extra dangerous, though. On iOS devices, when you download a different browser, you’re essentially running Safari with a different skin pasted on top. As far as I know, there is no way to mitigate this bug on an iOS device. Maybe be extra careful about what websites you visit for a few days, until this gets fixed.