With the lessons learned from the Egyptian, Libyan, and Syrian revolutions, a few hardware and software hackers over at Lulzlabs have taken it upon themselves to create a free-as-in-beer and free-as-in-speech digital communications protocol that doesn’t depend on expensive, highly surveilled, commercial and government-controlled infrastructure. They call it Airchat, and it’s an impressive piece of work if you don’t care about silly things like ‘laws’.

Before going any further, we have to say yes, this does use amateur radio bands, and yes, they’re using (optional) encryption, and no, the team behind Airchat isn’t complying with all FCC and other amateur radio rules and regulations. Officially, we have to say the FCC (and similar agencies in other countries) have been granted the power – by the people – to regulate the radio spectrum, and you really shouldn’t disobey them. Notice the phrasing in that last sentence, and draw your own philosophical conclusions.

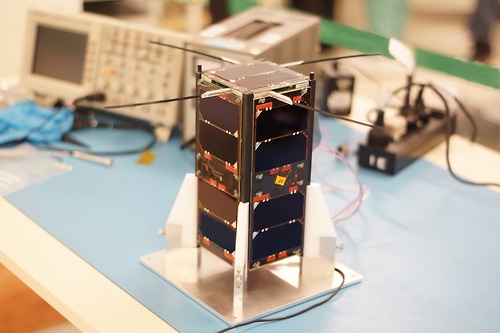

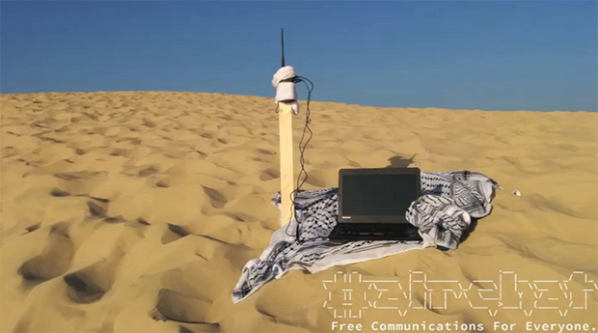

Airchat uses an off-the-shelf amateur transmitter (a Yaesu 897D in the example video below, although a $30 Chinese handheld radio will do) to create a mesh network with other Airchat users running the same software. The protocol is based on the Lulzpacket, a few bits of information that give the message error correction and a random code to identify the packet. Each node in this mesh network is defined by its ability to decrypt messages. There’s no hardware ID and no plain-text transmitter identification. It’s the mesh network you want if you’re under the thumb of an oppressive government.
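To make the "a node is whoever can decrypt the message" idea concrete, here is a minimal Python sketch. It is not Airchat's actual Lulzpacket format, which isn't specified here. The field sizes, the function names `build_packet` and `try_read_packet`, and the SHAKE-256 keystream are all assumptions made for illustration. A CRC32 also stands in for the real error correction, and it can only detect corruption, not repair it.

```python
import hashlib
import hmac
import secrets
import zlib

ID_LEN = 8    # hypothetical size of the random packet identifier
TAG_LEN = 16  # hypothetical size of the truncated authentication tag
CRC_LEN = 4   # CRC32 detects errors only; it is a stand-in for real error correction


def _keystream(key: bytes, packet_id: bytes, length: int) -> bytes:
    # Toy keystream derived with SHAKE-256; for illustration, not vetted cryptography.
    return hashlib.shake_256(key + packet_id).digest(length)


def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def build_packet(key: bytes, message: bytes) -> bytes:
    """Wrap a message as: random id | auth tag | ciphertext | CRC32."""
    packet_id = secrets.token_bytes(ID_LEN)
    ciphertext = _xor(message, _keystream(key, packet_id, len(message)))
    tag = hmac.new(key, packet_id + ciphertext, hashlib.sha256).digest()[:TAG_LEN]
    body = packet_id + tag + ciphertext
    return body + zlib.crc32(body).to_bytes(CRC_LEN, "big")


def try_read_packet(key: bytes, packet: bytes):
    """Return the plaintext if this key opens the packet, otherwise None."""
    if len(packet) < ID_LEN + TAG_LEN + CRC_LEN:
        return None
    body, crc = packet[:-CRC_LEN], packet[-CRC_LEN:]
    if zlib.crc32(body).to_bytes(CRC_LEN, "big") != crc:
        return None  # damaged in transit
    packet_id = body[:ID_LEN]
    tag = body[ID_LEN:ID_LEN + TAG_LEN]
    ciphertext = body[ID_LEN + TAG_LEN:]
    expected = hmac.new(key, packet_id + ciphertext, hashlib.sha256).digest()[:TAG_LEN]
    if not hmac.compare_digest(tag, expected):
        return None  # wrong key: this packet is not addressed to us
    return _xor(ciphertext, _keystream(key, packet_id, len(ciphertext)))
```

Nothing in the packet names a sender or a recipient. A station simply calls `try_read_packet` on everything it hears, and anything that comes back as `None` is either noise or traffic meant for someone holding a different key.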

Airchat has already been used to play chess with people 180 miles away, control a 3D printer over 80 miles, and share pictures and voice chats. It’s still a proof of concept, and the example use cases – NGOs working in Africa, disaster response, and expedition base camps – are noble enough that we can’t dismiss this entirely.
