In the 1966 science fiction movie Fantastic Voyage, medical personnel are shrunken to the size of microbes to enter a scientist’s body to perform brain surgery. Due to the work of this year’s winners of the Nobel Prize in Physics, laser tools now do work at this scale.

Arthur Ashkin won for his development of optical tweezers, which use a laser to grip and manipulate objects as small as a molecule. Gérard Mourou and Donna Strickland won for coming up with a way to produce ultra-short, high-intensity laser pulses, now used to perform millions of corrective laser eye surgeries every year.

Here is a look at these inventions, their inventors, and the applications which made them important enough to win a Nobel.

How Optical Tweezers Work

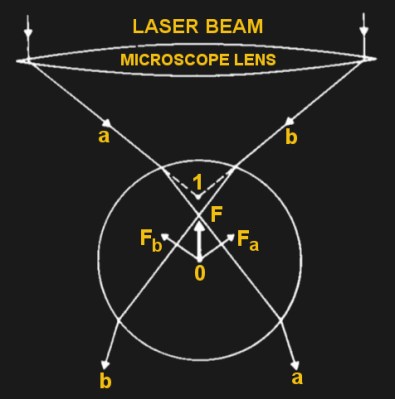

Here’s how optical tweezers work. Laser light passes through an objective lens, which focuses it to a point inside the particle to be held. The explanation for what happens next depends on the particle’s diameter.

If the diameter is significantly greater than the light’s wavelength then ray optics can be used to explain how it works. Due to refraction, the light changes direction as it passes from the surrounding medium, water for example, into the particle and vice versa. Light carries momentum and the change in direction implies a change in the momentum of the light. By conservation of momentum, the particle picks up an equal and opposite change, which produces a net force that moves it toward the focal point.

If the particle instead has a much smaller diameter than the light’s wavelength, then it can be treated as an electric dipole. The narrow area around the light’s focal point has a strong electric field which weakens as it gets further from that point, forming electric field gradients on both sides. These gradients move the particle toward the focal point.
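As a rough illustration of the dipole picture, the sketch below evaluates the commonly quoted Rayleigh-regime expression for the gradient force, F = (2π·n·a³/c)·((m²−1)/(m²+2))·dI/dx, across a Gaussian focus. The function names, the 50 nm particle radius, the 0.5 µm beam waist, the peak intensity and the refractive indices (water at about 1.33, polystyrene at about 1.59) are all assumptions chosen for illustration, not values from the article.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def gaussian_intensity(x_m, peak_w_m2, waist_m):
    """Intensity (W/m^2) of a Gaussian focus at transverse offset x_m."""
    return peak_w_m2 * math.exp(-2.0 * x_m**2 / waist_m**2)


def gradient_force(x_m, radius_m, peak_w_m2, waist_m,
                   n_medium=1.33, n_particle=1.59):
    """Rayleigh-regime gradient force (N) along x on a small sphere."""
    m = n_particle / n_medium  # relative refractive index
    contrast = (m**2 - 1.0) / (m**2 + 2.0)
    # Analytic derivative of the Gaussian intensity profile.
    d_intensity_dx = (-4.0 * x_m / waist_m**2) * gaussian_intensity(
        x_m, peak_w_m2, waist_m)
    return (2.0 * math.pi * n_medium * radius_m**3 / C
            * contrast * d_intensity_dx)


# Hypothetical values: 50 nm sphere, 0.5 um waist, 1e10 W/m^2 peak intensity.
for offset_nm in (-200, 0, 200):
    force_n = gradient_force(offset_nm * 1e-9, 50e-9, 1e10, 0.5e-6)
    print(f"offset {offset_nm:+5d} nm -> force {force_n:+.3e} N")
```

The sign of the force is opposite to the sign of the offset, so under these assumptions a particle that drifts to either side of the focus is pulled back toward it.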

However, in both cases there is also the force of the light on the particle itself to be considered, called a scattering force. Photons have momentum and as photons impact the particle, they impart some of that momentum to it. This causes the center of the particle to be slightly ahead of the focal point.
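To get a feel for the size of the forces involved, here is a back-of-the-envelope sketch. It assumes the usual estimate F = Q·n·P/c, where P is the laser power, n the refractive index of the surrounding medium, c the speed of light, and Q a dimensionless efficiency describing what fraction of the light’s momentum ends up on the particle. The 10 mW power and Q = 0.1 are hypothetical illustration values, not figures from the article.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def photon_momentum_force(power_w, medium_index=1.33, efficiency=1.0):
    """Force (N) from light of the given power handing over a fraction
    `efficiency` of its momentum to a particle."""
    return efficiency * medium_index * power_w / C


# Hypothetical example: 10 mW beam in water, 10% momentum transfer.
force_n = photon_momentum_force(0.010, efficiency=0.1)
print(f"{force_n * 1e12:.1f} pN")
```

Under these assumptions the result is a few piconewtons: tiny by everyday standards, but comparable to the forces at work on micron-sized beads and single molecules.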

The result is something resembling the tractor beams of science fiction but on a much smaller scale.

Arthur Ashkin: The Man Behind The Tweezers

Arthur Ashkin, born 1922, received a B.S. degree in physics from Columbia University and his Ph.D. from Cornell. During World War II he worked on magnetrons for U.S. military radar systems and met Hans Bethe and Richard Feynman through his brother, Julius, who worked on the Manhattan Project.

In 1952, he went to work for Bell Labs where he remained, retiring in 1992. There he worked in the area of microwaves until 1961, when he switched to laser research.

That radiation could apply pressure was shown experimentally in the early 1900s but the effect was weak. Lasers, however, allowed for more powerful effects and in 1970, Ashkin showed that laser light focused into a narrow beam could produce scattering forces which moved small dielectric particles in both air and water. He also showed that particles could be drawn transversely to the center of the beam due to surrounding intensity gradients. These discoveries led to his using two opposing beams to trap particles and then a single-beam effect wherein the force of the beam on the particle was countered by the force of gravity. Finally, in 1986 he demonstrated the all-optical single-beam trap now known as the optical tweezer.

Using Optical Tweezers

Within a few years of their invention, Ashkin and other researchers had used optical tweezers to trap and manipulate neutral atoms, viruses, bacteria, yeast cells, human red blood cells, and more. They’ve been used for biological research extensively since.

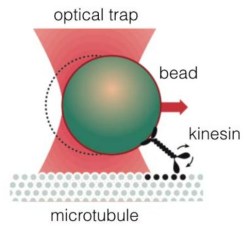

One method for manipulating molecules is to attach a micron-sized polystyrene or silica bead to the molecules and manipulate the easily trapped bead. A good sample application of this is understanding the kinetics and mechanics of molecular motors, which convert chemical energy into linear or rotational motion. The motor protein kinesin, for example, moves along microtubule filaments within cells to transport cellular cargo. Attaching a bead to kinesin allows its step size and the force it produces to be measured.

At times, dual traps are used for holding on to opposite ends of a protein or DNA molecule. Early tests of DNA included measuring elasticity and relaxation and inducing sharp forces to transition to an extended form of DNA.

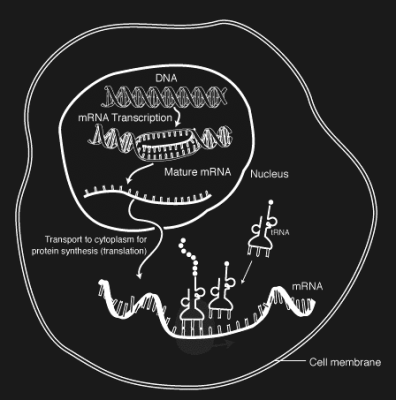

Resolution has improved such that single-step movement of the motor RNA polymerase along DNA base pairs can be followed. Motor RNA polymerase copies DNA into mRNA as one step in producing proteins within cells. Further processing of the mRNA by ribosomes has also been followed using optical tweezers.

It’s for the invention of the optical tweezers and their application to the study of biological systems that Arthur Ashkin received the 2018 Nobel Prize in Physics.

High-Intensity, Ultra-Short Optical Pulses

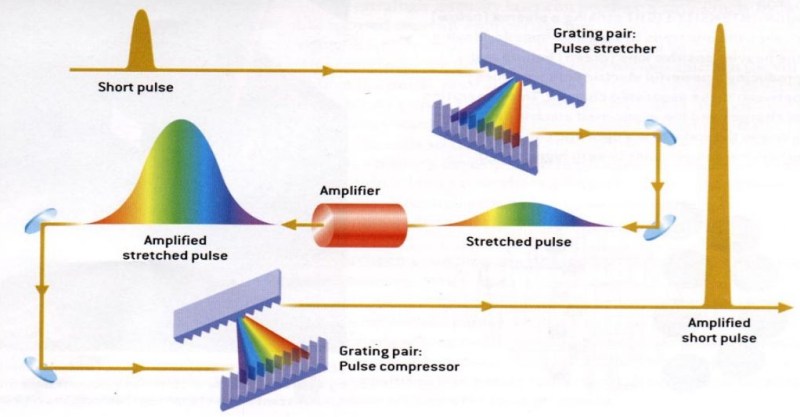

That prize was shared with Gérard Mourou and Donna Strickland for devising, in 1985, a method to generate ultra-short laser pulses at high intensity. The technique is called chirped pulse amplification (CPA). Prior to this, ultra-short pulses could be produced relatively inexpensively, but increasing their intensity was hampered by the fact that doing so damaged the amplifier and optical components. Getting around this problem involved using a large beam diameter, but this increased cost and meant firing only a few shots per day in order to give the amplifier time to cool down.

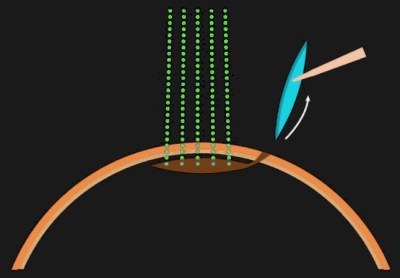

The solution which Mourou and Strickland came up with, and which is shown in the diagram, was to first stretch the short pulse in time by several orders of magnitude. This lowered its peak power enough to allow amplification without damaging the amplifier. The wide, amplified pulse was then compressed again to its original duration, giving it a much higher peak power, in the terawatts.
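The arithmetic behind stretch, amplify, compress can be sketched with a simple estimate of peak power as pulse energy divided by pulse duration (treating the pulse as rectangular). The seed energy, pulse duration, stretch factor and gain below are hypothetical round numbers picked to be consistent with the "nanojoules to joules" and "terawatts" scales mentioned in this article; they are not the values from the original experiment.

```python
def peak_power_w(energy_j, duration_s):
    """Rough peak power (W) of a pulse, treating it as rectangular."""
    return energy_j / duration_s


# Hypothetical illustration values.
SEED_ENERGY_J = 1e-9       # nanojoule seed pulse
SEED_DURATION_S = 100e-15  # 100 femtoseconds
STRETCH_FACTOR = 1e4       # stretched by four orders of magnitude
GAIN = 1e9                 # nanojoules up to joules

stretched_duration_s = SEED_DURATION_S * STRETCH_FACTOR
amplified_energy_j = SEED_ENERGY_J * GAIN

stages = {
    "seed": peak_power_w(SEED_ENERGY_J, SEED_DURATION_S),
    "stretched": peak_power_w(SEED_ENERGY_J, stretched_duration_s),
    "amplified": peak_power_w(amplified_energy_j, stretched_duration_s),
    "compressed": peak_power_w(amplified_energy_j, SEED_DURATION_S),
}

for name, power_w in stages.items():
    print(f"{name:>10}: {power_w:.1e} W")
```

With these numbers the amplifier only ever handles about a gigawatt of peak power, while the compressed output reaches about ten terawatts. Amplifying the unstretched pulse directly would have put that full ten terawatts through the amplifier.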

Strickland And Mourou: The Laser Jock And The Teacher

Donna Strickland is the first woman to win the Nobel Prize in Physics since Maria Goeppert-Mayer in 1963; the only other was Marie Curie in 1903.

Strickland would fit right in here with Hackaday readers. While attending McMaster University in Canada, she and her fellow grad students referred to themselves as “laser jocks”. She feels this is because they were good with their hands.

As an experimentalist you need to understand the physics but you also need to be able to actually make something work, and the lasers were very finicky in those days.

After graduating, she moved to the US to get her Ph.D. at the University of Rochester.

Gérard Mourou is a French scientist who received his Ph.D. from Pierre and Marie Curie University in Paris in 1973. In 1977, he became a professor at the University of Rochester, where he met Strickland.

The inspiration for CPA came from the long radio waves of radar, but it took a few years for the two of them to make it work with light. At the time, they stretched the light by passing it through a long fiber-optic cable. On one occasion, when working with a 2.5 km (1.55 mile) cable, no light came out the other end; there was a break in the cable somewhere. They settled for 1.4 km (0.87 miles) instead. By 1985, they got it to work and published their paper. Over the next few years, the fiber was replaced with a pair of diffraction gratings and the amplified pulse energy was increased from nanojoules to joules.

Applications For CPA

CPA has found a number of uses, including probing the activities of electrons. This is made possible by sufficiently powerful femtosecond and attosecond lasers.

One development still in progress but which will have medical application someday is laser plasma acceleration, something which is currently the domain of radio frequency (RF) accelerators. It works by injecting electrons into a plasma channel and using a laser to accelerate them down the channel. Recently a petawatt-class laser at Lawrence Berkeley National Laboratory accelerated electrons to 4.2 GeV in a distance of just 9 cm.
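To put the Berkeley figure in perspective, the sketch below works out the average accelerating gradient it implies and how long a conventional machine would need to be to reach the same energy. The 50 MV/m figure used for an RF accelerator is an assumed, order-of-magnitude value for comparison only; it does not come from this article and real machines vary.

```python
def average_gradient_gev_per_m(energy_gain_gev, length_m):
    """Average accelerating gradient in GeV per metre."""
    return energy_gain_gev / length_m


# Figures quoted above: 4.2 GeV gained over 9 cm.
plasma_gradient = average_gradient_gev_per_m(4.2, 0.09)

# Assumption for comparison: an RF accelerator at roughly 50 MV/m.
ASSUMED_RF_GRADIENT_GEV_PER_M = 0.05
rf_length_m = 4.2 / ASSUMED_RF_GRADIENT_GEV_PER_M

print(f"plasma stage: {plasma_gradient:.1f} GeV/m")
print(f"RF length for the same energy: {rf_length_m:.0f} m")
```

That works out to roughly 47 GeV per metre for the plasma stage, against tens of metres of RF structure under the assumed gradient.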

But the application in which CPA has had the biggest effect is laser eye surgery, of which millions are performed every year. The very brief cuts minimize damage due to heating and, of course, the extreme precision is also of benefit.

Noble Nobel Winners

According to Alfred Nobel’s will in which he initiated the foundation of the prize, it was to reward those who serve humanity. And so while we can talk whimsically about how this year’s winners produced inventions which have made science fiction a reality, they were selected for the applications in which those inventions have found use. For our intricate understanding of the astonishing workings within cells and for the millions who can now see better, we think this year’s prize was well won.

I am excited to hear of the many applications for lasers. Sounds like the tweezers could maybe remove the druzy inside my eyeball!

Are we just talking about holding something in place for a brief moment or can the scientist hold it indefinitely? If the latter then how much heating does it cause? Actually, I guess that question holds either way. I’m used to using lasers to cut and/or burn things not hold them!

Basically no explanation of CPA relative to the explanation given for optical tweezers. It appears monochromatic light is heading into the first grating, which wouldn’t obviously produce polychromatic light output, and why the output would be stretched/smeared in time is also ignored here (key details seemingly).

It’s not monochromatic. Femtosecond lasers produce broadband pulses (the shorter a pulse is in the time domain, the broader it has to be in the frequency domain). Then the first diffraction grating spreads out the different wavelength components in space, and the second one recollimates them. The trick is that the pair of gratings are at an angle, so the different wavelength components have different distances to travel before they’re collimated together again. This is what stretches the pulse.

Essentially the CPA designs ensure that everywhere in the optical path, peak power density is relatively low, by spreading out the energy over a long time. The only part of the path that sees the full intensity of the final pulse is the compressor at the end: usually in very high-power systems the compressor is in a vacuum chamber, because a single speck of dust would absorb enough light to cause a catastrophic failure. Also at very high power, the laser is strong enough to ionize the air which leads to scattering of the beam and significant losses.

A short pulse of light is ALWAYS polychromatic. The only way you can get truly monochromatic light is if the source is on forever; for finite pulses, the source has a fixed bandwidth that is related to the pulse length. You can see this by just computing the Fourier transform of the electric field: the only way to get a single frequency is if the signal is a sinusoid (going from minus infinity to infinity along the time axis!). Any different signal form is always a mixture of multiple frequencies.

Specifically for short (picosecond) pulses the model is usually that of a sinusoid (with the base frequency of the laser), multiplied with a Gaussian. In the Fourier domain, this corresponds to a Dirac peak convolved with a Gaussian. The end result is a Gaussian frequency distribution, centered at the base frequency.

I should add that this is the uncertainty principle in action: you simply cannot have an electromagnetic wave that is strongly localized in time AND frequency…
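A quick numeric check of the time/frequency trade-off described above, for anyone who wants numbers. It assumes a transform-limited Gaussian pulse, for which the product of the intensity FWHM duration and the FWHM bandwidth is 2·ln(2)/π, about 0.441. The 800 nm center wavelength is an assumption picked only to convert the bandwidth into nanometres, and the function names are made up for this sketch.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s

# Time-bandwidth product of a transform-limited Gaussian pulse (FWHM values).
GAUSSIAN_TBP = 2.0 * math.log(2.0) / math.pi


def min_bandwidth_hz(duration_fwhm_s):
    """Smallest possible FWHM bandwidth (Hz) for a Gaussian pulse."""
    return GAUSSIAN_TBP / duration_fwhm_s


def wavelength_span_m(bandwidth_hz, center_wavelength_m):
    """Approximate wavelength spread (m) for a given frequency bandwidth."""
    return center_wavelength_m**2 * bandwidth_hz / C


CENTER_WAVELENGTH_M = 800e-9  # assumed center wavelength

for duration_s in (1e-12, 100e-15, 10e-15):
    bandwidth = min_bandwidth_hz(duration_s)
    span_nm = wavelength_span_m(bandwidth, CENTER_WAVELENGTH_M) * 1e9
    print(f"{duration_s * 1e15:6.0f} fs -> {bandwidth / 1e12:6.2f} THz, "
          f"about {span_nm:5.1f} nm")
```

Each tenfold shortening of the pulse needs ten times the bandwidth, which is why a femtosecond pulse cannot be monochromatic and why a grating can spread it out.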

Thank you, very interesting

Thanks Wolfgang and Louis, perfect replies. Fits exactly with my understanding of things like going up in slew rate with common electronic amplifiers.

“Recently a petawatt-class laser at Lawrence Berkeley National Laboratory accelerated electrons to 4.2 GeV in a distance of just 9 cm.”

Supercolliders shrunk down to a desktop, without the supercollider budget.

Too bad this couldn’t be used as a practical approach to a trash compactor on one’s desktop, e.g. beam the paper wad & watch it be compacted down to size.

One of the reasons laser based electron accelerators have not gone mainstream is that, so far, the acceleration is energy dispersive ( and the beam physically broadens / defocuses ), and it is hard to synchronize cascading accelerators for far higher acceleration. There are actually a number of research groups that have been working in this area for over a decade? UCLA has been somewhat prominent ( ?Chan Joshi )

Not sure on the budget… There’s still the petawatt laser… And that one won’t take 9cm….

Remember it’s a collision of electrons, you still need a long distance to accelerate hadrons.

Some of the explanation of Laser Trapping “by light pressure” is possibly bogus. Fundamentally laser trapping creates an ambient pressure / density gradient in the media / water etc and this is what traps the particles, not per se the light itself. The pressure of light is insignificant, the heating by focused light can be huge.

An alternative means of laser induced trapping for cell manipulation was devised by Dr. Rod Taylor of the Canadian NRC National Research Council in the 90s. Essentially an air bubble was formed / held at the end of a silica fiber optic and the bubble trapped was able to manipulate cells by meniscus attraction to cells. You can see some of this here

http://mark-nano.blogspot.com/2005/12/fiber-based-laser-tweezers-nsom-probes.html

The bubble is formed by a kind of degassing in proximity to the end of the fiber ( laser emitting point ) and it is not per se functioning by laser pressure, but by reducing water density / degassing locally. The bubble was held on the end of the fiber in part by an etched divot embedded at the end of the fiber core ( etch selectivity where the wet etch used etched the fiber core faster than the cladding )

The methods used were a spinoff of a modestly novel means to fabricate NSOM AFM style probes. When the supply of the special fiber used to make wet etched ?20nm glass tips ran out, the biological laser bubble trapping method was developed using more common glass optical fibers. Rod published a bit on this, no commercial instrument was developed but it should have been a tool for biologists doing cell work. The softest means of holding / moving cells. And simple to homebrew with appropriate HF based etchants

I proof it twice (in the editor and then in the preview) before handing it off to an editor (not counting the multiple readings during final cleanup). The editor then proofs it again while editing (I don’t know their exact process). And then I read their changes so that I can avoid repeating whatever stupid mistakes they find and to pick up any tips. Despite all that, stuff still happens it seems.

I blame packet collisions and lossy data compression :-)

Man, we try. Steven is a _very_ conscientious writer. And I try to read as closely as possible when editing. That errors remain is on me — I edited this one.

It’s hard to catch everything, especially the the missing and doubled words that your brain automatically fills in. This is doubly true for a longer piece like this, where thousands of words are being written. (If there are two missing words, it’s 99.9% perfect!)

But getting the content right — not physics textbook, but not fuzzy either — is difficult, and is significantly more important. And I think Steven nailed it on this one.

Still, we try to avoid silly typos. What sentences do you mean in particular? I’ll go in and fix them.

(“Proofread” is one word. So is “anymore”.)

Not telling you how to do your job just a general comment on proofreading. Something that really helps me editing is reading for content and wording, and consciously verbalizing each word for proofreading. By verbalizing, even if you don’t actually say the word, but mouth each word individually, your brain will catch those errors instead of fixing them. It takes a little longer but if I do it by paragraph as I write then I usually end up with very few errors when editing.

Hmmm… Maybe reading the text with narrower screen widths would help too. I first read Elliot’s comment in the WordPress notifications, which is pretty narrow, and missed the “the the” but they stand out when reading them on a desktop’s wide screen. (No pun intended when I wrote the ‘the “the the”‘ above — pun intended.)

Another really neat use for lasers is in optical doppler cooling. Here is a good explanation of the technique…

https://www.youtube.com/watch?v=hFkiMWrA2Bc

Light DOES NOT EXERT PRESSURE. A laser hitting an object will create a hot side and cold side of the media surrounding. The differential temperature often will create a nanoscale density difference, which exerts a force. Nobel prize judges can be wrong technically. The Physics Nobel Judge misinterpreted a UC Berkeley Paper on a purported means to orient nanowires floating onto a substrate deposited on from a floating nanowire reactor (fluidized bed growth).

He could not see the claims of Berkeley were refuted in the very SEM Electro / micrograph included with the peer reviewed paper showing random orientation on the substrate despite claims otherwise, Hmmph. ( or in Swedish colloquial) the Physics Nobel Prize judge is a (stupid) Harry Simpleton with limited technical comprehension outside of his actual specialization ( MOCVD epitaxial crystal growth really ). Laser Tweezers merit a nobel prize, the popular interpretation of physics mechanism is bogus ( light exerts no useful pressure alone, the surrounding media does the yeomans force / pressure work )

FWIW I tried posting 2x prior here and never appeared. I am a physics process engineer in nano and microtechnology and have solved factory wide yield problems both at Intel and Motorola in spare time almost, 3 patents issued a few more filed. Folks with high formal credentials can make mistakes, or not solve problems tasked to do.

I proudly do not play in software, it is largely less interesting than physics / process engineering

Nanotech can be fun but on occasion inappropriate impractical or even irrelevant

https://en.wikipedia.org/wiki/Radiation_pressure