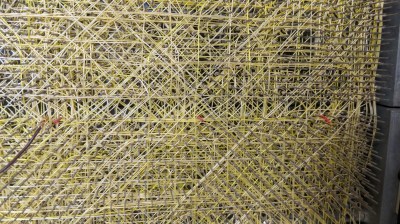

In the depths of Etsy and Pinterest is a fascinating, if tedious, artform. String art is the process of nailing pins in a board and wrapping thread around the perimeter to create shapes and shading. The most popular project in this vein is something like putting the outline of a heart, in string, in the shape of your home state. Something like that, at least.

While this artform involves about as much effort as pallet wood furniture, there is an interesting computational aspect of it: you can create images with string art, and doing this is a very, very hard problem to solve with an algorithm. Researchers at TU Wien have brought out the best that string art has to offer. They’ve programmed an industrial robot to create portraits out of string.

The experimental setup for this is about as simple as it gets. It’s a circular frame studded with 256 hooks around the perimeter. An industrial robot arm takes a few kilometers of thread, winds it around one of these hooks, then travels to another hook. Repeat that thousands and thousands of times, and you get a portrait of Ada Lovelace or Albert Einstein.
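To get a feel for the size of the search space, here is a quick back-of-the-envelope count of how many distinct straight hook-to-hook segments a 256-hook frame allows. It assumes each hook is a single point and ignores which side of the hook the thread passes, so treat it as a lower bound rather than a description of the real rig.

```python
import math

N_HOOKS = 256  # hook count given in the article

# Every unordered pair of hooks defines one possible straight string segment.
possible_segments = math.comb(N_HOOKS, 2)

print(possible_segments)  # 32640
```

A portrait is an ordered walk through thousands of these segments, which is why trying every combination is out of the question.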

The real trick here is the algorithm that takes an image and translates it into the paths the string will take. This is an NP-hard problem, but it is a surprisingly well-studied one. The first autorouters (the things you should never trust to route traces between the packages on your PCB) were created for wire-wrapped computers. Here, computers would find the shortest path between whatever pins had to be connected together. There were, of course, limitations: pins could only have so many connections on them thanks to the nature of wire wrapping, and you couldn’t have one gigantic mass of wires for a parallel bus. The first autorouters were string art algorithms, only in reverse.
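For a rough idea of what "image in, string path out" can look like, here is a minimal sketch of a simple greedy heuristic. This is not the TU Wien algorithm, just a common hobbyist approach: from the current hook, pick the next hook whose connecting line covers the darkest remaining pixels, lighten those pixels, and repeat. All function names and parameters (`hook_positions`, `line_pixels`, `greedy_string_path`, `fade`) are made up for illustration, and the image is assumed to be a square grid of darkness values from 0 (white) to 1 (black).

```python
import math

def hook_positions(n_hooks, size):
    """Place n_hooks evenly on a circle inscribed in a size x size pixel grid."""
    c = (size - 1) / 2.0  # center and radius of the inscribed circle
    return [(c + c * math.cos(2 * math.pi * k / n_hooks),
             c + c * math.sin(2 * math.pi * k / n_hooks))
            for k in range(n_hooks)]

def line_pixels(p, q):
    """Pixels (x, y) touched by the segment p -> q, found by dense sampling."""
    steps = int(max(abs(q[0] - p[0]), abs(q[1] - p[1]))) + 1
    pixels = []
    for i in range(steps + 1):
        t = i / steps
        px = (round(p[0] + t * (q[0] - p[0])), round(p[1] + t * (q[1] - p[1])))
        if not pixels or pixels[-1] != px:  # skip consecutive duplicates
            pixels.append(px)
    return pixels

def greedy_string_path(darkness, n_hooks=32, n_lines=50, fade=0.5):
    """Return a list of hook indices chosen greedily (assumed heuristic)."""
    size = len(darkness)
    residual = [row[:] for row in darkness]  # darkness still left to cover
    hooks = hook_positions(n_hooks, size)
    current = 0
    path = [current]
    for _ in range(n_lines):
        best, best_score = None, 0.0
        for cand in range(n_hooks):
            if cand == current:
                continue
            pix = line_pixels(hooks[current], hooks[cand])
            # Score a candidate by the mean remaining darkness under its line.
            score = sum(residual[y][x] for x, y in pix) / len(pix)
            if score > best_score:
                best, best_score = cand, score
        if best is None:  # nothing dark left to cover
            break
        for x, y in line_pixels(hooks[current], hooks[best]):
            residual[y][x] *= 1 - fade  # one pass of thread lightens the target
        current = best
        path.append(current)
    return path

# Usage: a 21 x 21 image with one dark horizontal line through the middle.
image = [[1.0 if y == 10 else 0.0 for x in range(21)] for y in range(21)]
path = greedy_string_path(image, n_hooks=4, n_lines=3)
```

A greedy pass like this only ever looks one string ahead, which is exactly why it falls short of a real solution to the underlying optimization problem.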

You can take a look at the complete publication here.

You’ll also find prior art (tee-hee) in our own pages. Here is an artist doing it by hand, and here’s a machine to do it for you if you’re lazy. We’ve even seen further work on the underlying algorithm on Hackaday.io.