Harvesting delicate fruit and vegetables with robots is hard, and increasingly we humans no longer want to do these jobs ourselves. The pressure to find engineering solutions is intense, and more and more machines of different shapes and sizes have been emerging in an attempt to alleviate the problem. On top of that, crops differ greatly from one another, so a strawberry-picking machine, for example, cannot be used to harvest lettuce.

A team from Cambridge University, UK, recently published the details of their lettuce-picking machine. The write-up is in a nice easy-to-read style and is packed full of useful practical information. Well worth a read!

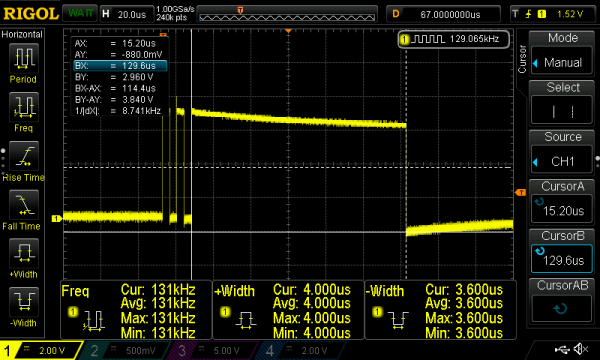

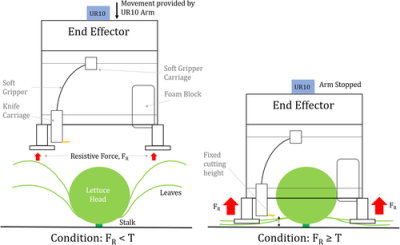

The machine uses YOLOv3 detection and classification networks, first to get localisation coordinates for each lettuce and then to check whether it’s ready for harvest or diseased. A standard UR10 robotic arm then positions the harvesting mechanism over the lettuce, using force feedback through the arm joints to detect when it hits the ground. A pneumatically actuated blade then attempts to cut the stem at exactly the right height below the lettuce head, in order to satisfy the very exacting requirements of the supermarkets.
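To make that sequence a little more concrete, here is a minimal Python sketch of two of the decisions described above: picking which detected lettuces to harvest, and spotting ground contact from force readings. This is not the team's code. The `Detection` class, the `"ready"` and `"diseased"` labels, the confidence cut-off and the force threshold are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Detection:
    """One lettuce found by the vision stage (hypothetical structure)."""
    x: float           # centre of the bounding box in the image, pixels
    y: float
    label: str         # assumed classifier output: "ready" or "diseased"
    confidence: float  # classifier score between 0 and 1


def select_targets(detections: Sequence[Detection],
                   min_confidence: float = 0.5) -> List[Detection]:
    """Keep only lettuces classified as ready with enough confidence."""
    return [d for d in detections
            if d.label == "ready" and d.confidence >= min_confidence]


def find_ground_contact(force_samples: Sequence[float],
                        threshold_newtons: float) -> Optional[int]:
    """Return the index of the first force reading at or above the
    threshold, or None if the arm never appears to touch the ground."""
    for index, force in enumerate(force_samples):
        if force >= threshold_newtons:
            return index
    return None


if __name__ == "__main__":
    # Made-up example data, not measurements from the real machine.
    seen = [
        Detection(120.0, 80.0, "ready", 0.92),
        Detection(310.0, 95.0, "diseased", 0.88),
        Detection(500.0, 70.0, "ready", 0.31),
    ]
    print(select_targets(seen))
    print(find_ground_contact([0.4, 0.9, 11.5, 28.0], threshold_newtons=10.0))
```

On a real arm the force values would come from the robot controller and the threshold would need tuning for soil conditions; the sketch only shows the shape of the logic.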

Rather surprisingly, the main control hardware is just a standard laptop, which handles two consumer-grade USB cameras and achieves a combined detection and classification time of about 0.212 seconds. The software is ROS (Robot Operating System) with custom nodes written in Python by members of the team.
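For a feel of what 0.212 seconds means in practice, the quick calculation below converts it into a frame rate. It assumes the figure is the time to process a single image and that images are handled one after another, neither of which is spelled out above; the function name is ours.

```python
def frames_per_second(seconds_per_frame: float) -> float:
    """Convert a per-frame processing time into a frame rate."""
    if seconds_per_frame <= 0:
        raise ValueError("seconds_per_frame must be positive")
    return 1.0 / seconds_per_frame


# Combined detection and classification time quoted above.
VISION_TIME_S = 0.212

if __name__ == "__main__":
    print(f"{frames_per_second(VISION_TIME_S):.2f} frames per second")
```

Under those assumptions the vision stage works out to a little under five frames per second, which suggests the laptop's image processing is unlikely to be the slowest part of each harvesting cycle.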

Although the machine is slow and under-powered, we were very impressed that it seems to work quite well. The project has been ongoing for several years now, and the machine has been rebuilt 16 times! Machines of this type are currently (2019) very much in their infancy, and we can expect to see many more attempts at cracking these difficult engineering tasks in the next few years.

We’ve covered some solutions before, including: Weedinator, an autonomous farming ‘bot, MoAgriS, an indoor farming rig, a laser-firing fish-lice remover, an Aussie farming robot, and of course the latest and greatest from FarmBot.

Video after the break:
