R/C cars can be tons of fun, but sometimes the fun runs out after a while. [Gaurav] got bored of steering his R/C car around with its remote, so he built an interface that lets him control the car using two different motion-detecting devices.

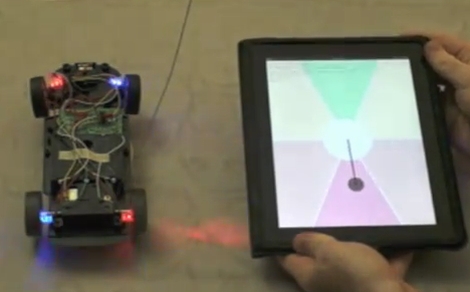

He built an HTML5 application for his iPad that lets him steer the car around. As you can see in the video below, the application uses the iPad’s tilt sensor to move a guide marker around the screen, and it activates the car’s motors and steering depending on where the marker ends up.
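The write-up doesn’t spell out how the marker’s position becomes motor and steering commands. One plausible approach, sketched here under our own assumptions, is to normalize the marker position to the range -1..1 on each axis, with (0, 0) at the screen centre, and to treat anything outside a small dead zone as a press of the matching button on the remote. The function name, the dead-zone value, and the command names are all hypothetical and not taken from [Gaurav]’s code.

```python
from typing import FrozenSet

# Hypothetical command names, one per button on the car's remote.
FORWARD, BACKWARD, LEFT, RIGHT = "forward", "backward", "left", "right"


def marker_to_commands(x: float, y: float, dead_zone: float = 0.2) -> FrozenSet[str]:
    """Map a guide-marker position to the set of remote buttons to press.

    x and y are assumed to be normalized so that (0, 0) is the screen
    centre, x grows to the right and y grows upward, each within [-1, 1].
    A position inside the dead zone on an axis presses nothing on that axis.
    """
    pressed = set()
    # Vertical axis selects throttle direction.
    if y > dead_zone:
        pressed.add(FORWARD)
    elif y < -dead_zone:
        pressed.add(BACKWARD)
    # Horizontal axis selects steering direction.
    if x > dead_zone:
        pressed.add(RIGHT)
    elif x < -dead_zone:
        pressed.add(LEFT)
    return frozenset(pressed)


# Marker pushed up and to the left: drive forward while steering left.
print(sorted(marker_to_commands(-0.5, 0.8)))  # ['forward', 'left']
```

The dead zone keeps the car still while the iPad is held roughly level, so small hand tremors don’t twitch the motors.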

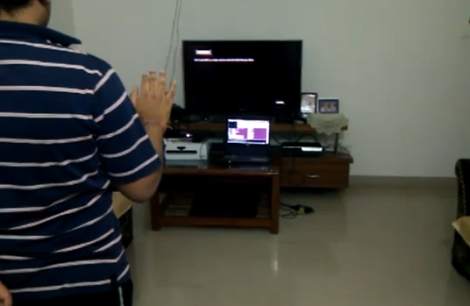

The second steering method he devised uses his Kinect sensor to track his movements. His hand gestures are mapped to a set of virtual zones similar to those the iPad app uses. When he moves his hands through these zones, the Arduino triggers the car’s remote just as it does with the iPad.
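To illustrate the virtual-zone idea, here is a minimal hit-testing sketch. It assumes the skeleton tracker reports hand and shoulder positions in metres in a 2D plane facing the user, and it measures each hand relative to the shoulder centre so the zones follow the user around the room. The `Zone` class, the box layout, and `hands_to_commands` are hypothetical; the original C# application may carve up the space quite differently.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass(frozen=True)
class Zone:
    """An axis-aligned box in front of the user that stands for one button."""
    command: str
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


# Hypothetical layout, in metres relative to the shoulder centre
# (x toward the user's right, y upward).
ZONES: List[Zone] = [
    Zone("forward", -0.3, 0.3, 0.25, 0.6),
    Zone("backward", -0.3, 0.3, -0.6, -0.25),
    Zone("left", -0.7, -0.35, -0.2, 0.2),
    Zone("right", 0.35, 0.7, -0.2, 0.2),
]


def hands_to_commands(hands: List[Tuple[float, float]],
                      shoulder: Tuple[float, float]) -> Set[str]:
    """Return the commands whose zones contain at least one tracked hand."""
    sx, sy = shoulder
    pressed = set()
    for hx, hy in hands:
        # Express the hand relative to the body, not to the sensor.
        rx, ry = hx - sx, hy - sy
        for zone in ZONES:
            if zone.contains(rx, ry):
                pressed.add(zone.command)
    return pressed


# One hand held out to the left, the other raised above the shoulders.
print(sorted(hands_to_commands([(0.5, 1.4), (1.0, 1.8)], (1.0, 1.4))))
```

Because the result is a set of button names, the same downstream code can drive the Arduino whether the input came from the iPad or the Kinect.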

The actual remote control interface is achieved by wiring the car’s remote to an Arduino via a handful of opto-isolators. The Arduino is also connected to his computer over a serial port, where it waits for commands. For the iPad, a Python server on the computer receives commands from the HTML5 application and passes them along to the Arduino. The Kinect’s interface is slightly different: a C# application monitors his movements and sends commands directly to the serial port.
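The post doesn’t describe the serial protocol between the computer and the Arduino. As a sketch, assuming one byte per update with one bit per remote button (each bit driving one opto-isolator on the Arduino side), the host only needs to pack the pressed buttons into a bitmask and write it out. The bit assignments and function names below are hypothetical. With the pyserial library the port would typically be a `serial.Serial` object, but any object with a `write()` method works here, which lets the sketch run without hardware.

```python
import io
from typing import BinaryIO, Iterable, Set

# Hypothetical bit assignments: one bit per button on the car's remote,
# each of which the Arduino would drive through its own opto-isolator.
BUTTON_BITS = {
    "forward": 0b0001,
    "backward": 0b0010,
    "left": 0b0100,
    "right": 0b1000,
}


def encode_buttons(pressed: Iterable[str]) -> bytes:
    """Pack the pressed buttons into a single command byte."""
    mask = 0
    for name in pressed:
        mask |= BUTTON_BITS[name]  # raises KeyError for an unknown button
    # Never press two opposing buttons on the remote at once.
    for a, b in (("forward", "backward"), ("left", "right")):
        if mask & BUTTON_BITS[a] and mask & BUTTON_BITS[b]:
            raise ValueError(f"cannot press {a} and {b} together")
    return bytes([mask])


def decode_buttons(command: int) -> Set[str]:
    """Inverse of encode_buttons: recover the button names from a byte."""
    return {name for name, bit in BUTTON_BITS.items() if command & bit}


def send_command(port: BinaryIO, pressed: Iterable[str]) -> None:
    """Write one command byte to the serial port (or any writable stream)."""
    port.write(encode_buttons(pressed))


# An in-memory stream stands in for the serial port in this example.
fake_port = io.BytesIO()
send_command(fake_port, ["forward", "left"])
print(fake_port.getvalue())  # b'\x05'
```

Sending an empty set produces `b'\x00'`, which in this scheme releases every button, a convenient "stop" command if the Python server or the C# application loses track of the user.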

Check out the video below to see the car in action, and swing by his site if you are interested in grabbing some source code and giving it a try yourself.