Programming languages are generally defined as a more human-friendly way to program computers than using raw machine code. Within the realm of these languages there is a wide range of how close the programmer is allowed to get to the bare metal, which ultimately can affect the performance and efficiency of the application. One metric that has become more important over the years is that of energy efficiency, as datacenters keep growing along with their power demand. If picking one programming language over another saves even 1% of a datacenter’s electricity consumption, this could prove to be highly beneficial, assuming it weighs up against all other factors one would consider.

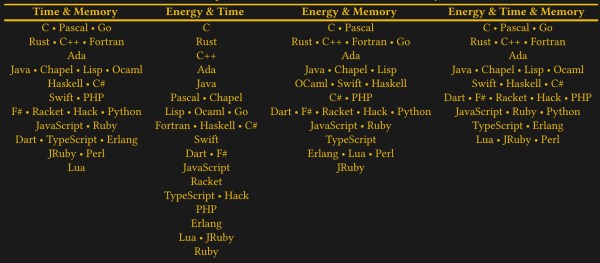

There have been some attempts over the years to put a number on the energy efficiency of specific programming languages, with a paper by Rui Pereira et al. from 2021 (preprint PDF) as published in Science of Computer Programming covering the running a couple of small benchmarks, measuring system power consumption and drawing conclusions based on this. When Hackaday covered the 2017 paper at the time, it was with the expected claim that C is the most efficient programming language, while of course scripting languages like JavaScript, Python and Lua trailed far behind.

With C being effectively high-level assembly code this is probably no surprise, but languages such as C++ and Ada should see no severe performance penalty over C due to their design, which is the part where this particular study begins to fall apart. So what is the truth and can we even capture ‘efficiency’ in a simple ranking?

Continue reading “Assessing The Energy Efficiency Of Programming Languages”