A recent edition of [Babbage’s] The Chip Letter discusses the obscurity of assembly language. He points out, and I think correctly, that assembly language is more often read than written, yet nearly all of them are hampered by obscurity left over from the days when punched cards had 80 columns and a six-letter symbol was all you could manage in the limited memory space of the computer. For example, without looking it up, what does the ARM instruction FJCVTZS do? The instruction’s full name is Floating-point Javascript Convert to Signed Fixed-point Rounding Towards Zero. Not super helpful.

But it did occur to me that nothing is stopping you from writing a literate assembler that is made to be easier to read. First, most C compilers will accept some sort of asm statement, and you could probably manage that with compile-time string construction and macros. However, I think there is a better possibility.

Reuse, Recycle

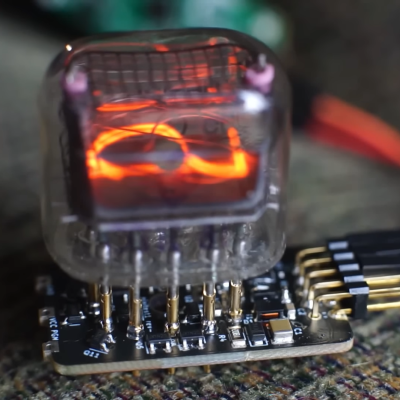

Since I sometimes develop new CPU architectures, I have a universal cross assembler that is, honestly, an ugly hack, but it works quite well. I’ve talked about it before, but if you don’t want to read the whole post about it, it uses some simple tricks to convert standard-looking assembly language formats into C code that is then compiled. Executing the resulting program outputs the desired machine language into a desired file format. It is very easy to set up, and in the middle, there’s a nice C program that emits machine code. It is not much more readable than the raw assembly, but you shouldn’t have to see it. But what if we started the process there and made the format readable?

At the heart of the system is a C program that lives in soloasm.c. It handles command line options and output file generation. It calls an external function, genasm with a single integer argument. When that argument is set to 1, it indicates the assembler is in its first pass, and you only need to fill in label values with real numbers. If the pass is a 2, it means actually fill in the array that holds the code.

That array is defined in the __solo_info instruction (soloasm.h). It includes the size of the memory, a pointer to the code, the processor’s word size, the beginning and end addresses, and an error flag. Normally, the system converts your assembly language input into a bunch of function calls it writes inside the genasm function. But in this case, I want to reuse soloasm.c to create a literate assembly language. Continue reading “A Literate Assembly Language”