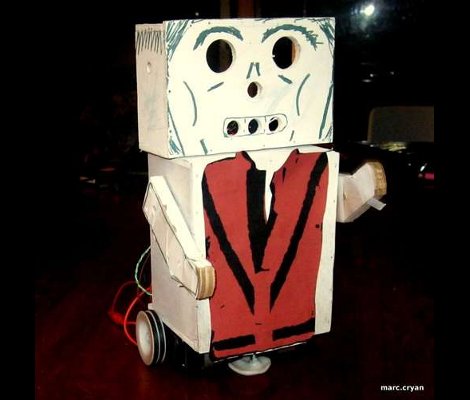

[Marc Cryan] built this little bugger, which he calls Wendell the Robot. But what good is an animatronic piece like this unless you do something fun with it? That’s why you can catch it matching [Michael Jackson’s] choreography from the Thriller music video in the clip after the break.

This is a ground-up build for [Marc]. He started by designing templates for each of the wood parts using Inkscape. After printing them out, he glued each to a piece of 1/4″ plywood and cut along the lines using a band saw. We don’t have a lot of experience with spray adhesive, but he mentions that the can should have directions for temporary adhesion so that the template can be removed after cutting.

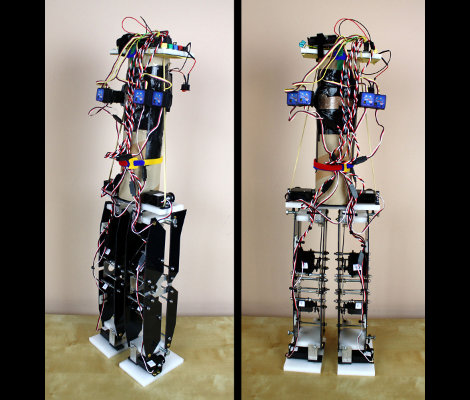

During assembly he makes sure to add servo horns so the motors are easy to connect later. Altogether he’s using five servos: two for the wheels, two for the arms, and one for the neck. A protoboard shield makes it easy to connect them to the Arduino, which serves as the controller.
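The post doesn't describe the firmware, so here is only a rough sketch of one way a dance routine for five servos could be laid out: a table of timed keyframes with linear interpolation between them. This is a host-side Python model of the logic, not [Marc]'s Arduino code. The channel names, angles, and timings are all made up for illustration, and the wheel values assume continuous-rotation servos where 90 means stop.

```python
from bisect import bisect_right

# Hypothetical channel names for the five servos mentioned in the post.
CHANNELS = ("left_wheel", "right_wheel", "left_arm", "right_arm", "neck")

# Hypothetical keyframes: (time in seconds, one angle in degrees per channel).
# Wheel channels stay at 90, assumed here to mean "stopped".
KEYFRAMES = [
    (0.0, (90, 90, 0, 0, 90)),
    (1.0, (90, 90, 120, 120, 60)),
    (2.0, (90, 90, 60, 60, 120)),
]


def pose_at(t, keyframes=KEYFRAMES):
    """Return a dict of servo angles at time t.

    Angles are linearly interpolated between the surrounding keyframes
    and held at the first or last keyframe outside the routine.
    """
    times = [frame[0] for frame in keyframes]
    if t <= times[0]:
        return dict(zip(CHANNELS, keyframes[0][1]))
    if t >= times[-1]:
        return dict(zip(CHANNELS, keyframes[-1][1]))

    # Index of the first keyframe strictly after t.
    i = bisect_right(times, t)
    (t0, a0), (t1, a1) = keyframes[i - 1], keyframes[i]
    frac = (t - t0) / (t1 - t0)
    angles = [round(x + (y - x) * frac) for x, y in zip(a0, a1)]
    return dict(zip(CHANNELS, angles))
```

On real hardware, a loop would call something like `pose_at` with the elapsed time and write each angle out to its servo. Keeping the routine in a table means the moves can be retimed to the music without touching the playback logic.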