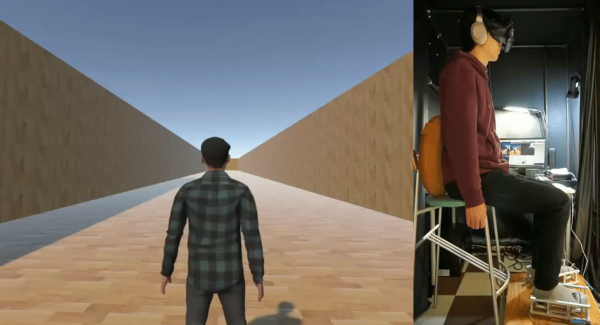

It’s not a jailbreak, but [basti564]’s Oculess software nevertheless makes it possible to remove telemetry and account dependencies from Facebook’s Oculus Quest VR headsets. These devices cannot normally be used without a valid Facebook account (or a legacy Oculus account in the case of the original Quest), so the ability to flip any kind of disconnect switch without bricking the hardware is a step forward, even if there are a few caveats to the process.

To be clear, the Quest devices still require normal activation and setup via a Facebook account. But once that initial activation is complete, Oculess offers the option of disabling telemetry or completely disconnecting the headset from its Facebook account. Removing telemetry means that details about which apps are launched, how the device is used, and all other usage-related data are no longer sent to Facebook. Disconnecting logs the headset out of its account, but doing so means apps purchased from the store will no longer work, and neither will factory-installed apps like Oculus TV or the Oculus web browser.

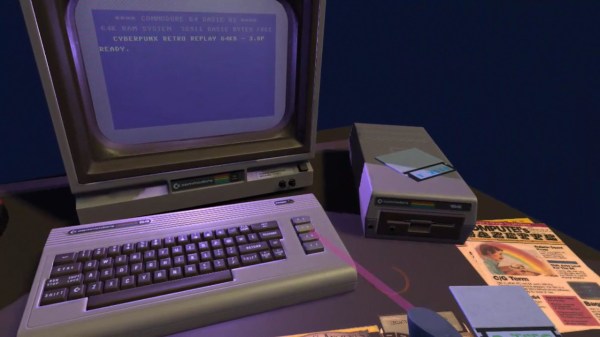

What will still work is the ability to sideload unsigned software, meaning applications that are neither controlled nor distributed by Facebook. Sideloading isn’t on by default; it’s enabled by putting the headset into Developer Mode (a necessary step for installing Oculess in the first place, by the way). There’s a fairly active scene around unsigned software for the Quest headsets, as evidenced by the existence of the alternate app store SideQuest.
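For readers who haven’t sideloaded before, here is a rough sketch of what the process can look like from a computer. It assumes the headset is in Developer Mode, connected over USB, and that Android’s `adb` tool is installed; the file name `example.apk` and the helper functions are made up for illustration and are not taken from the Oculess project.

```python
import shutil
import subprocess
from pathlib import Path


def build_install_command(apk_path, serial=None):
    """Return the adb argument list that would install apk_path."""
    cmd = ["adb"]
    if serial:
        # -s picks one device when several are attached
        cmd += ["-s", serial]
    # -r replaces an existing installation of the same package
    cmd += ["install", "-r", str(apk_path)]
    return cmd


def sideload(apk_path, serial=None):
    """Run the install; needs adb on PATH and an authorized headset."""
    if shutil.which("adb") is None:
        raise RuntimeError("adb not found on PATH")
    if not Path(apk_path).is_file():
        raise FileNotFoundError(apk_path)
    return subprocess.run(build_install_command(apk_path, serial), check=True)


if __name__ == "__main__":
    # Hypothetical file name; only prints the command, does not run it
    print(" ".join(build_install_command("example.apk")))
```

Building the command separately from running it makes it easy to check what would be sent to the headset before anything is actually installed.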

Facebook’s control over its hardware and walled-garden ecosystem continues to increase, but clearly there are people interested in putting the brakes on where they can. It’s possible the devices might see a full jailbreak someday, but even if so, what happens then?