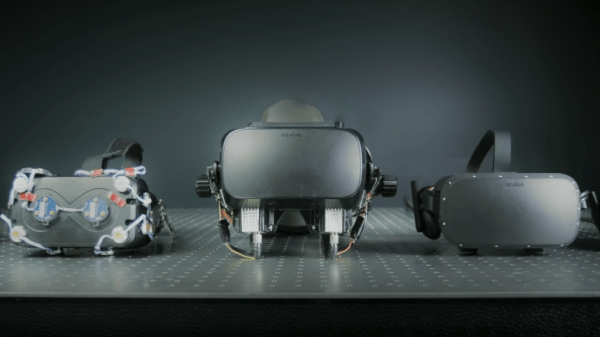

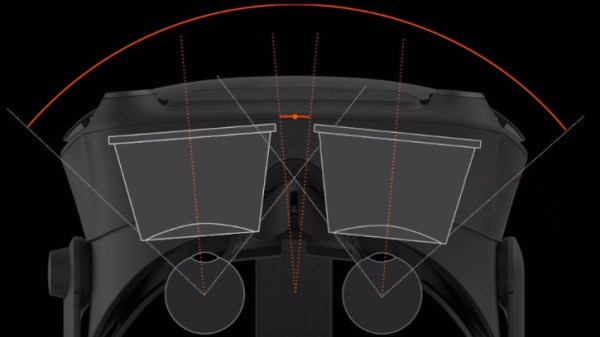

Want to see exactly what is inside the $500 (headset-only price) Valve Index VR headset that was released last summer? Take a look at this teardown by [Ilja Zegars]. Not only does [Ilja] pull the device apart, but he also identifies each IC and takes care to point out some of the more unusual hardware details, like the fancy diffuser on the displays and the multilayered lenses (which are much thinner than one might expect).

[Ilja] is no stranger to headset hardware design and, in addition to all the eye candy of high-res photographs, provides some insightful commentary to help make sense of them. The “tracking webs” pulled from the headset are an interesting bit: each is a long run of flexible PCB that connects four tracking sensors for each side of the head-mounted display back to the main PCB. These sensors are basically IR photodiodes, and they detect the regular laser sweeps emitted by the base stations of Valve’s Lighthouse tracking technology. [Ilja] also gives us a good look at the rod and spring mechanisms seen above that adjust the distance between the two screens.
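To give a feel for why a bare photodiode is enough for tracking, here is a minimal sketch of the timing idea behind sweep-based tracking. It assumes a simplified model in which a base station emits a sync pulse and then sweeps a laser at a constant rotation rate; the function name, the 60 Hz default, and the sync-pulse framing are illustrative assumptions, not details taken from the teardown or from Valve’s firmware.

```python
def sweep_angle_deg(t_sync_s, t_hit_s, rotation_hz=60.0):
    """Estimate the rotor angle at the moment the laser crossed a photodiode.

    Assumed model: the rotor turns at a constant rate, and t_sync_s marks
    the start of a rotation. rotation_hz=60.0 is an illustrative default.
    """
    period_s = 1.0 / rotation_hz
    dt = t_hit_s - t_sync_s
    if not 0.0 <= dt < period_s:
        raise ValueError("hit must fall within one rotation after the sync")
    # The fraction of a rotation that has elapsed maps linearly to angle.
    return 360.0 * dt / period_s


# A hit a quarter of a rotation after the sync corresponds to about 90 degrees.
angle = sweep_angle_deg(0.0, 1.0 / 240.0)
```

With one angle per sweep axis from each of several sensors at known positions on the headset, a pose can in principle be solved for, which is why the sensors are spread out across the flexible PCB runs.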

Want more? [Ilja] also has a gallery of high-resolution images available for those of you who fancy a closer look. Also, if you missed it, we covered an examination of the Index’s optical design as part of everything you probably didn’t know about field of view in head-mounted displays.

[via Twitter]

For his project, [Harris Shallcross] used a small 0.95″ diagonal 96×64 color OLED as the display. The lens is from a knockoff Google Cardboard headset, and is held in a 3D printed piece that slides along a wire rail to adjust focus. The display uses a custom font and is driven by an STM32 microcontroller on a small custom PCB, with an HM11 BLE module to receive data wirelessly. Power is provided by a rechargeable lithium-ion battery with a boost converter. An Android app handles sending small packets of data over Bluetooth for display. The prototype software handles display of time and date, calendar, BBC news feed, or weather information.
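As a rough illustration of why sending small packets of text suits this kind of display, the sketch below compares the size of a full frame with the size of a typical BLE payload. The 16 bits per pixel, the 20-byte payload, and the `chunk_message` helper are assumptions made for the example; the project’s actual packet format is not described here.

```python
WIDTH, HEIGHT = 96, 64      # display resolution from the write-up
BYTES_PER_PIXEL = 2         # assumption: 16-bit color
FRAMEBUFFER_BYTES = WIDTH * HEIGHT * BYTES_PER_PIXEL

BLE_PAYLOAD_BYTES = 20      # assumption: a common default BLE payload size


def chunk_message(text, payload_size=BLE_PAYLOAD_BYTES):
    """Split a UTF-8 message into payload-sized byte chunks (hypothetical helper)."""
    data = text.encode("utf-8")
    return [data[i:i + payload_size] for i in range(0, len(data), payload_size)]


# A short status line fits in a handful of packets, whereas a full frame
# would need hundreds of them.
packets = chunk_message("Tue 14:05  Cloudy 12C")
packets_per_frame = -(-FRAMEBUFFER_BYTES // BLE_PAYLOAD_BYTES)  # ceiling division
```

Under these assumptions, sending text and letting the microcontroller render it with its own font is far cheaper than streaming pixels over the radio link.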


For [Tony]’s entry for The Hackaday Prize,