In recent years, quadrotors have exploded in popularity. They’ve become cheap and durable, and they can do some really impressive things, but are they the most efficient design? Researchers at the University of Queensland don’t think so.

Helicopters are still much more efficient and powerful thanks to their one big rotor and, with the swashplate mechanism, perhaps even more maneuverable. After all, did you see our recent post on collective pitch thrust vectoring? And that was a plane! A few quick searches for helicopter tricks and we think you’ll agree.

The new design, tentatively called the Y4 (or maybe a “Triquad”), is still a quadrotor, but it’s been jumbled up a bit to take the best of both worlds. It has a main prop with a swashplate mechanism and three smaller rotors, fixed at 45-degree angles, that provide the counter-torque. It’s kind of like a helicopter with three tails.

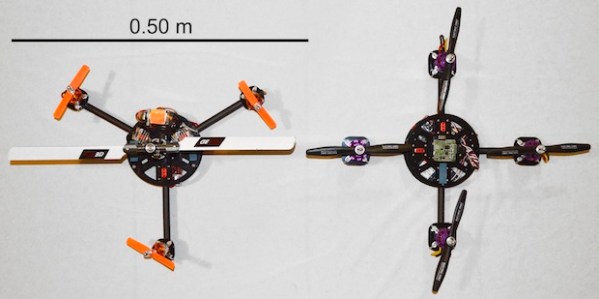

Regarding efficiency, the researchers expect this design could achieve an overall performance improvement of about 25% over a standard quadrotor. To test that, they built a quad and a Y4 as similar as possible: the same size, mass, batteries, arms, and controller board. The results? The Y4 ran 15% longer! They think the design could very well hit the 25% mark, because in this test the Y4 was designed to meet the specifications of the quad, whereas a more refined Y4 without those limitations could perhaps perform even better.
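To put those percentages in perspective, here’s a quick back-of-the-envelope sketch. It assumes both craft drain the same battery at a roughly constant average power, so run time scales inversely with power draw. The 10-minute baseline and the function names are our own placeholders, not figures from the researchers.

```python
def runtime_with_gain(baseline_minutes: float, gain_fraction: float) -> float:
    """Run time after a fractional gain (0.15 means 15% longer)."""
    return baseline_minutes * (1.0 + gain_fraction)


def power_ratio_for_gain(gain_fraction: float) -> float:
    """Average power draw relative to the baseline craft.

    Assumes the same usable battery energy and constant average power,
    so power is inversely proportional to run time.
    """
    return 1.0 / (1.0 + gain_fraction)


if __name__ == "__main__":
    baseline = 10.0  # hypothetical quad flight time in minutes (assumption)
    for label, gain in (("measured", 0.15), ("expected", 0.25)):
        minutes = runtime_with_gain(baseline, gain)
        ratio = power_ratio_for_gain(gain)
        print(f"{label}: {minutes:.1f} min, {ratio:.2f}x the quad's average power")
```

Under those assumptions, a 15% longer run time means the Y4 is hovering on roughly 0.87 times the quad’s average power, and the hoped-for 25% would bring that down to 0.8 times.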

Unfortunately, there’s no video we can find, but if you stick around after the break we have a great diagram of how (and why) this design works!