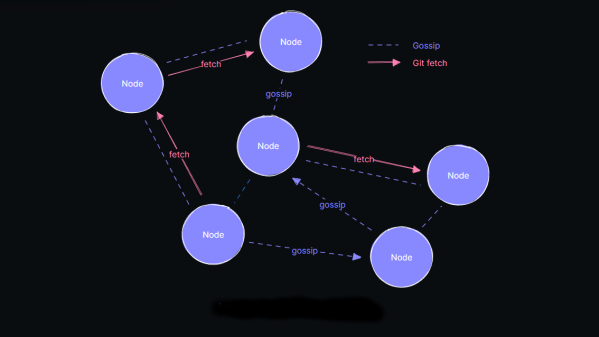

A recent security vulnerability — a potential ssh backdoor via the liblzma library in the xz package — is having a lot of analysis done on how the vulnerability was introduced, and [Rob Mensching] felt that it was important to highlight what he saw as step number zero of the whole process: exploit the fact that a stressed package maintainer has burned out. Apply pressure from multiple sources while the attacker is the only one stepping forward to help, then inherit the trust built up by the original maintainer. Sadly, [Rob] sees in these interactions a microcosm of what happens far too frequently in open source.

Maintaining open source projects can be a high stress activity. The pressure and expectations to continually provide timely interaction, support, and updates can easily end up being unhealthy. As [Rob] points out (and other developers have observed in different ways), this kind of behavior just seems more or less normal for some projects.

The xz/liblzma vulnerability itself is a developing story, read about it and find links to the relevant analyses in our earlier coverage here.