Synesthesia is a mix-up in the brain’s wiring in which sensory input is perceived differently from what ‘normal people’ usually experience. People with synesthesia may perceive visual input as sound, or, driving past a fresh skunk roadkill on the highway, may find that the smell is ‘loud.’ It’s a way of experiencing the world that’s inaccessible to most of the population, but the Syneseizure tries to replicate it.

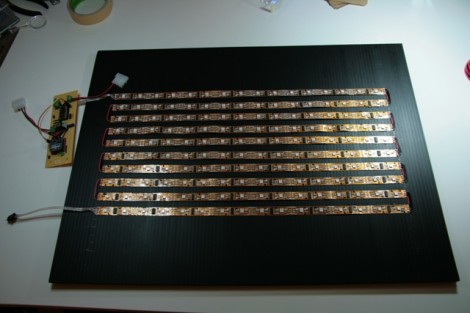

There are many types of synesthesia (Led Zeppelin feels purple, or apples smell farther away than grapes), but [Greg] and his team needed to choose one subtype to keep the project manageable. They chose mapping visual input to touch sensation. This was accomplished by attaching a dozen speakers to the test subject’s face. A webcam recorded what the subject was looking at, and a Processing sketch reduced each frame to a grayscale 4×3 pixel grid, one pixel per speaker. The intensity of each pixel set the strength of the buzzing in the corresponding speaker. All that was left to do was put a mask on the subject and have them walk around.
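For anyone who wants to play with the idea, here is a minimal Python sketch of the frame-to-speaker mapping described above. It is not [Greg]’s Processing code. It assumes each frame arrives as a 2D list of 8-bit grayscale values, that the downsampling is plain block averaging, and that brighter cells drive the speakers harder; the function names `downsample` and `speaker_levels` are hypothetical, and actually driving the speakers is left out.

```python
from typing import List

# Assumed layout: 4 columns x 3 rows = 12 cells, one per speaker.
GRID_COLS = 4
GRID_ROWS = 3


def downsample(frame: List[List[int]], cols: int = GRID_COLS,
               rows: int = GRID_ROWS) -> List[List[float]]:
    """Average a grayscale frame (values 0-255) into a rows x cols grid of block means."""
    height = len(frame)
    width = len(frame[0])
    if height < rows or width < cols:
        raise ValueError("frame is smaller than the target grid")
    grid = []
    for r in range(rows):
        # Integer bounds of this row band; every band holds at least one pixel row.
        y0, y1 = r * height // rows, (r + 1) * height // rows
        row = []
        for c in range(cols):
            x0, x1 = c * width // cols, (c + 1) * width // cols
            block = [frame[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(block) / len(block))
        grid.append(row)
    return grid


def speaker_levels(grid: List[List[float]]) -> List[float]:
    """Map each cell's mean brightness (0-255) to a drive level in [0.0, 1.0], row-major.

    Assumption: brighter means stronger buzzing; the source only says intensity
    sets the strength, not which direction.
    """
    return [cell / 255.0 for row in grid for cell in row]


# Usage: a synthetic 640x480 frame, left half black, right half white.
frame = [[0] * 320 + [255] * 320 for _ in range(480)]
levels = speaker_levels(downsample(frame))
print(levels[:4])  # first row of speakers: [0.0, 0.0, 1.0, 1.0]
```

Averaging whole blocks, rather than sampling one pixel per cell, keeps a single noisy pixel from making a speaker twitch. The row-major order of `levels` only has to match however the twelve speakers are wired, which is also an assumption here.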

The Syneseizure was built for Science Hack Day San Francisco and ended up winning the People’s Choice award. There are plenty of pictures and a great write-up on the project website, so be sure to check it out.