[Reza] has been working on detecting hand gestures with LIDAR for about 10 years now, and we’ve got to say the end result is worth the wait.

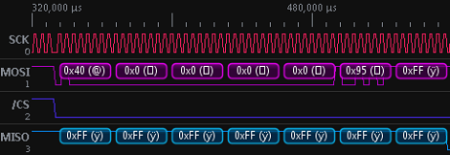

The build uses three small LIDAR sensors to measure the distance to an object. Each sensor sends out an infrared pulse and records its time of flight: how long the light takes to travel out, reflect off the object, and return to a light sensor. Basically, it’s radar, but with infrared light. The three sensors are mounted on a stand and plugged into an Arduino Uno. By measuring how far the object is from each sensor, [Reza] can determine its position in 3D space relative to the sensors.
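The write-up doesn’t say exactly how [Reza] turns three distances into a position, but the textbook way is trilateration: each distance defines a sphere around its sensor, and the object sits where the spheres meet. Below is a minimal sketch of that idea, assuming a hypothetical layout with sensor 1 at the origin, sensor 2 at `(d, 0, 0)` and sensor 3 at `(i, j, 0)`, and assuming the hand is in front of the sensors (z ≥ 0). The `tof_to_distance` helper only illustrates the time-of-flight principle; the source doesn’t say whether these sensors report raw timing or a finished distance. All function and parameter names are made up for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_to_distance(round_trip_s: float) -> float:
    """Convert a round-trip time of flight (seconds) to a one-way distance (metres)."""
    # The pulse travels out to the object and back, so halve the path length.
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def trilaterate(r1: float, r2: float, r3: float,
                d: float, i: float, j: float) -> tuple[float, float, float]:
    """Locate a point from its distances r1, r2, r3 to three sensors.

    Hypothetical layout (an assumption, not the original build):
      sensor 1 at (0, 0, 0), sensor 2 at (d, 0, 0), sensor 3 at (i, j, 0), j != 0.
    Two points satisfy the equations (mirror images across the sensor plane);
    we keep the one with z >= 0, i.e. in front of the sensors.
    """
    # Subtracting the sphere equations pairwise cancels the squared terms,
    # leaving linear equations for x and then y.
    x = (r1**2 - r2**2 + d**2) / (2 * d)
    y = (r1**2 - r3**2 + i**2 + j**2) / (2 * j) - (i / j) * x
    z_sq = r1**2 - x**2 - y**2
    if z_sq < 0:
        # Noisy readings can make the spheres just miss each other;
        # clamp to the sensor plane instead of taking sqrt of a negative.
        z_sq = 0.0
    return x, y, math.sqrt(z_sq)


if __name__ == "__main__":
    # Simulate a hand at (0.1, 0.2, 0.3) m with sensors in the hypothetical layout.
    sensors = [(0.0, 0.0, 0.0), (0.4, 0.0, 0.0), (0.2, 0.3, 0.0)]
    hand = (0.1, 0.2, 0.3)
    r1, r2, r3 = (math.dist(s, hand) for s in sensors)
    print(trilaterate(r1, r2, r3, d=0.4, i=0.2, j=0.3))
```

Fixing the sensor coordinates this way keeps the algebra to three closed-form lines, which is cheap enough for a small microcontroller; a real rig with sensors in arbitrary positions would first need a coordinate transform into this frame.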

Unlike the Kinect-based gesture applications we’ve seen, [Reza]’s LIDAR setup can work outside in the sun. And because each LIDAR sensor measures distance a million times a second, it’s much more responsive than a Kinect. Not bad for 10 years’ worth of work.

You can check out [Reza]’s gesture control demo, as well as a few demos of his LIDAR hardware after the break.