When most people think of a microwave, they think of that little magic box that heats food really fast. An entire frozen-food industry has sprung up around the household microwave oven, and it would be difficult to find a household without one. You might be surprised that microwave ovens, or reactors to be more accurate, can also be found in chemistry labs and industrial complexes throughout the world. They are used in organic synthesis, and many are equipped with devices to monitor pressure and temperature while heating. It's probably less well known that most food production facilities use microwave-based moisture solids analyzers. And there’s even an industry that uses microwaves with acids to dissolve or digest samples quickly. In this article, we’re going to look beyond the typical magnetron / HV power supply / electronics and instead focus on some other peculiarities of microwave reactors that you might not know.

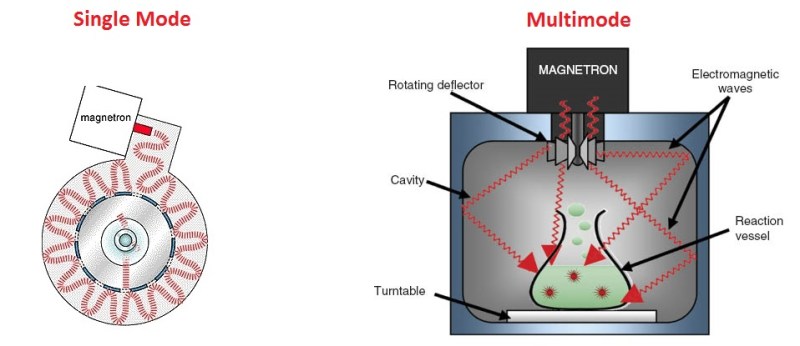

Single vs Multimode

The typical microwave oven found in millions of households across the world is known as the multimode type. In these, the microwaves take on the typical wavelike behavior we learned about in Physics 101. They develop constructive and destructive interference patterns, causing ‘hot spots’ in the cavity. A reader tipped us off to this example, where [Lenore] uses a popular Indian snack food to observe radiation distribution in a multimode microwave cavity. Because of these hot spots, you need some type of turntable to move the food around the cavity and even out the cooking. You can avoid the turntable by using what is known as a mode stirrer. This is basically a metal ‘fan’ that helps spread the microwaves throughout the cavity. Mode stirrers can often be found in industrial microwaves. Next time you’re in the 7-11, take a look at the top of the cavity, and you will likely see one.

Multimode microwaves also require an isolator to protect the magnetron from reflected energy. An isolator works like a diode: it does not let microwaves bounce back and hit the magnetron. Instead, it absorbs the reflected energy and turns it into heat. It’s important to note that all microwave energy must be absorbed in a multimode cavity. What is not absorbed by the food will be absorbed by the isolator. Eventually, all isolators will fail from the heat stress. Think about that next time you’re nuking a small amount of food with a thousand watts!

Single mode microwaves are what you will find in chemistry and research labs. In these, the cavity is tuned to the frequency of the magnetron, 2.45 GHz. This allows for a uniform microwave field. There is no interference, and therefore no hot or cold spots. The microwave field is completely homogeneous. Because of this, there is no reflected energy, and no need for an isolator. These traits allow single mode microwaves to be much smaller than multimode ones, and usually of much lower power, as there is a 100% transfer of energy into the sample. While most multimode microwaves are 1000+ watts, the typical single mode will be around 300 watts.
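To put the 2.45 GHz figure in perspective, here is a small Python sketch that works out the free-space wavelength and its half. As a rough rule of thumb, the hot spots in a multimode cavity sit on the order of half a wavelength apart, though the real pattern depends on the cavity geometry and the load. The function names are ours, chosen for illustration only.

```python
# Rough sketch: free-space wavelength at the magnetron frequency.
# Assumption: free-space propagation; a real cavity and its load
# will shift the actual field pattern.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
MAGNETRON_FREQUENCY_HZ = 2.45e9


def free_space_wavelength_cm(frequency_hz: float) -> float:
    """Return the free-space wavelength in centimeters."""
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz * 100.0


def half_wavelength_cm(frequency_hz: float) -> float:
    """Return half the free-space wavelength in centimeters."""
    return free_space_wavelength_cm(frequency_hz) / 2.0


if __name__ == "__main__":
    wavelength = free_space_wavelength_cm(MAGNETRON_FREQUENCY_HZ)
    spacing = half_wavelength_cm(MAGNETRON_FREQUENCY_HZ)
    print(f"Wavelength: {wavelength:.1f} cm, half wavelength: {spacing:.1f} cm")
```

At 2.45 GHz the wavelength comes out to roughly 12 cm, which is why a turntable or mode stirrer only has to shift things by a few centimeters to even out the heating.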

Power Measurement

Most microwave ovens only produce one power level. Lower power settings are delivered by controlling how long the magnetron stays on. So if you were running something at 50% power for 1 minute, the magnetron would be on for a total of 30 seconds. You can estimate the output power of any microwave by heating 1 liter of water at 100% power for 2 minutes. Multiply the temperature rise in degrees Celsius by 35, and that is roughly your power in watts. The factor comes from the heat capacity of water: 1 liter takes about 4,186 joules per degree Celsius, and 4,186 divided by 120 seconds is about 35.
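The arithmetic behind both the duty cycle and the water test is easy to sketch in Python. This is a minimal illustration, not a calibrated procedure: it assumes all of the microwave energy ends up in the water, ignores heat lost to the container and the air, and uses function names we made up for the example.

```python
# Minimal sketch of the duty-cycle and water-test arithmetic.
# Assumptions: every joule goes into the water, none into the
# container or surroundings; 1 liter of water weighs 1000 g.

WATER_SPECIFIC_HEAT_J_PER_G_C = 4.186


def magnetron_on_seconds(power_fraction: float, run_seconds: float) -> float:
    """Time the magnetron is actually on for a given power setting."""
    if not 0.0 <= power_fraction <= 1.0:
        raise ValueError("power_fraction must be between 0 and 1")
    return power_fraction * run_seconds


def estimate_output_power_watts(
    delta_t_celsius: float,
    water_grams: float = 1000.0,
    heating_seconds: float = 120.0,
) -> float:
    """Estimate output power from the temperature rise of a water load."""
    energy_joules = water_grams * WATER_SPECIFIC_HEAT_J_PER_G_C * delta_t_celsius
    return energy_joules / heating_seconds


if __name__ == "__main__":
    # 50% power for one minute: magnetron on for half the time.
    print(magnetron_on_seconds(0.5, 60.0))
    # Hypothetical reading: the liter of water warmed by 20 degrees C.
    print(round(estimate_output_power_watts(20.0)))
```

With the default 1 liter and 2 minutes, the function reduces to the "multiply by 35" shortcut; the extra parameters are only there in case you use a different amount of water or heating time.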

There are other types of microwaves that control power by adjusting the current through the magnetron. This type of control is often used in moisture solids analyzers, where more precise control is needed to keep samples from burning.

Have you used a microwave and an Arduino for something other than cooking food? Let us know in the comments!

Thanks to [konnigito] for the tip!