Tor is the household name in anonymous networks, but the system has vulnerabilities, especially when it comes to an attacker working out who is sending and receiving messages. Researchers at MIT and the École Polytechnique Fédérale de Lausanne think they have found a better way with a system called Riffle. You can dig into the whitepaper, but the MIT news article does a great job of providing an overview.

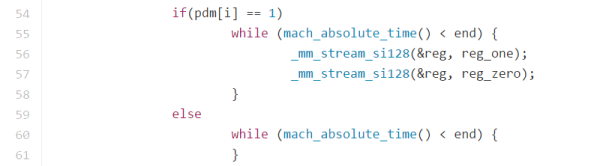

The strength at the core of Tor is the Onion Routing that makes up the last two letters of the network’s name. Riffle keeps that aspect, building upon it in a novel way. The onion analogy has to do with layers of skin: a sending computer encrypts the message multiple times, and as it passes through each server, one layer of encryption is removed.
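To make the layering concrete, here is a minimal Python sketch of the idea. It is a toy, not what Tor or Riffle actually run: the "cipher" is an XOR keystream derived from SHA-256, and the names `keystream`, `xor_layer`, `wrap` and `relay` are made up for this illustration. Real systems use proper authenticated ciphers and negotiate a separate key with each server.

```python
import hashlib
import secrets


def keystream(key: bytes, length: int) -> bytes:
    """Derive a toy keystream by hashing key + counter (illustration only)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def xor_layer(key: bytes, data: bytes) -> bytes:
    """Add or remove one layer; XOR with the same keystream undoes itself."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))


def wrap(message: bytes, server_keys: list[bytes]) -> bytes:
    """Sender side: add one layer per server, the last server's layer first."""
    onion = message
    for key in reversed(server_keys):
        onion = xor_layer(key, onion)
    return onion


def relay(onion: bytes, server_keys: list[bytes]) -> bytes:
    """Simulate the path: each server in turn peels off its own layer."""
    for key in server_keys:
        onion = xor_layer(key, onion)
    return onion


if __name__ == "__main__":
    # Hypothetical per-server keys; a real client would agree these with each server.
    keys = [secrets.token_bytes(32) for _ in range(3)]
    onion = wrap(b"meet at noon", keys)
    print(relay(onion, keys))  # the original message comes back out
```

One caveat about the toy: XOR layers can be removed in any order, whereas with a real cipher each server can only strip the outermost layer, which is what forces the message along the intended path.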

Riffle starts by sending the message to every server in the network. It then uses Mix Networking to route the message to its final destination in an unpredictable way. As long as at least one of the servers in the network is uncompromised, tampering will be discovered when verifying that initial message (or through subsequent authenticated encryption checks as the message passes each server).
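The mix-and-verify idea can be sketched in a few lines of Python. This is a heavily simplified stand-in, not Riffle's actual protocol: it uses a plain HMAC tag in place of authenticated encryption, assumes a single shared key, and the names `tag` and `mix` are hypothetical. The point is only to show a server rejecting a tampered batch and otherwise forwarding it in an unpredictable order.

```python
import hashlib
import hmac
import secrets


def tag(key: bytes, payload: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over a payload."""
    return hmac.new(key, payload, hashlib.sha256).digest()


def mix(batch: list[tuple[bytes, bytes]], key: bytes) -> list[tuple[bytes, bytes]]:
    """Check every (payload, tag) pair, then forward the batch in random order."""
    for payload, mac in batch:
        if not hmac.compare_digest(mac, tag(key, payload)):
            raise ValueError("tampering detected")
    shuffled = list(batch)
    # Use the OS random source so the output order is unpredictable.
    secrets.SystemRandom().shuffle(shuffled)
    return shuffled


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # assumed shared key, for illustration only
    batch = [(m, tag(key, m)) for m in (b"alice", b"bob", b"carol")]
    mixed = mix(batch, key)
    print(sorted(mixed) == sorted(batch))  # same messages, order hidden
```

Because every message in a batch is shuffled together, an observer who sees what goes in and what comes out cannot match the two up by position, and a server that alters a payload fails the check at the next hop.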

The combination of Mix Networking with message verification is what is novel here. The content of the message was already safe because of the encryption used, but Riffle also protects the anonymity of the sender and receiver.

[via Engadget]