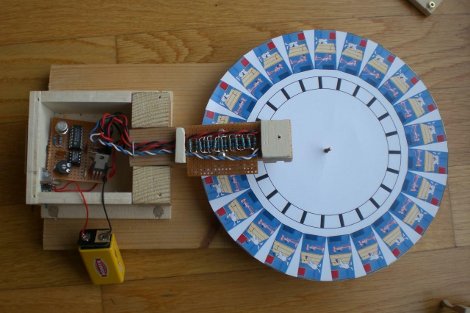

Drehkino is a turntable cinema that plays short (50-frame) looping animations from specially printed disks, and is housed in a wooden frame similar to a record player. Each paper disk carries the frames of animation along with an optical rotary encoder pattern, which is picked up by an infrared emitter/detector pair scavenged from an old mouse. The signal is then passed to a 555 timer configured as a Schmitt trigger that (indirectly) drives the LED strobe light, creating animation that is synced to the speed of the turntable.
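To get a feel for the timing, here is a rough Python sketch of the two ideas in play: how often the strobe has to fire, and how a 555 wired as a Schmitt trigger cleans up the encoder signal. It is not taken from the project's files. The 33⅓ RPM speed, the 5 V supply and the function names are assumptions for illustration; the 1/3 and 2/3 supply thresholds and the inverting behavior are standard for a 555 with its trigger and threshold pins tied together.

```python
def flashes_per_second(frames_per_rev, rpm):
    """One flash per frame: frames per revolution times revolutions per second."""
    return frames_per_rev * rpm / 60.0


def schmitt_555(samples, vcc=5.0, initial_high=True):
    """Model a 555 used as an inverting Schmitt trigger.

    The output goes low once the input rises above 2/3 Vcc and goes high
    again only after the input falls below 1/3 Vcc. Anything in between
    leaves the output unchanged, which is what suppresses sensor jitter.
    vcc and initial_high are assumed values, not taken from the schematic.
    """
    lower = vcc / 3.0
    upper = 2.0 * vcc / 3.0
    out = initial_high
    states = []
    for v in samples:
        if v > upper:
            out = False
        elif v < lower:
            out = True
        states.append(out)
    return states


if __name__ == "__main__":
    # Assumed turntable speed of 33 1/3 RPM with the 50 frames from the article.
    print(round(flashes_per_second(50, 100.0 / 3.0), 2), "flashes per second")
    # A noisy sweep from dark to light and back, in volts.
    print(schmitt_555([0.0, 2.0, 4.0, 3.0, 2.0, 1.0]))
```

Because each flash is triggered by the encoder mark for its frame rather than by a free-running clock, the image should stay locked in place even if the platter speed drifts.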

That sounds all well and good, but it must be a big pain to split up an animation and calculate each frame’s position, right? That is covered too, by a couple of scripts. Movie clips are sent through VirtualDub to select which 50 frames you want, and those frames are exported as individual images. An sh script then takes over, using gawk to manipulate the data and create an ImageMagick (“CONVSCRIPT”) file. After you do the script dance, you are left with a perfectly spaced wheel, encoder included, ready to print on standard paper as a PDF.
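The frame-position math the scripts take care of is simple enough to sketch. The Python below is only an illustration of the geometry, not the project's gawk code: the function name, the 80 mm radius and the coordinate convention (frame 0 at the top, angles clockwise, y pointing down as in image coordinates) are all assumptions.

```python
import math


def frame_layout(n_frames=50, radius_mm=80.0):
    """Return (index, angle_deg, x_mm, y_mm) for each frame on the disk.

    Frames are spaced evenly around a circle centered on the spindle.
    angle_deg is also how far each frame image would need to be rotated
    so that its top edge faces outward from the center.
    """
    step = 360.0 / n_frames
    placements = []
    for i in range(n_frames):
        angle = i * step
        theta = math.radians(angle)
        x = radius_mm * math.sin(theta)
        y = -radius_mm * math.cos(theta)
        placements.append((i, angle, x, y))
    return placements


if __name__ == "__main__":
    for index, angle, x, y in frame_layout()[:3]:
        print(f"frame {index}: rotate {angle:.1f} deg, center ({x:.1f}, {y:.1f}) mm")
```

With 50 frames the spacing works out to 7.2 degrees per frame, and an encoder mark placed at the same angle on a smaller radius would line up with each frame.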

Software and schematics are included, and future improvements are already in the works. It’s nifty, so it’s worth a look. This is an interesting take on the old zoetrope design.