The Kinect has long been able to create realistic 3D models of real, physical spaces. Combining these Kinect-mapped spaces with an Oculus Rift, however, is something entirely new.

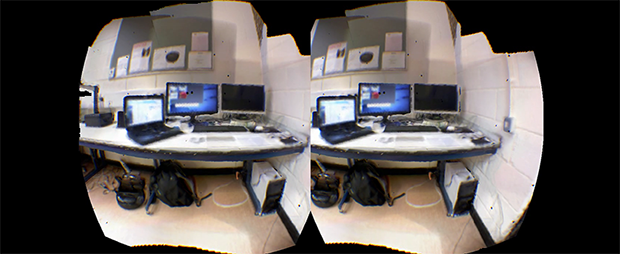

[Thomas] and his colleagues on the Kintinuous project are modeling an office space with the RGB-D sensor of the old Xbox 360 Kinect, then using an Oculus Rift to inhabit that space. They're not using the Rift's internal IMU to position the camera in the virtual space, either. Instead, they're using the Kinect's live depth sensing to track the camera position and drive what appears on the Rift's screens.

While Kintinuous is very, very good at mapping large-scale spaces, the software itself is locked up by copyright issues that the authors and developers don't control. That doesn't mean the techniques behind Kintinuous are locked up, however. Anyone is free to read the papers (here's one, and another, both PDFs of course) and re-implement Kintinuous as an open source project. That would be really cool, and we'd encourage anyone with a bit of experience with point clouds to give it a shot.

Video below.

Continue reading “Virtual Physical Reality With Kintinuous And An Oculus Rift”