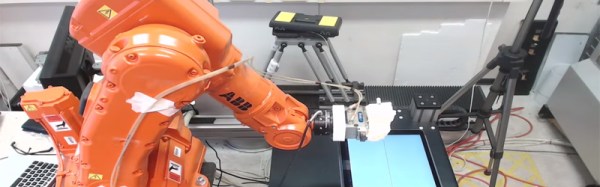

Recently, [Jeff Geerling] waded into the bad-press feeding frenzy around Sipeed’s NanoKVM, most notably over a ‘hidden’ microphone that has no business on a remote KVM solution. The problem with that reporting, as [Jeff] points out in the video below, is that the NanoKVM (technically the NanoKVM-Cube) is merely a software solution put on an existing development board, the LicheeRV Nano, along with an HDMI-in board. The microphone is part of that development board and was simply not removed for the new project, and it is likely that much of the Linux image is reused as well.

Of course, the security report that caused so much fuss was published back in February of 2025, and some of the issues pertaining to poor remote security have since been addressed in the public GitHub repository. Those were valid concerns that deserved fixing, but the microphone should not be one of them: using it requires someone to be logged into the device, at which point you probably have bigger problems.

Security considerations aside, a microphone on a remote KVM solution could also be very useful, as dutifully pointed out in the comments by [bjoern.photography], who notes that being able to listen to beeps on boot could help while troubleshooting a stricken system. We imagine the same is true for other system sounds, such as fan or cooling pump noises. Maybe all remote KVM solutions should have microphone arrays?

Of course, if you don’t like the NanoKVM, you could always roll your own.

Top image: the NanoKVM bundle from [Jeff]’s original review. (Credit: [Jeff Geerling])
