Deep in the dark recesses of Internet advertisers and production studios, there’s a very, very strange device. It fits on a user’s head, has a camera pointing out, but also a camera pointing back at the user. That extra camera is aimed right at the eye. This is a gaze tracking system, a wearable robot that looks you in the eye and can tell you exactly what you were looking at. It’s exactly what you need to tell if people are actually looking at ads.

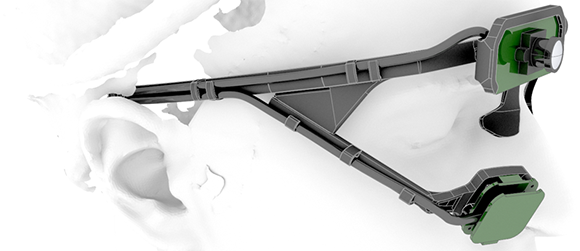

For their Hackaday Prize entry, Makeroni Labs is building an open source eye tracking system. It’s just the thing for testing how ‘sticky’ a webpage is or, since we’d like to see people do something useful with their Hackaday Prize projects, for giving people with disabilities a way to control their surroundings.

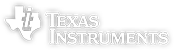

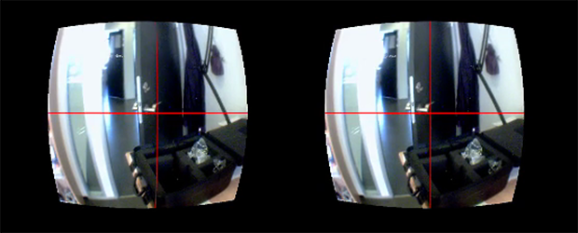

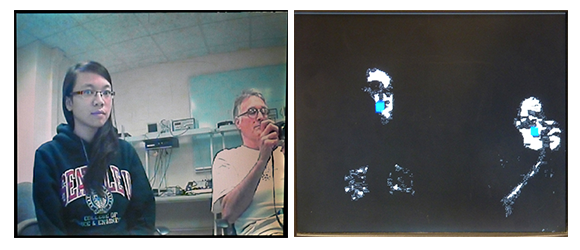

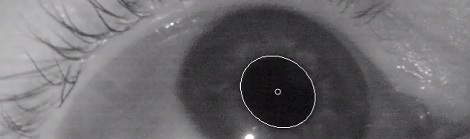

There are really only a few things you need to build an eye tracking camera – a pair of cameras and a bit of software. The cameras are just webcams, with the IR filters removed and a few IR LEDs aimed at the eye so the eye-facing camera can see the pupil. The second camera is pointed directly ahead, and with a bit of tricky math, the software can figure out where the user is looking.
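To give a feel for what that "tricky math" might involve, here is a minimal sketch in plain Python. It is not Makeroni Labs' code. It assumes the pupil shows up as the darkest blob in the IR-lit eye image, and that a simple affine map, fitted from a few calibration points where the user stares at known targets, is good enough to convert pupil position into scene-camera coordinates. The function names (`pupil_center`, `fit_affine`, `map_gaze`) and the threshold value are made up for illustration; real trackers often use more robust pupil detection and higher-order mappings.

```python
def pupil_center(gray, threshold=50):
    """Centroid (x, y) of pixels darker than `threshold` in a 2D list of
    grayscale values. Assumes the pupil is the darkest region of the image."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(gray):
        for x, value in enumerate(row):
            if value < threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None  # no dark pixels found, e.g. during a blink
    return (sum_x / count, sum_y / count)


def _solve3(matrix, rhs):
    """Solve a 3x3 linear system by Gauss-Jordan elimination with pivoting."""
    a = [row[:] + [r] for row, r in zip(matrix, rhs)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("degenerate calibration points (collinear?)")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(3):
            if r != col:
                factor = a[r][col] / a[col][col]
                for c in range(col, 4):
                    a[r][c] -= factor * a[col][c]
    return [a[i][3] / a[i][i] for i in range(3)]


def fit_affine(pupil_pts, scene_pts):
    """Least-squares fit of scene = A * [px, py, 1] from calibration pairs.
    Needs at least three non-collinear pupil points."""
    rows = [(px, py, 1.0) for px, py in pupil_pts]
    # Normal equations: (R^T R) w = R^T target, solved once per output axis.
    rtr = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for axis in range(2):
        rtt = [sum(r[i] * s[axis] for r, s in zip(rows, scene_pts))
               for i in range(3)]
        coeffs.append(_solve3(rtr, rtt))
    return coeffs  # [[a, b, c], [d, e, f]]


def map_gaze(coeffs, pupil):
    """Map a pupil position to scene-camera coordinates."""
    px, py = pupil
    return tuple(a * px + b * py + c for a, b, c in coeffs)
```

In use, the user would look at a handful of known targets while `pupil_center` is recorded for each one. Those pairs go into `fit_affine`, and from then on every eye-camera frame can be turned into a gaze point in the forward camera's image with `map_gaze`.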

The electronics are rather interesting, with all the processing running on a VoCore, a coin-sized embedded board. It runs Linux, though, and hopefully it’ll be fast enough to capture two video streams, calculate where the pupil is looking, and send another video stream out. As far as the rest of the build goes, the team is 3D printing everything and plans to make the design available to everyone. That’s great for experimentation in gaze tracking, and an awesome technology for the people who really need it.