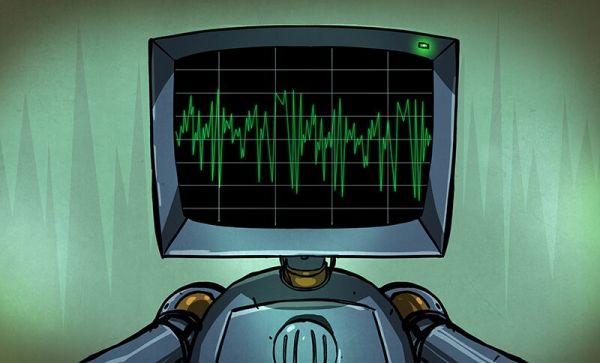

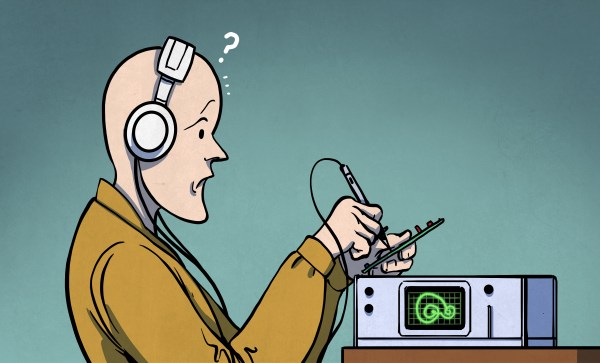

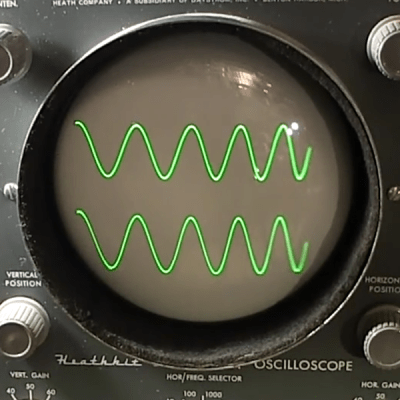

We’ve recently seen a couple reviews of a particularly cheap oscilloscope that, among other things, doesn’t meet its advertised specs. Actually, it’s not even close. It claims to be a 100 MHz scope, and it’s got around 30 MHz of bandwidth instead. If you bought it for higher frequency work, you’d have every right to be angry. But it’s also cheap enough that, if you were on a very tight budget, and you knew its limitations beforehand, you might be tempted to buy it anyway. Or so goes one rationale.

In principle, I’m of the “buy cheap, buy twice” mindset. Some tools, especially ones that you’re liable to use a lot, make it worth your while to save up for the good stuff. (And for myself, I would absolutely put an oscilloscope in that category.) If you outgrow or outlive the cheaper tool and end up buying the better one eventually, the money spent on the cheaper tool was simply wasted.

But that’s not always the case either, and that’s where you have to know yourself. If you’re only going to use it a couple times, and it’s not super critical, maybe it’s fine to get the cheap stuff. Or if you know you’re going to break it in the process of learning anyway, maybe it’s a shame to put the gold-plated version into your noob hands. Or maybe you simply don’t know if an oscilloscope is for you. It’s possible!

And you can mix and match. I just recently bought tools for changing our car’s tires: a dirt-cheap pneumatic jack and an expensive torque wrench. My logic? The jack is relatively easy to make functional, and the specs are so wildly in excess of what I need that even if it’s all lies, it’ll probably suffice. The torque wrench, on the other hand, is a bit of a precision instrument, and it’s pretty important that the bolts are socked up tight enough. I don’t want the wheels rolling off as I drive down the road.

Point is, I can see both sides of the argument. And in the specific case of the ’scope, the cheapo one can also be battery powered, which gives it a bit of a niche functionality when probing live-ground circuits. Still, if you’re marginally ’scope-curious, I’d say save up your pennies for something at least mid-market. (Rigol? Used Agilent or Tek?)

But isn’t it cool that we have so many choices? Where do you buy cheap? Where won’t you?