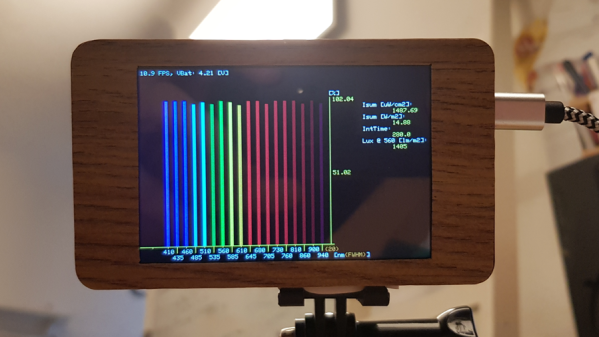

Many sci-fi movies and TV shows feature hand-held devices capable of sensing all manner of wonderful things. The µ Spec Mk II from [j] is built very much in that vein, packing plenty of functionality into a handy palm-sized form factor.

An ESP32 serves as the brains of the device, hooked up to a 480×320 resolution touchscreen display. Onboard is an MLX90640 thermal camera with 32×24 pixel resolution, along with an 8×8 LIDAR sensor and a spectral sensor that can capture all manner of interesting information about incoming light. The spectral sensor can also be used to determine the transmission coefficient or reflection coefficient of materials, if that’s something you desire. A MEMS microphone rounds out the package for capturing audio. As a bonus, it can draw a Mandelbrot set, just for the fun of it.
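For the curious, transmission and reflection coefficients are usually estimated per spectral channel as a simple ratio. For transmission, you divide the reading taken through the material by a reference reading taken with nothing in the beam. For reflection, you divide the reading taken off the material by one taken off a white reference target. The build’s own code isn’t described here, so the following is only a minimal sketch of that common ratio method. The function name `coefficients`, the optional dark-reading subtraction, and the list-per-channel layout are our assumptions, not the project’s implementation.

```python
def coefficients(sample, reference, dark=None):
    """Estimate per-channel transmission or reflection coefficients.

    Assumed (hypothetical) inputs, one value per spectral channel:
      sample    -- light through the material (transmission) or
                   reflected off it (reflection)
      reference -- light with no material (transmission) or
                   reflected off a white target (reflection)
      dark      -- optional readings with the light source off,
                   subtracted from both sample and reference
    Returns a list of ratios; NaN where the reference has no signal.
    """
    if len(sample) != len(reference):
        raise ValueError("sample and reference must have the same channels")
    if dark is None:
        dark = [0.0] * len(sample)
    if len(dark) != len(sample):
        raise ValueError("dark must have the same channels as sample")

    result = []
    for s, r, d in zip(sample, reference, dark):
        signal = r - d  # usable reference signal after dark correction
        if signal <= 0:
            result.append(float("nan"))  # no light to compare against
        else:
            result.append((s - d) / signal)
    return result


if __name__ == "__main__":
    reference = [1200.0, 1500.0, 900.0]  # made-up readings, three channels
    sample = [600.0, 1200.0, 90.0]
    print(coefficients(sample, reference))  # [0.5, 0.8, 0.1]
```

Subtracting a dark reading from both sides is a common way to cancel sensor offset and ambient light. A real device would also need to keep gain and integration time identical between the sample and reference measurements for the ratio to mean anything.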

Future plans involve adding an SD card so that captured data can be stored in CSV format, as well as expanding the onboard sensor package. It’s a project that reminds us of some of the tricorder builds we’ve seen over the years. Video after the break.

Continue reading “2022 Sci-Fi Contest: Multi-Sensor Measurement System” →