The notion of self-driving cars isn’t new. You might be surprised at the number of such projects dating back to the 1920s. Many of these systems relied on external aids built into the roadways. Only recently have self-driving cars on existing roadways come closer to reality than fiction: increased computer processing power, smaller and more power-efficient computers, and compact Lidar and millimeter-wave Radar sensors are but a few of the enabling technologies. In South Korea, [Prof Min-hong Han] and his team of students took advantage of these technological advances and built an autonomous car which successfully navigated the streets of Seoul in several field trials. A second version subsequently drove itself along the 300 km journey from Seoul to the southern port city of Busan. You might think this is boring news, until you realize this was accomplished back in the early 1990s using an Intel 386-powered desktop computer.

The project created a lot of buzz at the time and was shown at the Daejeon Expo ’93 international exposition. Alas, the government eventually decided to cancel the research program, as it didn’t fit into their focus on heavy industries like shipbuilding and steel production. Given the tremendous focus on self-driving and autonomous vehicles today, and with the benefit of hindsight, we wonder if that was the best choice. This isn’t the only decision from Seoul that seems questionable when viewed from the present: Samsung executives famously declined to buy Andy Rubin’s new operating system for digital cameras and handsets back in late 2004, and a few weeks later Android was purchased by Google.

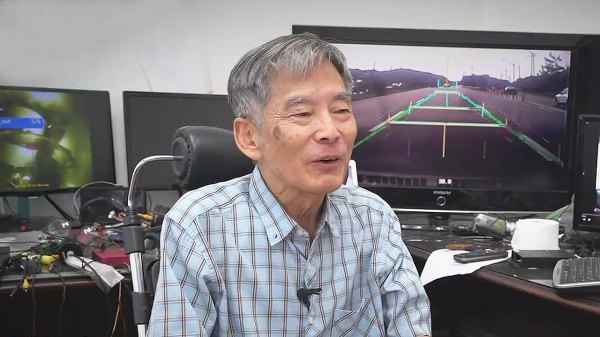

You should check out [Prof Han]’s YouTube channel, which has videos from the car’s camera while operating in various conditions, overlaid with the lane recognition markers and other information. I’ve driven the streets of Seoul, and that alone can be a frightening experience. But [Han] manages to stretch out in the back seat, so confident in his system that he doesn’t even wear a seatbelt.
