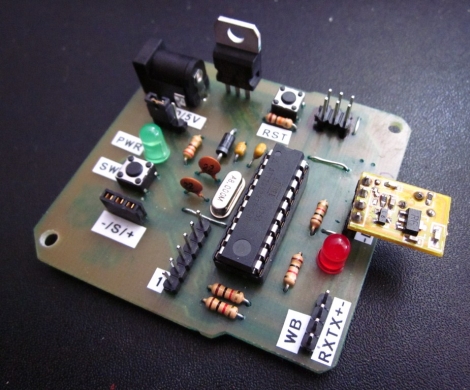

[FreddySam] had an old Omnitech GPS that he decided was worth taking apart to see what made it tick. While poking around the circuit board, he found a couple of solder pads labeled ‘MIC1’. This GPS didn’t have a microphone. So why would the unit have a mic input unless it could accept voice commands? [FreddySam] was about to find out.

The first step in getting the system working was adding a physical microphone. For this project, one was scavenged from an old headset. The mini microphone was removed from its housing and soldered to the GPS circuit board with a pair of wires. Leaving the mic hanging out of the case would have been unsightly, so it was tucked away in an otherwise empty part of the case. A hole drilled in the case lets the now-internal microphone pick up sound from outside.

The hardware modification was the easy part. Getting the GPS software to recognize the newly added mic was more of a challenge. It turns out that only one map version supports voice recognition: the older Navigon 2008 Q3. We suppose the next hack is getting this working with newer map packs. This project shows how a little motivation and time can quickly and significantly upgrade an otherwise ordinary piece of hardware. Kudos to [FreddySam] for a job well done.