No, that isn’t a scene from a horror movie up there; it’s [Oliver Kreylos’] avatar in a 3D office environment. If he looks a bit strange, it’s because he’s wearing an Oculus Rift, and his image is being stitched together from 3 Microsoft Kinect cameras.

[Oliver] has created a 3D environment which is incredibly realistic, at least to the wearer. He believes the secret is in the low latency of the entire system. When coupled with a good 3D environment, like the office shown above, the mind is tricked into believing it is really in the room. [Oliver] mentions that he finds himself subconsciously moving to avoid bumping into a table leg that he knows isn’t there. In [Oliver’s] words, “It circumnavigates the uncanny valley”.

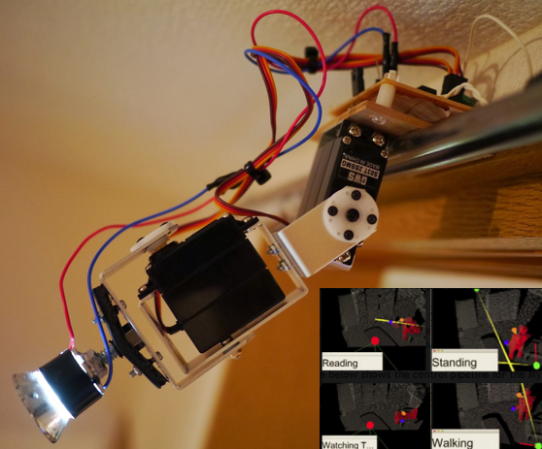

Instead of pulling skeleton data from the 3 Kinect cameras, [Oliver] is using video and depth data. He’s stitching and processing this data on an i7 Linux box with an Nvidia GeForce GTX 770 video card. Powerful hardware for sure, but not the cutting-edge monster rig one might expect. [Oliver] also documented his software stack. He’s using the Vrui VR Toolkit, the Kinect 3D Video Capture Project, and the Collaboration Infrastructure.
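
For the curious, here is roughly what combining depth data from several cameras involves. This is a minimal Python sketch under simple assumptions, not [Oliver’s] code or anything from his software stack: the function names, the pinhole intrinsics (`fx`, `fy`, `cx`, `cy`), and the camera poses are all made up for illustration. Each depth pixel is back-projected into a 3D point, then moved into a shared room frame using that camera’s pose.

```python
import math

def backproject(depth, fx, fy, cx, cy):
    """Turn a depth image (rows of metres, 0 = no reading) into camera-space points.

    Assumes an ideal pinhole camera; fx, fy, cx, cy are hypothetical intrinsics.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels with no depth reading
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points

def to_world(points, yaw, translation):
    """Rotate points about the vertical (y) axis by yaw radians, then translate.

    A real rig would use a full calibrated 3D rotation; yaw-only keeps the sketch short.
    """
    c, s = math.cos(yaw), math.sin(yaw)
    tx, ty, tz = translation
    return [(c * x + s * z + tx, y + ty, -s * x + c * z + tz) for x, y, z in points]

def merge(cameras):
    """Combine every camera's depth image into one point cloud in the shared frame."""
    cloud = []
    for cam in cameras:
        pts = backproject(cam["depth"], *cam["intrinsics"])
        cloud.extend(to_world(pts, cam["yaw"], cam["translation"]))
    return cloud
```

A real system would also need calibrated poses for each camera and a way to attach the color video to the points, and it would have to do all of this fast enough to keep latency low.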

We can’t wait to see what [Oliver] does when he gets his hands on the Kinect One (and some good Linux drivers for it).
