Cable harnesses made wire management a much more reliable and consistent affair in electronic equipment, and while things like printed circuit boards have done away with many wires, cable harnessing still has its place today. Here is a short how-to on lacing cables, taken from a 1962 document and thoughtfully made available on the web by [Gary Allsebrook] and [Jeff Dairiki].

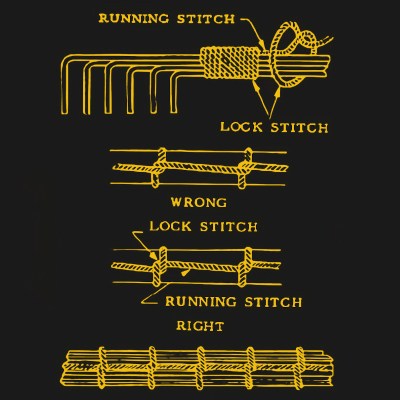

It’s a short resource that is to the point in all the ways we love to see. The diagrams are very clear and the descriptions are concise, and everything is done for a reason. The knots are self-locking, ensuring that things stay put without being overly tight or constrictive.

According to the document, the ideal material for lacing cables is a ribbon-like nylon cord (which is less likely to bite into wire insulation than a cord with a round profile), but the knots and techniques apply to whatever material one may wish to use.

Cable lacing can be done ad hoc, but back in the day cable assemblies were made separately and electrically tested on jigs prior to installation. In a way, such assemblies served a similar purpose to traces on a circuit board today.

Neatly wrapping cables really has its place, and while doing so by hand can be satisfying, we’ve also seen custom-made tools for wrapping cables with PTFE tape.