The people at Two Bit Circus are at it again, this time with a futuristic racing simulator where the user controls the experience. It was developed by [Brent Bushnell] and [Eric Gradman] along with a handful of engineers and designers in Los Angeles, California. The immersive gaming chair is built around an actual racing seat, and foot pedals were added to make the driver feel more like they are in a real race. Cooling fans were placed on top for haptic feedback, and a Microsoft Kinect was integrated into the system to detect hand gestures that control what is shown on the various screens.

The team completed the project within thirty days during a challenge from Best Buy, who wanted to see if they could create the future of viewing experiences. Problems surfaced throughout that time frame, though, creating obstacles involving the video cards, monitors, and shipping dates. They got it done and are looking towards integrating their work into restaurants like Dave & Buster’s and other facilities like arcades and bars (at least that’s the rumor going around town). The five-part mini-series that was produced around this device can be seen after the break:

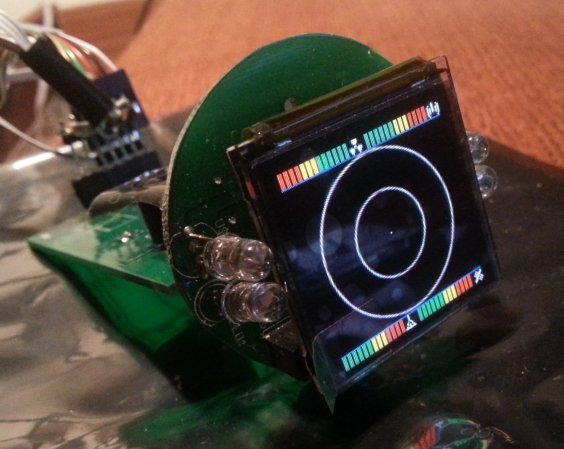

Becoming aware that you are dreaming can be difficult to achieve. But as [Rob] showed on his blog, monitoring the lucid experience once it happens doesn’t have to be costly. Instead, household items can be fashioned together to make a