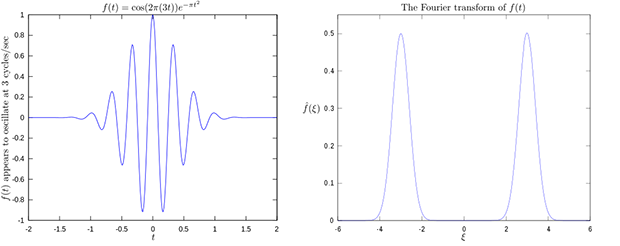

The Raspberry Pi has been around for two years now, and still there’s little the hardware hacker can actually do with the integrated GPU. That just changed: the Raspberry Pi Foundation has announced a library for computing Fourier transforms on the GPU.

For those of you who haven’t yet taken your DSP course, Fourier transforms take a function (or audio signal, radio signal, or what have you) and break it down into its component frequencies, telling you how much of each one is present. It’s damn useful for everything from software defined radios to guitar pedals, and the new GPU_FFT library is about ten times faster at this task than the Raspi’s CPU.
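If the idea is still fuzzy, here is a minimal sketch of what a Fourier transform does to sampled data. It is a naive discrete Fourier transform in plain Python, not the GPU_FFT API and nowhere near as fast; the names `dft`, `dominant_bin`, and the two-tone test signal are made up for illustration.

```python
import cmath
import math


def dft(samples):
    """Naive O(N^2) discrete Fourier transform (illustrative, not GPU_FFT)."""
    n = len(samples)
    return [
        sum(samples[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def dominant_bin(samples):
    """Return the index of the strongest frequency bin below Nyquist."""
    spectrum = dft(samples)
    half = len(samples) // 2
    magnitudes = [abs(c) for c in spectrum[:half]]
    return max(range(half), key=lambda k: magnitudes[k])


# Hypothetical test signal: two sine waves completing 5 and 12 cycles
# over the 64-sample window, the second at half the amplitude.
N = 64
signal = [
    math.sin(2 * math.pi * 5 * t / N) + 0.5 * math.sin(2 * math.pi * 12 * t / N)
    for t in range(N)
]

# Magnitude of each frequency bin; the peaks mark the tones in the signal.
mags = [abs(c) for c in dft(signal)]
```

The two largest values in the first half of `mags` land in bins 5 and 12, one for each tone mixed into the signal, with the quieter tone showing up as the smaller peak. An FFT produces the same spectrum with far less work, which is the part GPU_FFT hands to the GPU.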

You can get a copy of the GPU_FFT library by running rpi-update on your Pi. If you happen to build anything interesting – something with a software defined radio or even a guitar pedal – you’re more than welcome to send it in to the Hackaday tips line. We’d love to see what you’re up to.