The future, as seen in the popular culture of half a century or more ago, was usually depicted as quite rosy. Technology would have put every possible convenience at our fingertips, and we’d all live in futuristic automated homes, no doubt while wearing silver clothing and dreaming about our next vacation on Mars.

Of course, it’s not quite worked out this way. A family from 1965 whisked here in a time machine would miss a few things such as a printed newspaper, the landline telephone, or receiving a handwritten letter; they would probably marvel at the possibilities of the Internet, but they’d recognise most of the familiar things around us. We still sit on a sofa in front of a television for relaxation even if the TV is now a large LCD that plays a streaming service, we still drive cars to the supermarket, and we still cook our food much the way they did. George Jetson has not yet even entered the building.

The Future is Here, and it Responds to “Alexa”

There’s one aspect of the Jetsons future that has begun to happen though. It’s not the futuristic automation of projects such as Disneyland’s Monsanto House of the Future, but instead it’s our current stuttering home automation efforts. We’re not having domestic robots in pinnies hand us rolled-up newspapers, but we’re installing smart lightbulbs and thermostats, and we’re voice-controlling them through a variety of home hub devices. The future is here, and it responds to “Alexa”.

But for all the success that Alexa and other devices like it have had in conquering the living rooms of gadget fans, they’ve done a poor job of generating a profit. Alexa was supposed to be a gateway into Amazon services alongside the company’s Fire devices, a convenient household companion that would help find all those little things for sale on Amazon’s website, and of course, enable you to buy them. Then, Alexa was supposed to move beyond your Echo and into other devices, as your appliances could come pre-equipped with Alexa-on-a-chip. Your microwave oven would no longer have a dial on the front. Instead you would talk to it, and it would recognise the food you’d bought from Amazon and order more for you.

Instead of all that, Alexa has become an interface for connected home hardware, a way to turn on the light, view your Ring doorbell on models with screens, catch the weather forecast, and listen to music. It’s a novelty timepiece with that pod bay doors joke built-in, and worse than that for the retailer, it remains by its very nature unseen. Amazon have got their shopping cart into your living room, but you’re not using it and it hardly reminds you that it’s part of the Amazon empire at all.

But it wasn’t supposed to be that way. The idea was that you might look up from your work and say “Alexa, order me a six-pack of beer!”, and while it might not come immediately, your six-pack would duly arrive. It was supposed to be a friendly gateway to commerce on the website that has everything, and now they can’t even persuade enough people to give it a celebrity voice for a few bucks.

The Gadget You Love to Hate

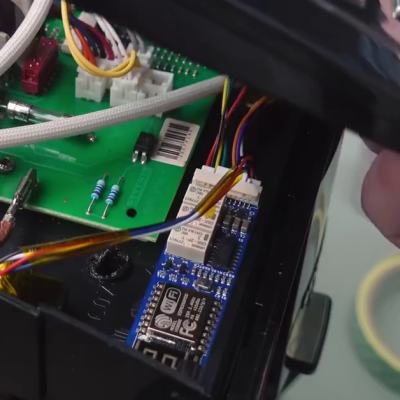

In the first few days after the Echo’s UK launch, a member of my hackerspace installed his in the space. He soon became exasperated as members learned that “Alexa, add butt plug to my wish list” would do just that. But it was in that joke that we could see the problem with the whole idea of Alexa as an interface for commerce. He had locked down all purchasing options, but as it turns out, many people in San Diego hadn’t done the same thing. As the stories rolled in of kids spending hundreds of their parents’ hard-earned on toys, it would be a foolhardy owner who would leave purchasing enabled. Worse still, while the public remained largely in ignorance, the potential of the device for data gathering and unauthorized access hadn’t evaded researchers. It’s fair to say that our community has loved the idea of a device like the Echo, but many of us wouldn’t let one into our own homes under any circumstances.

So Alexa hasn’t been a success as a gateway to commerce, but conversely it’s been a huge sales success in itself. The devices have sold like hot cakes, but since they’ve been sold at close to cost, they haven’t been the commercial bonanza Amazon might have hoped for. But what can be learned from this, other than that the world isn’t ready for a voice-activated shopping trolley?

Sadly, for most Alexa users it seems that a device piping their actions back to a large company’s data centres is not enough of a concern. It’s an easy prediction that Alexa and other services like it will continue to evolve, with the inevitable AI pixie dust sprinkled on them. A bet could be on the killer app being not a personal assistant but a virtual friend with some connections across a group of people, perhaps a family or a group of friends. In due course we’ll also see locally hosted and open source equivalents appearing on yet-to-be-released hardware that will condense what takes a data centre of today’s GPUs into a single board computer. It’s not often that our community rejoices in being late to a technological party, but I for one want an Alexa equivalent that I control rather than one that invades my privacy for a third party.